Introduction

As organizations continue to mature their cloud operating models, many of the principles and practices that have helped with the application delivery lifecycle have been adopted for infrastructure and platform solutions. Reconciling the observed state against the desired state from some source of truth is a staple of modern continuous deployment; however, this is often focused on workloads and configuration within a Kubernetes context. With infrastructure as code commonly represented in a DSL and applied by separate workflows, the mechanisms to orchestrate infrastructure, platforms and applications often sit apart.

Consolidating these processes to operate and execute in the same way provides benefits for consistency and conformity, and reduces cognitive overhead. Having a common method for manifesting the desired state of our cloud estates is increasingly achievable by extending the Kubernetes API with custom resources and controllers that represent cloud resources and constructs, which can then adhere to the same practices and processes that already exist for application delivery.

As cloud providers move to adopt this method for managing infrastructure and platform lifecycles, we can begin to incorporate such resources into our deployment patterns and have a consistent, declarative representation of entire cloud environments.

Google Cloud provide services that facilitate this approach to managing cloud resources through Kubernetes objects. These services also manage the reconciliation process, ensuring that the state is kept in sync with the source of truth in source control.
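To make the reconciler pattern concrete, here is a minimal Python sketch of the comparison a reconciler performs. It is purely illustrative and is not how Config Sync or Config Connector are implemented: the desired state (for example, manifests in Git) and the observed state (for example, what the cloud APIs report for the resources this reconciler manages) are modelled as plain dictionaries, and the resource names are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Plan:
    """Actions needed to converge the observed state on the desired state."""
    create: list = field(default_factory=list)
    update: list = field(default_factory=list)
    delete: list = field(default_factory=list)


def plan_reconcile(desired: dict, observed: dict) -> Plan:
    """Diff desired state against observed state.

    Both arguments map a resource identifier to its spec. `observed` is
    assumed to contain only resources this reconciler manages, so anything
    in it that is no longer declared is pruned.
    """
    plan = Plan()
    for name, spec in sorted(desired.items()):
        if name not in observed:
            plan.create.append(name)  # declared but missing
        elif observed[name] != spec:
            plan.update.append(name)  # present but drifted
    plan.delete = sorted(set(observed) - set(desired))  # no longer declared
    return plan


# Hypothetical resources: one drifted topic, one new subscription, one orphan.
desired = {
    "PubSubTopic/orders": {"labels": {"env": "dev"}},
    "PubSubSubscription/orders-sub": {"topicRef": "orders"},
}
observed = {
    "PubSubTopic/orders": {"labels": {"env": "test"}},
    "PubSubTopic/legacy": {"labels": {}},
}
plan = plan_reconcile(desired, observed)
print(plan)
```

A real reconciler repeats this comparison on a loop, which is why drift introduced outside the source of truth tends to be corrected rather than persisting.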

This series of posts will walk through how to use Google Cloud services for Fleet management, for cluster and platform lifecycle, and for application deployments, all through Kubernetes resource definitions and the reconciler pattern.

Config Connector

By enabling Config Connector as a GKE addon, Google Cloud resources can be created and managed through Kubernetes objects. Config Connector provides Kubernetes Custom Resource Definitions (see resource reference) and Controllers to reconcile the Google Cloud environment with the desired state.

Most Google Cloud resources are available in Config Connector, creating opportunities for powerful compositions where infrastructure, platform and application definitions coexist. One example is Workload Identity, where the required Cloud IAM resources can be managed alongside the Kubernetes ServiceAccount and the application requiring the permissions. This opens up many possibilities for packaging infrastructure and applications together and managing their lifecycles as one. For example, an application that requires a cache can deploy a RedisInstance or MemcacheInstance to create the dependent cloud resource, or deploy a database using a SQLInstance (and SQLDatabase), a SpannerInstance (and SpannerDatabase) or a FirestoreIndex. For event-driven applications, a PubSubTopic, PubSubSchema and PubSubSubscription can be deployed alongside the application, together with the IAMPolicy required for Workload Identity permissions.

```yaml
# File: wi-example.yaml
apiVersion: pubsub.cnrm.cloud.google.com/v1beta1
kind: PubSubSubscription
metadata:
  name: pubsubsubscription-example
spec:
  topicRef:
    name: pubsub-topic-example
---
apiVersion: pubsub.cnrm.cloud.google.com/v1beta1
kind: PubSubTopic
metadata:
  name: pubsub-topic-example
---
apiVersion: iam.cnrm.cloud.google.com/v1beta1
kind: IAMServiceAccount
metadata:
  name: iampolicy-dep-workloadidentity
spec:
  displayName: Example Service Account
---
apiVersion: iam.cnrm.cloud.google.com/v1beta1
kind: IAMPolicy
metadata:
  labels:
    label-one: value-one
  name: iampolicy-sample-pubsubadmin
spec:
  resourceRef:
    kind: PubSubTopic
    # Must match the name of the PubSubTopic declared above.
    name: pubsub-topic-example
  bindings:
    - role: roles/editor
      members:
        - serviceAccount:iampolicy-dep-workloadidentity@${PROJECT_ID?}.iam.gserviceaccount.com
---
apiVersion: iam.cnrm.cloud.google.com/v1beta1
kind: IAMPolicy
metadata:
  name: iampolicy-sample-workloadidentity
spec:
  resourceRef:
    kind: IAMServiceAccount
    name: iampolicy-dep-workloadidentity
  bindings:
    - role: roles/iam.workloadIdentityUser
      members:
        # Lets the Kubernetes ServiceAccount below impersonate the Google service account.
        - serviceAccount:${PROJECT_ID?}.svc.id.goog[default/iampolicy-dep-workloadidentity]
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: iampolicy-dep-workloadidentity
  annotations:
    iam.gke.io/gcp-service-account: iampolicy-dep-workloadidentity@${PROJECT_ID?}.iam.gserviceaccount.com
```

This fundamentally shifts the declaration, management and execution of our cloud environments into Kubernetes, which becomes the central point for all infrastructure, platform and application state. Because our cloud resources are now defined declaratively in Kubernetes manifests, they are also subject to the same workflows and quality gates that ensure conformance and compliance throughout the codebase.
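In wi-example.yaml, the Workload Identity wiring relies purely on naming conventions: the Google service account email, the Workload Identity member granted roles/iam.workloadIdentityUser, and the annotation on the Kubernetes ServiceAccount. The Python helpers below are a hypothetical sketch that derives those three strings from a project ID, namespace and name, which can help catch typos when templating such manifests. The function names and the "my-project" project ID are illustrative only.

```python
def gsa_email(name: str, project_id: str) -> str:
    """Email address of a Google service account created in project_id."""
    return f"{name}@{project_id}.iam.gserviceaccount.com"


def workload_identity_member(project_id: str, namespace: str, ksa_name: str) -> str:
    """IAM member that identifies a Kubernetes ServiceAccount through Workload Identity."""
    return f"serviceAccount:{project_id}.svc.id.goog[{namespace}/{ksa_name}]"


def ksa_annotations(gsa_name: str, project_id: str) -> dict:
    """Annotation telling GKE which Google service account a KSA impersonates."""
    return {"iam.gke.io/gcp-service-account": gsa_email(gsa_name, project_id)}


# "my-project" is a hypothetical project ID; the names match wi-example.yaml,
# where the KSA and the Google service account share one name.
name = "iampolicy-dep-workloadidentity"
print(workload_identity_member("my-project", "default", name))
print(ksa_annotations(name, "my-project"))
```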

With GKE now administering our cloud infrastructure we can look at different patterns and topologies for bootstrapping our cloud environments and resources. As a centralized GKE cluster running Config Connector is required to administer our cloud environment, there’s a dependency on creating and maintaining that central cluster.

Config Controller

For a near no-ops approach to using GKE with Config Connector, Config Controller offers a managed service for provisioning and orchestrating Google Cloud resources through the Kubernetes API.

Config Controller is a managed offering combining GKE and Config Connector with Config Sync and Policy Controller to provide a central platform from which declarative resources can be reconciled against Google Cloud APIs. This delivers an out-of-the-box experience where a GCP-managed, best-practice GKE cluster (available in Standard and Autopilot modes) is preconfigured with Config Connector and Config Sync, requiring no further setup to start deploying Google Cloud resources.

This composition of managed platforms and tooling results in a pull-based model for orchestrating cloud resources that can scale across an entire organization. With the state of Google Cloud resources managed through Git and GKE, operational overhead can be reduced by reusing the existing tooling and workflows for consuming GKE clusters. Config Controller also becomes the central policy enforcement point for managing and securing cloud resources.

Given that most Google Cloud resources can be maintained through Config Controller and Config Connector, fleet management can coexist with application workloads as Kubernetes resources, and provisioning and bootstrapping clusters in the same way covers the entire platform lifecycle.

In this example, Config Controller will use Config Sync to create GKE clusters using Config Connector resources. From there, the GKE Hub API will bootstrap the clusters with their own deployment of Config Sync to trigger the full reconciliation process for all platform configurations, addons and applications.

Deploying the Fleet

Config Controller

Firstly, Config Controller will act as the central point to reconcile the Fleet. This is itself a GKE cluster (either Standard or Autopilot) that is managed by Google Cloud and is preinstalled with Config Sync and Config Connector.

```shell
gcloud alpha anthos config controller create gke-config-controller \
  --location=europe-west1 \
  --full-management \
  --project=${PROJECT_ID}
```

The --full-management flag means this Config Controller instance will use GKE Autopilot. For the full no-ops experience, GKE Autopilot manages the node pools and scales to accommodate all the Config Sync and Config Connector components.

At the time of writing, Config Controller is in alpha, so eventually this imperative command should itself become declarative as part of a provisioning workflow. There is a Config Connector resource for creating a Config Controller instance (ConfigControllerInstance); however, it would itself require a GKE cluster running Config Connector, creating a chicken-and-egg scenario for bootstrapping clusters.

In order for Config Connector to administer Google Cloud resources, its Google service account requires IAM permissions that match the scope of what it manages.

```shell
# Look up the Google service account used by Config Connector.
export SA_EMAIL="$(kubectl get ConfigConnectorContext -n config-control \
  -o jsonpath='{.items[0].spec.googleServiceAccount}' 2> /dev/null)"

# Grant it permissions on the project.
gcloud projects add-iam-policy-binding "${PROJECT_ID}" \
  --member "serviceAccount:${SA_EMAIL}" \
  --role "roles/owner" \
  --condition=None
```

Config Controller is now ready to manage Google Cloud resources using Config Connector. These Config Connector resources can be applied directly to the cluster using kubectl; however, as this cluster is also running Config Sync, manifests can instead be synced to the cluster from a Git repository.

RootSync

To synchronize resources from a Git repository, Config Sync uses either a RootSync or a RepoSync resource. The difference is one of scope: a RootSync can reconcile cluster-scoped resources and resources in any namespace, whereas a RepoSync can only reconcile resources within its own namespace.
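As a simplified model of that scoping rule, the hypothetical Python function below decides whether a given sync object may reconcile a given Kubernetes object. It ignores any other restrictions Config Sync applies and is only meant to make the RootSync/RepoSync distinction explicit; the function name and signature are illustrative.

```python
def can_reconcile(sync_kind: str, sync_namespace: str,
                  cluster_scoped: bool, object_namespace: str = "") -> bool:
    """Simplified model of which objects a Config Sync source may manage."""
    if sync_kind == "RootSync":
        # Root sources may manage cluster-scoped objects and objects in any namespace.
        return True
    if sync_kind == "RepoSync":
        # Namespace sources are confined to namespaced objects in their own namespace.
        return not cluster_scoped and object_namespace == sync_namespace
    raise ValueError(f"unknown sync kind: {sync_kind}")


# A namespaced object in config-control versus a cluster-scoped object.
print(can_reconcile("RepoSync", "config-control", False, "config-control"))  # True
print(can_reconcile("RepoSync", "config-control", True))                     # False
print(can_reconcile("RootSync", "config-management-system", True))           # True
```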

Managing the Fleet will require a RootSync that represents the source containing all the Config Connector resources.

```yaml
# File: root-sync.yaml
apiVersion: configsync.gke.io/v1beta1
kind: RootSync
metadata:
  name: root-sync
  namespace: config-management-system
spec:
  sourceFormat: unstructured
  git:
    repo: https://source.developers.google.com/p/jetstack-paul/r/gke-config-controller
    branch: develop
    dir: root-sync
    # Authenticate to Cloud Source Repositories via Workload Identity.
    auth: gcpserviceaccount
    gcpServiceAccountEmail: config-sync-sa@jetstack-paul.iam.gserviceaccount.com
```

This repository uses Cloud Source Repositories so that Workload Identity can be used to manage access to the source. The RootSync specifies which branch and directory to pull from the source repository. Here, the root-sync directory contains all the resources required to create the Fleet.

```
$ tree root-sync
root-sync
├── GKEHubFeature.yaml
├── Service.yaml
├── dev
│   ├── ContainerCluster.yaml
│   ├── GKEHubFeatureMembership.yaml
│   └── GKEHubMembership.yaml
└── staging
    ├── ContainerCluster.yaml
    ├── GKEHubFeatureMembership.yaml
    └── GKEHubMembership.yaml
```

ContainerCluster

As Config Controller can create Google Cloud resources, it can itself be used to create GKE clusters. The ContainerCluster resource represents either a Standard or an Autopilot GKE cluster to be deployed to Google Cloud. In its simplest form, a ContainerCluster only needs to enable Autopilot and specify a location and a release channel.

```yaml
# File: root-sync/dev/ContainerCluster.yaml
apiVersion: container.cnrm.cloud.google.com/v1beta1
kind: ContainerCluster
metadata:
  name: gke-kcc-dev
  namespace: config-control
  labels:
    mesh_id: proj-012345678901
spec:
  description: An autopilot cluster.
  enableAutopilot: true
  location: europe-west1
  releaseChannel:
    channel: RAPID
```

Alongside the GKE Autopilot cluster, the GKE Hub API can be represented as Config Connector resources to bootstrap the newly created cluster. As discussed in a previous blog, this approach has the advantage of being a native feature within GKE, as the creation and configuration of the cluster occur indirectly through Google Cloud APIs, not directly through the Kubernetes API.

Typically, a cluster is created and requests are then sent to its Kubernetes API to deploy the resources that configure it. Here, the GKE Hub is instead used to install Anthos Config Management (which runs Config Sync in the cluster), so no requests are sent directly to the Kubernetes API in order to bootstrap the cluster. This avoids opening the cluster up to API server requests before it has been sufficiently initialized and hardened, as Config Sync can reconcile the security configuration before the cluster is made available to consumers.

GKEHubMembership

To install Anthos Features such as Anthos Config Management (ACM) or Anthos Service Mesh (ASM) on the cluster, it must be registered in the GKE Hub. Again, this can be done through a Config Connector resource (GKEHubMembership) which is deployed alongside the ContainerCluster.

```yaml
# File: root-sync/dev/GKEHubMembership.yaml
apiVersion: gkehub.cnrm.cloud.google.com/v1beta1
kind: GKEHubMembership
metadata:
  annotations:
    cnrm.cloud.google.com/project-id: jetstack-paul
    # Register the cluster only once the ContainerCluster exists.
    config.kubernetes.io/depends-on: container.cnrm.cloud.google.com/namespaces/config-control/ContainerCluster/gke-kcc-dev
  name: gkehubmembership-dev
  namespace: config-control
spec:
  location: global
  authority:
    issuer: https://container.googleapis.com/v1/projects/jetstack-paul/locations/europe-west1/clusters/gke-kcc-dev
  description: gke-kcc-dev
  endpoint:
    gkeCluster:
      resourceRef:
        name: gke-kcc-dev
```

This membership references the GKE cluster on which GKE Hub Features (e.g. Anthos Config Management, Anthos Service Mesh) can be enabled. Cluster registration is required to incorporate any cluster into the Fleet, including clusters deployed using Anthos on VMware, Anthos on Bare Metal, or attached clusters in EKS or AKS. Once any of these clusters is registered, the same GKE Hub Features can be deployed, providing a consistent experience regardless of Kubernetes distribution or environment.

GKEHubFeatureMembership

Once the cluster is registered with a GKEHubMembership, features can be enabled to deploy additional components to each member cluster using the GKEHubFeatureMembership resource.

```yaml
# File: root-sync/dev/GKEHubFeatureMembership.yaml
apiVersion: gkehub.cnrm.cloud.google.com/v1beta1
kind: GKEHubFeatureMembership
metadata:
  name: gkehubfeaturemembership-acm-dev
  namespace: config-control
  annotations:
    # Enable ACM only once the cluster is registered.
    config.kubernetes.io/depends-on: gkehub.cnrm.cloud.google.com/namespaces/config-control/GKEHubMembership/gkehubmembership-dev
spec:
  projectRef:
    external: jetstack-paul
  location: global
  membershipRef:
    name: gkehubmembership-dev
  featureRef:
    name: gkehubfeature-acm
  configmanagement:
    configSync:
      sourceFormat: unstructured
      git:
        syncRepo: "https://source.developers.google.com/p/jetstack-paul/r/gke-config-controller"
        syncBranch: "develop"
        policyDir: "user-clusters/dev"
        syncWaitSecs: "20"
        syncRev: "HEAD"
        secretType: gcpserviceaccount
        gcpServiceAccountRef:
          external: config-sync-sa@jetstack-paul.iam.gserviceaccount.com
    policyController:
      enabled: true
      exemptableNamespaces:
        - "kube-system"
      referentialRulesEnabled: true
      logDeniesEnabled: true
      templateLibraryInstalled: true
      auditIntervalSeconds: "20"
    hierarchyController:
      enabled: false
```

This resource is the Config Connector representation of how to deploy and configure Anthos Config Management (ACM). Here, spec.configmanagement describes the repository, branch and directory to sync to the cluster, along with configuration that enables Policy Controller, which will be used later to enforce Constraints.

Note that the directory being synchronized is user-clusters/dev. This path can be used to reconcile different resources to the ContainerCluster from a repository depending on the environment or type of cluster. So far in this demonstration, a ‘development’ ContainerCluster (GKE Autopilot) was created with a GKEHubFeatureMembership to sync all ‘development’ cluster configurations using ACM.
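Because each environment differs only in a few values (cluster name, location and the user-clusters/<env> directory), the Hub manifests lend themselves to templating. The Python function below is a hypothetical sketch of that idea, reusing the naming conventions from this post; the "prod" environment, "my-project" project and europe-west4 location are invented for illustration, and Policy Controller settings are omitted for brevity. It prints JSON, which is also valid YAML.

```python
import json


def hub_manifests(env: str, project_id: str, location: str, repo: str,
                  sync_sa_email: str, branch: str = "develop",
                  namespace: str = "config-control") -> list:
    """Build the GKEHubMembership and ACM GKEHubFeatureMembership for one environment."""
    cluster = f"gke-kcc-{env}"
    membership = f"gkehubmembership-{env}"
    membership_doc = {
        "apiVersion": "gkehub.cnrm.cloud.google.com/v1beta1",
        "kind": "GKEHubMembership",
        "metadata": {
            "name": membership,
            "namespace": namespace,
            "annotations": {
                "cnrm.cloud.google.com/project-id": project_id,
                # Register the cluster only after the ContainerCluster exists.
                "config.kubernetes.io/depends-on":
                    f"container.cnrm.cloud.google.com/namespaces/{namespace}"
                    f"/ContainerCluster/{cluster}",
            },
        },
        "spec": {
            "location": "global",
            "authority": {
                "issuer": f"https://container.googleapis.com/v1/projects/{project_id}"
                          f"/locations/{location}/clusters/{cluster}",
            },
            "description": cluster,
            "endpoint": {"gkeCluster": {"resourceRef": {"name": cluster}}},
        },
    }
    feature_doc = {
        "apiVersion": "gkehub.cnrm.cloud.google.com/v1beta1",
        "kind": "GKEHubFeatureMembership",
        "metadata": {
            "name": f"gkehubfeaturemembership-acm-{env}",
            "namespace": namespace,
            "annotations": {
                # Enable ACM only after the membership has been created.
                "config.kubernetes.io/depends-on":
                    f"gkehub.cnrm.cloud.google.com/namespaces/{namespace}"
                    f"/GKEHubMembership/{membership}",
            },
        },
        "spec": {
            "projectRef": {"external": project_id},
            "location": "global",
            "membershipRef": {"name": membership},
            "featureRef": {"name": "gkehubfeature-acm"},
            "configmanagement": {
                "configSync": {
                    "sourceFormat": "unstructured",
                    "git": {
                        "syncRepo": repo,
                        "syncBranch": branch,
                        # Each environment syncs its own directory.
                        "policyDir": f"user-clusters/{env}",
                        "secretType": "gcpserviceaccount",
                        "gcpServiceAccountRef": {"external": sync_sa_email},
                    },
                },
            },
        },
    }
    return [membership_doc, feature_doc]


# Hypothetical "prod" environment.
docs = hub_manifests(
    env="prod",
    project_id="my-project",
    location="europe-west4",
    repo="https://source.developers.google.com/p/my-project/r/gke-config-controller",
    sync_sa_email="config-sync-sa@my-project.iam.gserviceaccount.com",
)
for doc in docs:
    print("---")
    print(json.dumps(doc, indent=2))
```

The ContainerCluster itself is deliberately left out, since cluster specs (Autopilot versus Standard, node configuration) tend to differ between environments more than the Hub wiring does.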

This pattern can scale to encompass all GKE clusters in the Fleet, each represented as resources along with the accompanying ACM sync. For example, to create a new cluster for the ‘staging’ environment, a ContainerCluster that deploys a GKE Standard cluster can be added to the root-sync directory, along with a GKEHubMembership and a GKEHubFeatureMembership that syncs the requisite ‘staging’ configuration. The RootSync in Config Controller then applies them.

```yaml
# File: root-sync/staging/ContainerCluster.yaml
apiVersion: container.cnrm.cloud.google.com/v1beta1
kind: ContainerCluster
metadata:
  name: gke-kcc-staging
  namespace: config-control
  labels:
    mesh_id: proj-993897508389
spec:
  location: europe-west2
  initialNodeCount: 3
  nodeConfig:
    spot: true
  nodeLocations:
    - europe-west2-a
    - europe-west2-b
    - europe-west2-c
  monitoringConfig:
    enableComponents:
      - SYSTEM_COMPONENTS
    managedPrometheus:
      enabled: true
  workloadIdentityConfig:
    workloadPool: jetstack-paul.svc.id.goog
  networkingMode: VPC_NATIVE
  networkRef:
    external: main
  subnetworkRef:
    external: main-subnet
  ipAllocationPolicy:
    servicesSecondaryRangeName: servicesrange
    clusterSecondaryRangeName: clusterrange
  clusterAutoscaling:
    enabled: true
    autoscalingProfile: BALANCED
    resourceLimits:
      - resourceType: cpu
        maximum: 100
        minimum: 10
      - resourceType: memory
        maximum: 1000
        minimum: 100
  releaseChannel:
    channel: RAPID
  addonsConfig:
    networkPolicyConfig:
      disabled: false
    dnsCacheConfig:
      enabled: true
    configConnectorConfig:
      enabled: true
    horizontalPodAutoscaling:
      disabled: false
  networkPolicy:
    enabled: true
  podSecurityPolicyConfig:
    enabled: false
  verticalPodAutoscaling:
    enabled: true
```

```yaml
# File: root-sync/staging/GKEHubMembership.yaml
apiVersion: gkehub.cnrm.cloud.google.com/v1beta1
kind: GKEHubMembership
metadata:
  annotations:
    cnrm.cloud.google.com/project-id: jetstack-paul
    config.kubernetes.io/depends-on: container.cnrm.cloud.google.com/namespaces/config-control/ContainerCluster/gke-kcc-staging
  name: gkehubmembership-staging
  namespace: config-control
spec:
  location: global
  authority:
    issuer: https://container.googleapis.com/v1/projects/jetstack-paul/locations/europe-west2/clusters/gke-kcc-staging
  description: gke-kcc-staging
  endpoint:
    gkeCluster:
      resourceRef:
        name: gke-kcc-staging
```

```yaml
# File: root-sync/staging/GKEHubFeatureMembership.yaml
apiVersion: gkehub.cnrm.cloud.google.com/v1beta1
kind: GKEHubFeatureMembership
metadata:
  name: gkehubfeaturemembership-acm-staging
  namespace: config-control
  annotations:
    config.kubernetes.io/depends-on: gkehub.cnrm.cloud.google.com/namespaces/config-control/GKEHubMembership/gkehubmembership-staging
spec:
  projectRef:
    external: jetstack-paul
  location: global
  membershipRef:
    name: gkehubmembership-staging
  featureRef:
    name: gkehubfeature-acm
  configmanagement:
    configSync:
      sourceFormat: unstructured
      git:
        syncRepo: "https://source.developers.google.com/p/jetstack-paul/r/gke-config-controller"
        syncBranch: "develop"
        policyDir: "user-clusters/staging"
        syncWaitSecs: "20"
        syncRev: "HEAD"
        secretType: gcpserviceaccount
        gcpServiceAccountRef:
          external: config-sync-sa@jetstack-paul.iam.gserviceaccount.com
    policyController:
      enabled: true
      exemptableNamespaces:
        - "kube-system"
      referentialRulesEnabled: true
      logDeniesEnabled: true
      templateLibraryInstalled: true
      auditIntervalSeconds: "20"
    hierarchyController:
      enabled: false
```

Note: Resources that are managed by Anthos Config Management can include config.kubernetes.io/depends-on annotations to ensure that dependent resources are applied in the correct order.

https://cloud.google.com/anthos-config-management/docs/how-to/declare-resource-dependency
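Config Sync handles this ordering itself, including waiting for a dependency to be reconciled before applying its dependents. Purely to illustrate what the annotation expresses, the Python sketch below reads depends-on values from manifests, builds a dependency graph and orders it with the standard library's graphlib. The helper names are hypothetical, and the reference format (<group>/namespaces/<namespace>/<Kind>/<name>) is taken from the annotations above, so only namespaced objects in a named API group are handled.

```python
from graphlib import TopologicalSorter  # Python 3.9+

DEPENDS_ON = "config.kubernetes.io/depends-on"


def object_ref(doc: dict) -> str:
    """Reference string for a namespaced object in a named API group."""
    group = doc["apiVersion"].split("/")[0]
    meta = doc["metadata"]
    return f"{group}/namespaces/{meta['namespace']}/{doc['kind']}/{meta['name']}"


def apply_order(docs: list) -> list:
    """Order objects so each one comes after everything it depends on."""
    graph = {}
    for doc in docs:
        annotation = doc["metadata"].get("annotations", {}).get(DEPENDS_ON, "")
        # The annotation may list several dependencies separated by commas.
        graph[object_ref(doc)] = {d.strip() for d in annotation.split(",") if d.strip()}
    return list(TopologicalSorter(graph).static_order())


def manifest(api_version, kind, name, depends_on=None):
    """Minimal manifest in the config-control namespace (illustrative helper)."""
    meta = {"name": name, "namespace": "config-control"}
    if depends_on:
        meta["annotations"] = {DEPENDS_ON: depends_on}
    return {"apiVersion": api_version, "kind": kind, "metadata": meta}


# The dev resources from this post, deliberately listed in reverse order.
dev = [
    manifest("gkehub.cnrm.cloud.google.com/v1beta1", "GKEHubFeatureMembership",
             "gkehubfeaturemembership-acm-dev",
             "gkehub.cnrm.cloud.google.com/namespaces/config-control/GKEHubMembership/gkehubmembership-dev"),
    manifest("gkehub.cnrm.cloud.google.com/v1beta1", "GKEHubMembership", "gkehubmembership-dev",
             "container.cnrm.cloud.google.com/namespaces/config-control/ContainerCluster/gke-kcc-dev"),
    manifest("container.cnrm.cloud.google.com/v1beta1", "ContainerCluster", "gke-kcc-dev"),
]
for ref in apply_order(dev):
    print(ref)
```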

Note: When a Config Connector resource is deleted, the associated Google Cloud resource is deleted by default. To keep the Google Cloud resource when the Kubernetes resource is deleted, add the cnrm.cloud.google.com/deletion-policy: abandon annotation.
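When manifests are generated or templated, this annotation can be added programmatically, for example to protect long-lived resources such as clusters from an accidental deletion in Git. A minimal Python sketch, with a hypothetical helper name, that returns an annotated copy and leaves the input untouched:

```python
import copy

DELETION_POLICY = "cnrm.cloud.google.com/deletion-policy"


def abandon_on_delete(doc: dict) -> dict:
    """Return a copy of a Config Connector manifest whose Google Cloud
    resource is kept (abandoned) when the Kubernetes object is deleted."""
    result = copy.deepcopy(doc)  # leave the caller's manifest untouched
    annotations = result.setdefault("metadata", {}).setdefault("annotations", {})
    annotations[DELETION_POLICY] = "abandon"
    return result


cluster = {
    "apiVersion": "container.cnrm.cloud.google.com/v1beta1",
    "kind": "ContainerCluster",
    "metadata": {"name": "gke-kcc-staging", "namespace": "config-control"},
}
protected = abandon_on_delete(cluster)
print(protected["metadata"]["annotations"])
```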

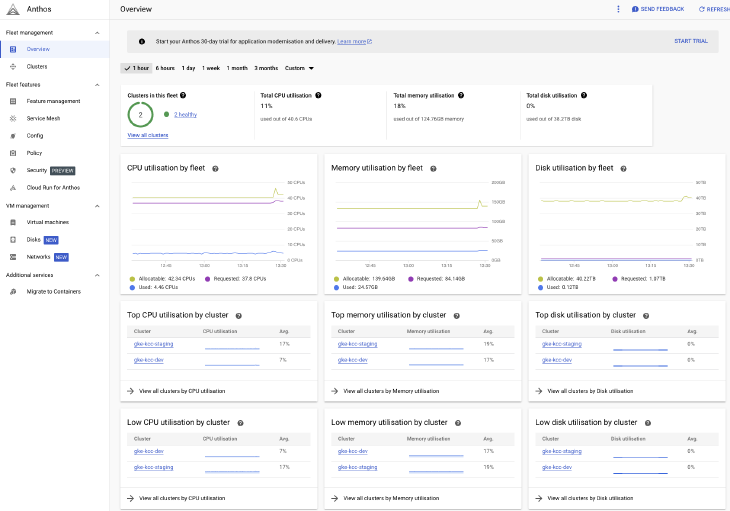

With both GKE clusters created and registered to the Fleet, the Anthos Dashboard then becomes the central interface for all cluster metrics, configuration and policy compliance.

Fleet Management Overview

Anthos Service Mesh

As well as configuring Anthos Config Management through the GKE Hub API and Config Connector resources, Anthos Service Mesh (ASM) is another feature that can be enabled on Fleet member clusters. By associating the ASM feature with the Fleet membership in the same way, ASM can be installed on the cluster with either a managed or an in-cluster control plane. When using the managed offering, Google applies the recommended control plane configuration and enables automatic data plane management (upgrading sidecar proxies by restarting workloads), with upgrades in line with the ASM release channel.

Note: GKE Autopilot only supports Managed Anthos Service Mesh.

```yaml
# File: root-sync/dev/GKEHubFeatureMembership.yaml
# ...
---
apiVersion: gkehub.cnrm.cloud.google.com/v1beta1
kind: GKEHubFeatureMembership
metadata:
  name: gkehubfeaturemembership-asm-dev
  namespace: config-control
spec:
  projectRef:
    external: jetstack-paul
  location: global
  membershipRef:
    name: gkehubmembership-dev
  featureRef:
    name: gkehubfeature-asm
  mesh:
    management: MANAGEMENT_AUTOMATIC
```

Note: By default, Anthos Service Mesh doesn’t install an Ingress Gateway. It is recommended to deploy and manage the control plane and gateways separately.

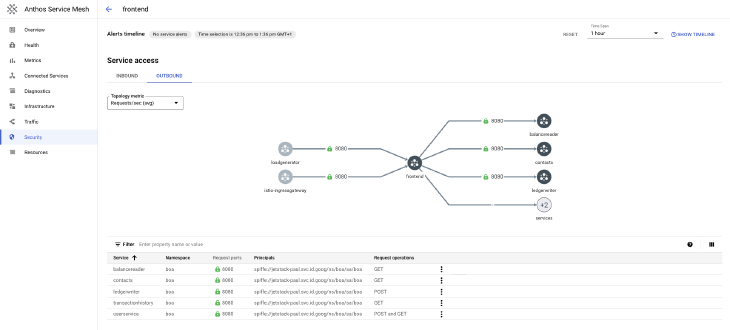

Once Anthos Service Mesh is installed, the topology and service-to-service communication is visible in the ASM dashboard within the Google Cloud Console. This captures every mesh deployed within the Fleet, giving a unified view of every configuration, service behavior and telemetry.

Anthos Service Mesh Security

With Anthos Service Mesh installed in the clusters, the entire cluster lifecycle and fleet management are now orchestrated by Config Controller and Config Connector resources. This establishes the core components of each GKE cluster: either Autopilot or Standard GKE provisioned, Anthos Config Management enabled and Anthos Service Mesh deployed.

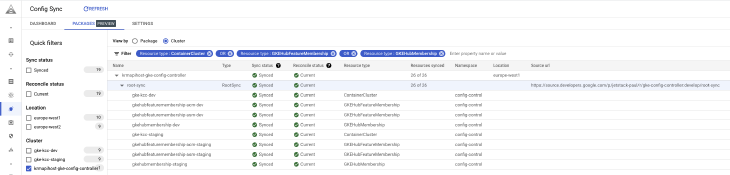

Config Controller is now syncing all the Config Connector resources that maintain the Fleet. These resources, along with their status, can be seen in the Anthos Dashboard.

Anthos Config Management RootSync

In the next post, each GKE cluster in the Fleet will bootstrap its own addons and applications using ACM and FluxCD, along with Constraints to enforce policies and Google Managed Prometheus for monitoring.

At Jetstack Consult, we’re often helping customers adopt and mature their cloud-native and Kubernetes offerings. If you’re interested in discussing how Google Cloud Anthos and GKE can help your digital transformation, get in touch and see how we can work together.

Paul is a Google Cloud Certified Fellow with a focus on application modernisation, Kubernetes administration and complementing managed services with open-source solutions. Find him on Twitter & LinkedIn.
