The machine identities powering your digital transformation are at risk. They could be cheetahs.

Genetic variability is a measure. It provides quantitative insight into the likelihood that individual genotypes—the collection of genes that make you “you” and me “me” in all our glorious uniqueness—will vary from one another in each population. As a baseline example, most of our planet’s animal species have variability in about 20 percent of their genes. About one-fifth of their genes can come in more than one form, just as human eyes can be blue, brown, green, or somewhere in between.

Consider the dizzying menagerie of dogs competing at Westminster Kennel Club every year. Poodles, Labradors, boxers, and mastiffs all look very different, but they have the same genes. The differences in appearance result from domesticated dogs having a higher-than-average genetic variability of about 27 percent. They were bred this way on purpose. This variability determines whether a given breed is lean or round, a sprinter or an endurance specialist, or sports short, curly fur versus long, flowing Lhasa Apso locks. Human populations, by comparison, vary from one another by only about 5.4 percent. That’s why all humans look largely the same, while dog breeds appear so radically diverse.

The cheetah, on the other hand, is not so lucky. Of all land mammals, cheetahs have the lowest genetic variability, with only one percent of their genes having variable forms. In essence, more than 99 percent of all cheetahs look the same, smell the same, walk the same and sound the same. Even long-tenured zookeepers have trouble telling individual cheetahs apart. This “sameness” was likely driven by a series of environmental bottlenecks, such as droughts, disease, and famines over millions of years, so that populations of African cheetahs were repeatedly whittled down to a small, partially inbred group of struggling survivors.

Why does it matter? In many cases, variability within a species safeguards its viability. Environments change, going from cold to hot and from wet to dry. Diseases like COVID-19 can suddenly flame up, with no previous exposure to test defense mechanisms and grant immunity. If a highly invariable population is sensitive to these changes or vulnerable to the new disease without a healthy supply of non-vulnerable variable types in their midst, the results can be disastrous.

The world’s fastest, sleekest, and least genetically diverse land mammal is just one quirky novel virus from becoming extinct. “Genetic variability” is the reserve store—the biological life raft—that would allow a species like the cheetah to weather nature’s storms.

The Need for Crypto-Agility

Surprisingly, cheetah evolution and survival can tell us a lot about the need for agility in modern cryptographic systems, the protocols and functions that allow machine-to-machine authentication and encryption. For the purposes of this paper we’ll define crypto-agility in a way that addresses two interrelated but different problem areas. First, the problems:

- On January 9 of this year, a Threatpost headline made an ominous announcement: “Exploit Fully Breaks SHA-1, Lowers the Attack Bar.” The ensuing article detailed a proof-of-concept attack that “fully and practically” broke the hash function called Secure Hash Algorithm 1, or SHA-1, which is used in code signing. Organizations like the United States’ National Institute of Standards and Technology (NIST) and the multinational Internet Engineering Task Force (IETF) removed SHA-1 as a supported algorithm two years ago, issuing warnings for security practitioners to deprecate its usage in favor of the newer, more robust SHA-2 family, which includes SHA-256. But these things take time: It wasn’t until June of last year that Apple browser sessions in iOS 13 and macOS Catalina actively rejected SHA-1 signed certificates and warned end users of these vulnerabilities.

- On March 4, ZDNet announced an equally unsettling cryptography-related headline, with “Let's Encrypt to Revoke 3 Million Certificates on March 4 Due to Software Bug.” Let’s Encrypt is a certificate authority (CA) that recently celebrated the issuance of its one-billionth Transport Layer Security (TLS) certificate, a method machines use—whether Apache web servers, F5 load balancers or the iPad you’re reading this on—to authenticate and trust each other over the internet. Let’s Encrypt had discovered a bug in its own internally developed back-end software that nullified a legally required feature called Certificate Authority Authorization (CAA). This feature allows domain owners to specify certain trusted CAs as issuers of TLS certificates for their domains, a means of assuring both business process continuity and security configurations for the certificates that authenticate machine-to-machine connections on the internet. Over 3 million certificates with this flaw would need to be discovered and reissued, and Let’s Encrypt created a list of impacted certificate serial numbers that its customers needed to immediately begin replacing.
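To make the CAA idea above concrete, here is a minimal Python sketch of the decision a CA has to make before issuing. It is a deliberately simplified model and not Let’s Encrypt’s implementation: the function name `caa_permits` and the `(tag, value)` record format are assumptions made for illustration, and the sketch ignores wildcard (`issuewild`) records, record parameters and lookups on parent domains.

```python
def caa_permits(caa_records, ca_domain):
    """Return True if ca_domain may issue under a simplified CAA model.

    caa_records: list of (tag, value) tuples for the domain, for example
    [("issue", "letsencrypt.org")]. This record format is hypothetical.
    """
    # Keep only "issue" records and drop any parameters after ';'.
    issue_values = [
        value.split(";")[0].strip().lower()
        for tag, value in caa_records
        if tag.lower() == "issue"
    ]
    if not issue_values:
        # No "issue" records at all: the domain owner set no restriction.
        return True
    # An "issue" value of ";" becomes "" here and therefore matches no CA.
    return ca_domain.lower() in issue_values
```

With a single record `("issue", "letsencrypt.org")`, this check would allow Let’s Encrypt and refuse every other CA, which is the guarantee the bug described above undermined.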

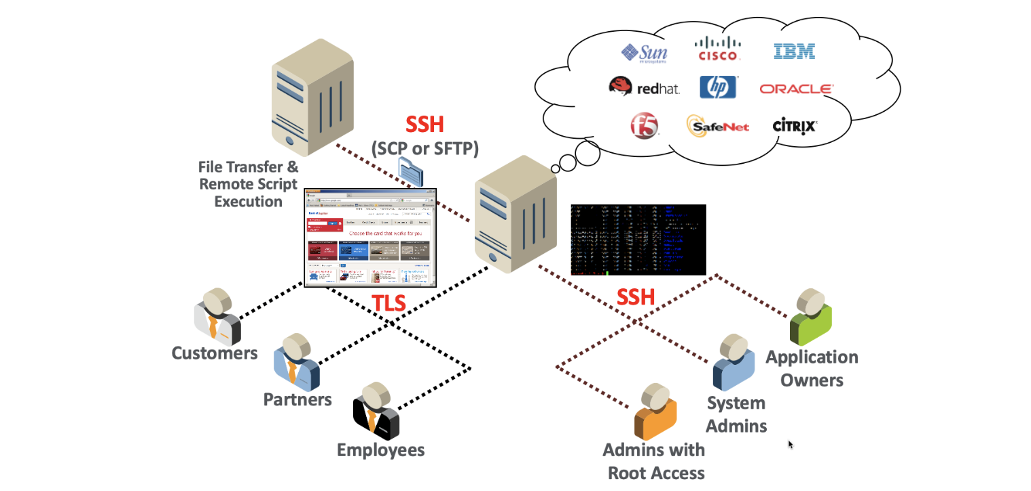

Why do these reports matter? Because machine identities matter. Everyone intuitively knows (but often fails to recognize) that networks large and small, from VPNs to the world wide web, are populated by two kinds of entities: people and machines. “People” we can relate to, but “machines” are more difficult to understand. They include the obvious bits of physical and virtual hardware, but also include services and serverless workloads, algorithms and code running in containers. While people use usernames and passwords to authenticate themselves on these networks, machines use machine identities, such as TLS and other kinds of X.509-based certificates, Secure Shell (SSH) keys, code signing keys and API keys.

The two instances reported in Threatpost and in ZDNet, when viewed in the context of a world where machine identities are increasingly and unambiguously critical, together lay out the need for “crypto-agility.” Crypto-agility is, simply put, the ability for an organization to adapt fast. That adaptation may be needed to respond to a cryptographic threat, as in the case of SHA-1 or vulnerabilities like Heartbleed, or it may be required because of issues with the cryptographic supply chain, as in the case of Let’s Encrypt, which provides cryptographic services in the form of TLS certificates.

An example of two kinds of machine identities at work, with TLS certificates and SSH keys represented.

Importantly, these adaptations must be made rapidly and without impacting the applications and services they support. As TechTarget reports, “The idea behind crypto-agility is to adapt to an alternative cryptographic standard quickly without making any major changes to infrastructure.” In a world of digital transformation, the application is king. It can’t be pulled offline and rebuilt because of an issue arising from underlying cryptography or a certificate authority.

Crypto-Agility Requires Visibility, Intelligence and Automation

Achieving crypto-agility is more difficult than it first appears, especially for large organizations. Most don’t know how many keys or certificates they have, where they are in their infrastructure or who on their staff has the access and knowledge to correct them.

Certificate-related website or service outages graphically illustrate the fact that all TLS certificates have an expiration date. When a certificate expires, the site or service is no longer trusted by modern software and can be rejected or fail to respond altogether. Several recent incidents demonstrate this:

- In February 2020, the popular Microsoft Teams remote productivity suite stranded 20 million users for more than two hours due to an expired certificate.

- In May 2019, business networking site LinkedIn suffered a certificate-related outage that impacted 610 million users.

- In December 2018, 30 million customers of O2’s cellular service in the U.K. lost voice and digital service when a certificate expired in its underlying Ericsson switching equipment. Another 40 million users of SoftBank in Japan lost service for the same reason.

While the maximum duration of a certificate has typically been three years, browser developers like Apple have increasingly pushed for shorter durations to add more frequent review of the security configurations and the business ownership of machine-to-machine connections. As Computer Business Review recently put it: “Apple is planning to more than halve how long its Safari browser will trust TLS certificates, cutting the time to just 13 months, putting fresh pressure on organizations to get their certificate management practices in shape.” The article goes on to say “As of September 1, 2020, Apple is setting a hard trust limit of 398 days. (The current acceptable duration is 825 days). Certificates issued on or after that date with term beyond 398 days will be distrusted in Apple products.”

While this is good security thinking, Apple’s decision will most likely exacerbate the “certificate expiration” problem experienced by Microsoft, O2, LinkedIn, Equifax, and many others. Large organizations simply don’t have the visibility, the intelligence (in the form of specific insight into the “who, what, why and where” of TLS certificates and keys) or the automation to manage the tens of thousands of certificates their sites, services and the underlying machines rely on.
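The 398-day rule quoted above is simple enough to check mechanically. The following Python sketch shows one way to flag certificates that would fall foul of it. It is an illustration under stated assumptions: the function name is hypothetical, and it treats the certificate’s “not before” date as the issuance date, which may differ from how a browser evaluates the rule.

```python
from datetime import datetime, timedelta

CUTOFF = datetime(2020, 9, 1)  # enforcement date quoted in the article
MAX_DAYS = 398                 # trust limit quoted in the article


def exceeds_trust_limit(not_before, not_after):
    """True if a certificate issued on or after CUTOFF runs longer than MAX_DAYS.

    Assumption: not_before stands in for the issuance date.
    """
    if not_before < CUTOFF:
        return False  # issued before the cutoff, so the new limit does not apply
    return (not_after - not_before) > timedelta(days=MAX_DAYS)
```

Run across a full inventory, a check like this shows how many certificates need a shorter renewal cycle before the deadline arrives.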

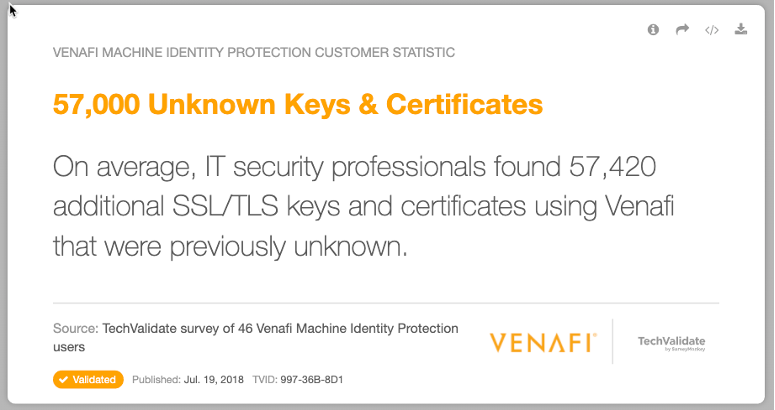

TLS certificates are used liberally throughout the network infrastructure that powers digital transformation, from application servers to load balancers to printers and everywhere in between. So liberally, in fact, that their location and configuration are often unknown. TechValidate, a service that captures feedback and comments from information technology users, reports that “On average, IT security professionals found 57,420 additional SSL/TLS keys and certificates using Venafi [a machine identity protection platform] that were previously unknown.”

But certificate-related outages due to expired certificates are just one symptom of a much larger problem that stems from the sprawl of machine identities, their ubiquity in all aspects of life and their inherent complexity. Visibility, intelligence, and automation address the problem in these ways:

Visibility

The first step in any security framework is to inventory the assets you have. With machine identities, information security teams need to ask: Where are the certificates our web servers, applications and services depend on? Where are the SSH keys our system administrators have created to authenticate from one machine to another? Are private code signing keys being left on developers’ servers, only to be stolen and used to sign malware, as in the ShadowHammer attack on ASUS in spring 2019 that infected a million computers belonging to the manufacturer’s customers? Where are the certificates and keys for mobile devices and IoT devices? Can we account for every machine-to-machine authentication event in the enterprise and be assured that none run invisibly?

“Digital Visibility” is not a trivial thing for small companies—let alone Global 5000 enterprises. These organizations need to find machine and device identities across their far-flung networks, whether on traditional web servers, security devices, load balancers or cloud-based databases. Visibility is needed across cloud containers and virtual systems, the applications and services they’re running and the connections they’re making to other systems, whether those systems are in public or private clouds or in traditional on-premises networks.

Paul Haywood, former head of information security at Barclays, offers this cautionary tale about SSH keys that authenticate and encrypt connections for back-end systems and their administrators. SSH keys provide password-less, non-expiring replacements for system access, and can be copied, shared and transported with ease. “We suspected at the time Barclays had close to 500,000 SSH keys, both public and private, some with root access, throughout our various networks, so we launched an inventory and intelligence-gathering effort across all of them. A few months later we had accounted for nearly 4 million keys. After a year of dedicated effort from the Barclay’s IT team, the number came down to a manageable and sustainable 600,000 keys. We understood the risk but had no idea of its enormity.”
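An SSH key inventory like the one described above usually starts by identifying each key by its fingerprint, so the same key found on many hosts is counted once. The Python sketch below illustrates the idea. The helper names and the `(host, public_key_line)` input format are assumptions for illustration, and the sketch expects plain `type base64-blob comment` lines without the option prefixes that `authorized_keys` files may carry.

```python
import base64
import hashlib
from collections import defaultdict


def ssh_fingerprint(public_key_line):
    """SHA-256 fingerprint of one 'type base64-blob [comment]' public key line."""
    blob = base64.b64decode(public_key_line.split()[1])
    digest = hashlib.sha256(blob).digest()
    # OpenSSH prints the digest as unpadded base64 with a "SHA256:" prefix.
    return "SHA256:" + base64.b64encode(digest).decode("ascii").rstrip("=")


def group_by_key(entries):
    """Map each fingerprint to the sorted list of hosts where that key was found.

    entries: iterable of (host, public_key_line) pairs (hypothetical format).
    """
    seen = defaultdict(set)
    for host, line in entries:
        seen[ssh_fingerprint(line)].add(host)
    return {fp: sorted(hosts) for fp, hosts in seen.items()}
```

A fingerprint that maps to a long list of hosts is a natural first candidate for review, since one copied key then opens many doors.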

Intelligence

When an organization has accounted for all the machine identities and their machine-to-machine authentication events, it needs intelligence about each connection. Is it relying on a potentially forged or fraudulent certificate? Is it about to expire, leaving gaps in your visibility and making it harder to discern risks or weaknesses in your security posture? Were TLS certificates issued by the appropriate CAs? Recall the Let’s Encrypt example used earlier: If a CA is not allowed under your information security policy, can you find the certificates it has issued and replace them?

Even worse, is a certificate responsible for not just delivering applications and content to end users, but for enabling your network security tools? The Equifax hack of 2017 can be traced to an exploited vulnerability in Apache Struts. But why did it go undetected for nearly three months? Equifax had traffic inspection tools that should have revealed the anomalous traffic, but that equipment relied on a TLS certificate to open and inspect encrypted traffic. A report by the U.S. House of Representatives Committee on Oversight and Government Reform showed that the equipment’s TLS certificate had expired 10 months before the breach. As a result, it was blind to network anomalies, and this blindness allowed the attackers to remain in the network, pivoting to over 50 different databases, for over 70 days.

Knowing which of your network systems and tools—whether they are delivery-focused or security-focused—rely on certificates and keys is critical intelligence. This is true when an attack surface needs to be analyzed or when expenses need to be prioritized.

Automation

Renewing, revoking, or updating digital keys and certificates can be a laborious and time-consuming process. But with an automated system, expiring certificates can be detected and renewed, regardless of the CA or internal system that originated them, before they cause blind spots or operational outages. Weak or guessable SSH keys can be rotated and reinforced, without the costly delays that come from tracking down owners and system administrators.

Perhaps most importantly, the tools an enterprise’s operations and defenses rely on, like load balancers and threat protection systems, can maintain visibility into the sites and services they serve and protect. As an example: if Equifax had automated the renewal of the certificate that supported its traffic inspection system, it’s entirely possible that the 2017 breach would have been detected in minutes rather than months.
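The detection half of that automation can be very small. As a sketch, assuming an inventory of records that each carry a `not_after` expiration date (the record layout, the function name and the 30-day default window are all illustrative choices, not a description of any particular product):

```python
from datetime import datetime, timedelta


def due_for_renewal(inventory, now, window_days=30):
    """Return records expiring within window_days of `now`, soonest first.

    Records that have already expired are included, since they need
    attention most urgently. Each record is a dict with a "not_after"
    datetime (hypothetical layout).
    """
    deadline = now + timedelta(days=window_days)
    due = [rec for rec in inventory if rec["not_after"] <= deadline]
    return sorted(due, key=lambda rec: rec["not_after"])
```

The harder part in practice is what comes next: requesting the replacement from the right CA and installing it on every system that uses the certificate, which is where the inventory and ownership data matter.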

The Pillars of Crypto-Agility

To achieve crypto-agility, enterprises must be able to respond promptly to mass certificate and key replacement events. At the same time, they must be able to demonstrate policy compliance for all certificates and identify any anomalies. This requires comprehensive visibility and detailed intelligence, as well as automation to enable replacement and renewal at machine speed and scale.

- Know where every certificate in your organization is being used. If you do not have a complete and accurate inventory of all your keys and certificates, you will not have the information you need to evaluate your potential cryptographic risks. Without this information, it will be difficult and time-consuming to find any certificates that may have been impacted by a cryptographic bug or vulnerability. For complete visibility into all your certificates, build or buy a solution that automates the discovery of every machine identity used across your entire network and that consolidates this information into a single inventory.

- Actively monitor all certificates to uncover day-to-day changes. It’s one thing to build an inventory, but it’s a different thing altogether to keep that inventory up to date so that it reflects your constantly changing network. This is especially challenging as you expand your use of cloud, IoT and DevOps technologies—all of which increase the difficulty of understanding where each certificate is installed and whether it has changed. This is critical because certain changes like cryptographic compromises can invalidate multiple certificates, which then require immediate attention. Build or buy a system that continuously monitors all your certificates, so you have the information needed to act quickly when required.

- Ensure all keys and certificates comply with corporate security policies. Machine identities protect important data and critical infrastructure, which are often governed by industry regulation and internal security policies. These policies govern functions such as installation and usage, and they help prevent misconfigured servers, applications or key stores that may leave otherwise secure keys and certificates open to compromise. All these attributes can be controlled through the enforcement of security policies. Think back to the Let’s Encrypt bug cited earlier. Your policy for TLS certificate usage may very well require the use of the CAA function. However, applying consistent policies across certificates from multiple CAs, each of which may handle security requirements differently, often proves to be challenging. Because many organizations struggle to tie their machine identity security to an overarching security policy, NIST has released guidance on how to create proper policies both for TLS certificates (see NIST SP 1800-16) and for SSH keys (see NISTIR 7966).

- Find and replace vulnerable algorithms and key lengths. Public key cryptography relies on key length and cryptographic algorithms for security, but these attributes need to be periodically updated. Cybercriminals devote considerable resources to defeating public key cryptography, as evidenced by the SHA-1 compromise cited earlier. As computing speeds increase and crypto-breaking techniques improve, key lengths and cryptographic algorithms become more vulnerable, requiring you to migrate to more secure ones. Weak ciphers also undermine the strength of encryption and can facilitate compromises by cybercriminals.

- Use the latest, most secure protocol versions. New vulnerabilities are regularly found in encryption protocols. For example, SSL/TLS has experienced several vulnerabilities over recent years, including Heartbleed, POODLE and DROWN. To reduce your exposure to protocol vulnerabilities, develop or buy a solution that identifies the encryption protocol in use and automates the replacement of all weak or vulnerable machine identities with ones using the latest protocol.
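Several of the pillars above come down to comparing each inventory record against a written policy. The Python sketch below shows what such a check might look like. The record fields and the thresholds (a 2048-bit RSA minimum, TLS 1.2 and 1.3 as the allowed protocols) are assumptions chosen for illustration; your own policy should supply the real values.

```python
WEAK_SIGNATURE_HASHES = {"md5", "sha1"}      # algorithms the article names as broken
MIN_RSA_BITS = 2048                          # assumed policy value
ALLOWED_PROTOCOLS = {"TLSv1.2", "TLSv1.3"}   # assumed policy value


def policy_violations(record):
    """Return a list of human-readable policy problems for one inventory record.

    record is a dict with "signature_hash", "key_type", "key_bits" and
    "protocol" keys (a hypothetical layout).
    """
    problems = []
    if record["signature_hash"].lower() in WEAK_SIGNATURE_HASHES:
        problems.append("weak signature hash: " + record["signature_hash"])
    if record["key_type"] == "RSA" and record["key_bits"] < MIN_RSA_BITS:
        problems.append("RSA key shorter than %d bits" % MIN_RSA_BITS)
    if record["protocol"] not in ALLOWED_PROTOCOLS:
        problems.append("protocol not allowed: " + record["protocol"])
    return problems
```

Keeping the policy values in one place, as the constants do here, means that when an algorithm is broken the change is a one-line edit followed by a rescan of the inventory.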

If the current rate of growth in certificates weren’t enough reason to master crypto-agility, another is on the horizon: quantum computing. Because modern cryptography relies on mathematical algorithms that can take years or even centuries for traditional computers to break, we have enjoyed a relatively secure few decades. Hash algorithms like MD5 and SHA-1 have been replaced by stronger algorithms with longer digests. But quantum computers will provide an exponential increase in on-demand computing power, putting all known security algorithms at risk. As Rob Stubbs reported in the Cryptomathic blog, “This will break nearly every practical application of cryptography in use today, making e-commerce and many other digital applications that we rely on in our daily lives totally insecure.”

Adapting fast to new encryption methods and protocols may become a necessary survival skill.

The Pillars of CA-Agility

But what if no “cryptopocalypse” occurs? No new bugs or vulnerabilities arise, and there are no broken hashing algorithms, like MD5 or SHA-1. There are no compromised cryptographic libraries like the one Microsoft announced on January 20 of this year, which allows for remote code execution via spoofed TLS certificates. Even in this ideal state, organizations need to protect against CAs that have poor security management practices, go out of business or whose systems are compromised.

Alongside the requirements outlined above, organizations also need to:

- Quickly locate and replace a single certificate or group of certificates. If you’re relying on CAs to manage your certificates, you won’t have the intelligence you need to rapidly respond to a CA breach or error. CAs can provide basic information about certificates, but to act quickly, you need to know every location where a certificate is being used and who manages that device or application. So, for CA-agility, you can’t rely solely on CA certificate management. If you were unlucky enough to be included in Let’s Encrypt’s 3-million certificate bug mentioned in the opening of this paper, would you be able to find all the affected certificates?

- Discover certificates from unauthorized CAs. Some certificate users in your organization may install certificates from free or low-cost providers (as in the Let’s Encrypt example) to support new business initiatives or rapid deployment requirements. To find rogue certificates, InfoSec teams need a solution that provides a comprehensive view of all certificates in use, including those in the cloud or used by third-party partners, regardless of the issuing CA.

- Seamlessly transfer policies and integrations to a new CA. Each CA management tool may apply policies and integrations differently, complicating a transition between CAs. Organizations need a key and certificate management solution that doesn’t require them to rewrite any automated processes or integrations with security infrastructure and allows them to reuse security policies and workflows when switching CAs.

- Respond rapidly to browser distrust of a CA. If your organization relies on CAs to manage all your certificates, you will limit your options if one of your CAs is distrusted. This is not an infrequent event: Google announced in 2017 that its browser would no longer trust Symantec-issued certificates, a stance followed by other browser makers like Microsoft. [xix] Browser makers are taking a much more active role in policing the level of trust earned by CAs. When CAs are distrusted, many customers must scramble to find another CA and to replace all their keys and certificates from the distrusted CAs.
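The requirements above share one core operation: given a list of affected serial numbers (as Let’s Encrypt published) or a distrusted issuer, find every place those certificates are installed and who owns them. A minimal Python sketch of that lookup follows. It assumes you already maintain an inventory of records with `serial`, `issuer`, `location` and `owner` fields; those names and the function itself are hypothetical.

```python
def find_impacted(inventory, revoked_serials=(), distrusted_issuers=()):
    """Return (serial, location, owner) for every certificate that must be replaced.

    A record is impacted if its serial appears in revoked_serials
    (compared case-insensitively) or its issuer is in distrusted_issuers.
    """
    serials = {s.lower() for s in revoked_serials}
    issuers = set(distrusted_issuers)
    hits = []
    for rec in inventory:
        if rec["serial"].lower() in serials or rec["issuer"] in issuers:
            hits.append((rec["serial"], rec["location"], rec["owner"]))
    return hits
```

The lookup itself is trivial. What makes it useful is that the inventory records where each certificate lives and who is responsible for it, which is exactly the information a CA cannot supply.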

The Challenge of Scale

If all of this makes sense, superimposing one more lens on the situation will add clarity and urgency: the lens of “scale.”

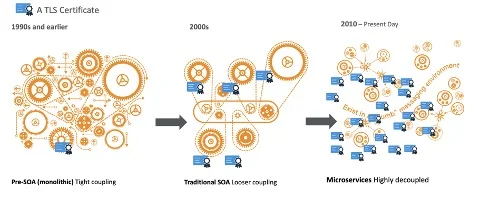

Digital transformation has radically changed the way we create, host and use online services and applications. Monolithic application architectures of the past might have used one TLS certificate to authenticate and encrypt connections between users and their application. But today, containers and mesh architectures have led to an explosion in the number of TLS certificates required to deliver a service, and a single application might require dozens or even hundreds of TLS certificates.

In the last thirty years, the number of TLS certificates required to enable and secure online applications and services has exploded.

Christian Posta, field CTO at solo.io, puts it this way in his blog on modern architectures: “As we break applications into smaller services, we increase our surface area for attacks. … Do you secure your internal applications with SSL/TLS? All the communication that we used to do inside a single monolith via local calls is now exposed over the network. The first thing we need to do is encrypt all of our internal traffic.”

DevOps practices, cloud-native development, lift-and-shift cloud patterns, IoT, virtualization and containerization are all driving the increased use of TLS certificates to provide the end-to-end encryption Posta calls for. Who is managing these certificates? How are they configured? Who is revoking or renewing them as needed?

Industry research firm Gartner, in the paper “Technology Insight for X.509 Certificate Management,” states that “Organizations with roughly 100 or more X.509 certificates in use that are using manual processes typically need one full-time equivalent (FTE) to discover and manage certificates within their organizations.”

Contrast this with the number of active domains in the world: Verisign reports over 326 million domain names registered across top-level domains (TLDs) like .com and .net, and the majority of these domains have many subdomains or addressable applications and services. Modern, large organizations that are engaged in digital transformation efforts are likely counting their TLS certificates not in the hundreds, but in the tens of thousands. And the number of TLS certificates that need to be managed is increasing by the hour.

But What Does COVID-19 Have to Do with It?

The title of this blog, “Cheetahs, COVID-19 and the Demand for Crypto-Agility,” points directly at one of the issues crypto-agility is designed to solve: the ability to quickly adapt to sudden changes in circumstance.

This article is written in the shadow of 2020’s novel coronavirus and the illness it creates, COVID-19. The United States has become the epicenter of a pandemic, with more than twice as many confirmed cases as China and with a particularly strong grip on New York City. Why has this virus been so easily transmitted?

Without going into detail, the virus evolved to fit the way humans gather, communicate, breathe, and even talk. Spike proteins on the virus surface made it easy for the virus to embed itself in soft, wet, warm tissues. The spike proteins also contain a site that recognizes and becomes activated by furin, a host-cell enzyme that exists in various human organs such as the lungs. Apart from its biology, the virus is extraordinarily lightweight and can linger in the air for 30 minutes and travel up to 4.5 meters. In dense cities like New York, Seattle and New Orleans, where people gather in coffee shops and markets and rely heavily on mass transit, the 4.5-meter distance and 30-minute survival time are ideal tools for transmission. Biologically, the novel coronavirus is a great fit for human anatomy and behavior.

And how have we adapted to change our behavior in the face of the pandemic? What was normal behavior four weeks ago—such as plane travel or large, in-person gatherings—has been replaced by remote meetings and video conferencing. In times like these, the businesses and organizations that are nimble thrive. Those that aren’t, don’t.

As far as COVID-19 itself goes, there’s good news: Humans, unlike cheetahs, have a fair amount of genetic diversity. We are in fact three times more diverse in our genetic makeup than cheetahs. This means the likelihood of there being some random combination of genes, ribosomes or proteins in an individual’s genotype that makes them slightly less susceptible to coronavirus and COVID-19 than another is exponentially higher than if we were cheetahs. In the next year or so, one or more of those variability factors will be isolated to deliver a vaccine. At the end of the day, this variability makes us stronger (at least in this one narrow sense) than cheetahs.

Maintaining the same “strength through diversity” in our networks is equally important, though for wildly different reasons. We may still need on-premises data centers for highly privileged information—but we also need cloud platforms to support fast-charging digital transformation initiatives, such as spinning up thousands of TLS-protected instances in seconds. We may need special TLS certificates for endpoints or for thousands of suddenly remote users. In both cases, crypto-agility allows for the same policy—the same set of protective services, like well-configured TLS certificates and reliably produced and tracked SSH keys—to be assessed and enforced across increasingly diverse networks.

With diversity also comes flexibility: Good business sense tells us not to let popular cloud platforms like Amazon Web Services (AWS), Google Cloud Platform and Microsoft Azure lock us in perpetually, but to keep them in a position where they compete for our business. Crypto-agility lets us shift between a variety of platforms and a variety of public and private CAs for any business, security or productivity reason that arises.

And if there is an equivalent of a coronavirus among machines, whether another cryptographic library bug or a compromised certificate authority, we need to be able to quickly pivot workloads, services, and configurations back to a known-good, safe state. Crypto-agility provides that roadmap.
