Wow! It's been quite a while since I've had time to do my regular analysis of security in the Top 1 Million sites, but it's happening again! As it's been over 18 months since my last report, quite a few things have changed, so we have a lot to look at and a lot to report on.

The Crawl

As with all of my previous reports, the data for this report was taken from my Crawler.Ninja project. You can head over to the site and get the raw data from every single crawl that I've done daily for almost 7 years!!

One of the problems with running these crawls, storing that much data and writing these reports is that all of those activities require resources in either time, money, or both. I've struggled to set aside time for projects that don't really generate financial support for me and as a result, the security analysis of the Top 1 Million sites has taken a back seat, until now.

Venafi

The good folks over at Venafi realized I hadn't done one of my regular crawler reports for quite some time and they reached out to ask if I planned to continue them. After explaining the reason behind the reports taking a back seat, Venafi offered to sponsor two reports!

The first of these reports is being published now in Nov 2021 and the second report is planned for May 2022 so we can see what changes happen in the ecosystem over the next 6 months. I'm really grateful to Venafi for stepping up to sponsor these activities as it supports the project and gives it some funding to continue and shows their support for the community too. This report, the data used in this report and everything else will still remain free to access and read for everyone, just like it was before. Drop by the Venafi website or give them a shout on social media to say thanks!

November 2021

It's fair to say that a lot of things have changed over the last ~18 months and I've also updated my crawler over that time to track new metrics without having the time to talk about them. That means there should be a lot of interesting data to go over in this report, so let's get started.

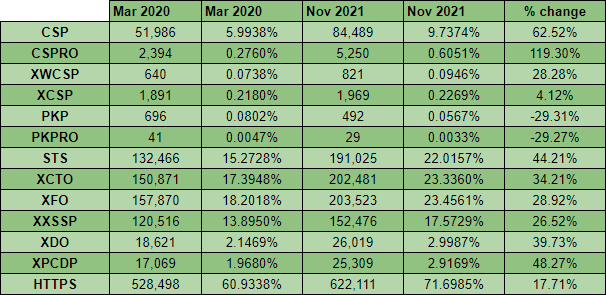

My traditional summary table shows some fantastic progress across all of the main metrics, with HTTPS continuing to see strong growth along with all of the other security headers. That said, there are quite a few details worth diving into and new metrics to explore.

HTTPS

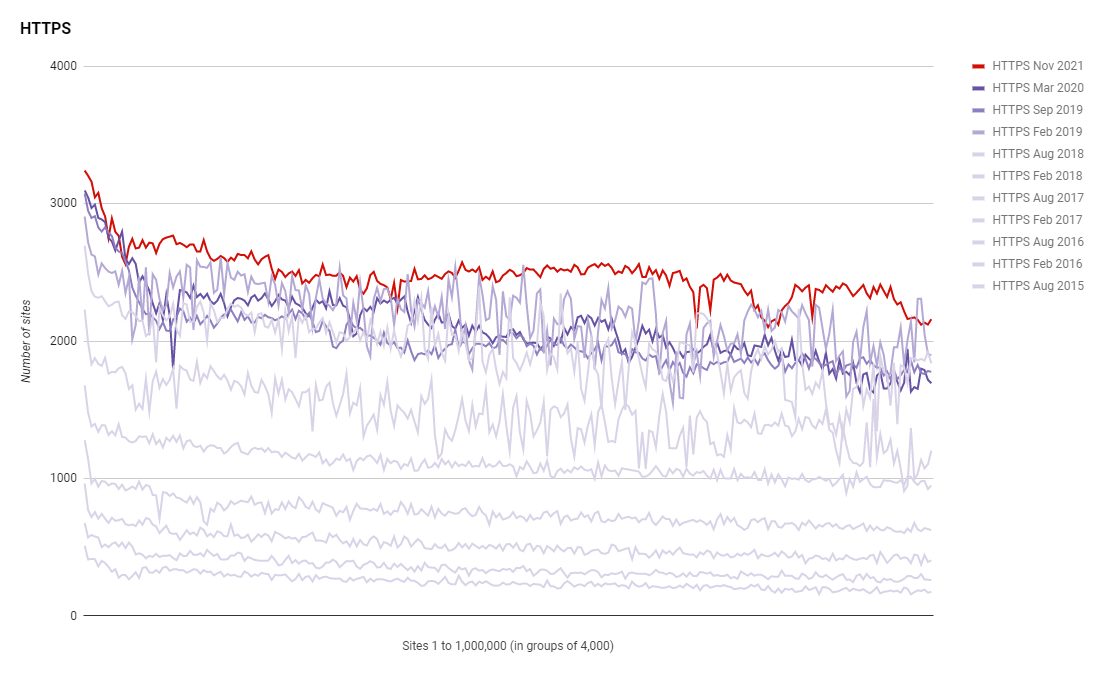

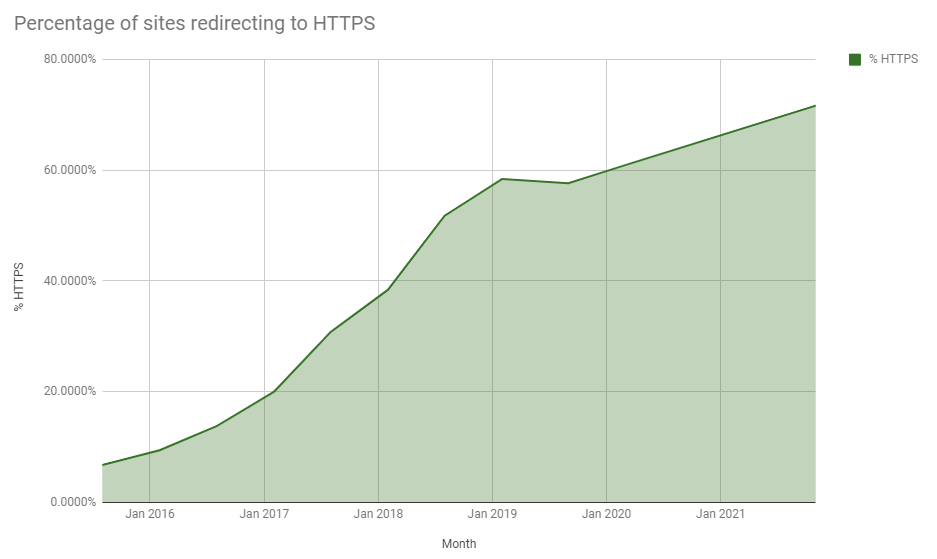

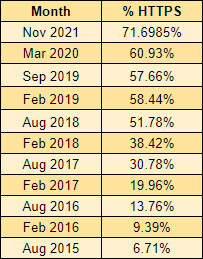

It will no doubt be expected now that we continue to see a rise in adoption of HTTPS across the Top 1 Million sites and that's exactly what we can see in this latest report.

Over the years we've seen an incredible growth in the use of HTTPS and while the rate of growth is slowing down, we're still seeing more use of HTTPS than ever before. There were a total of 622,111 sites actively redirecting the crawler to use HTTPS when the initial request was sent over HTTP, an increase from 528,498 in the last report! Looking at that as a percentage of the sites scanned, we're continuing to improve.

That's almost 72% of the sites in the Top 1 Million now actively redirecting traffic to use HTTPS!
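To make "actively redirecting to HTTPS" concrete, here is a minimal sketch of how such a check could look. It is not the crawler's actual code; the function name `redirects_to_https` and the set of status codes treated as redirects are assumptions made for illustration.

```python
from urllib.parse import urljoin, urlsplit

# Status codes treated as redirects in this sketch (an assumption, not the crawler's list).
REDIRECT_STATUSES = {301, 302, 303, 307, 308}


def redirects_to_https(request_url, status, location):
    """Return True if a response to request_url redirects to an https:// URL."""
    if status not in REDIRECT_STATUSES or not location:
        return False
    # Location may be relative, so resolve it against the URL that was requested.
    target = urljoin(request_url, location)
    return urlsplit(target).scheme == "https"


# A plain HTTP request answered with a 301 to the HTTPS origin counts as a redirect.
print(redirects_to_https("http://example.com/", 301, "https://example.com/"))
# A relative redirect stays on http:// and does not count.
print(redirects_to_https("http://example.com/", 302, "/login"))
```

A real crawl would also need to follow redirect chains, since a site can bounce through several hops before landing on HTTPS.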

HTTP Strict Transport Security

Of course, when talking about HTTPS we should also talk about HSTS and we've taken some big strides here too.

The use of HSTS has increased across the board and the more popular sites continue to be the most likely to deploy security features like HSTS. We're now up to 191,025 sites using HSTS from only 132,466 in Mar 2020, a 44% increase!
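The growth figures quoted in this report are simple relative increases. As a quick sanity check, the sketch below recomputes them from the raw counts given above; `percent_increase` is just an illustrative helper name.

```python
def percent_increase(old, new):
    """Relative growth from old to new, expressed as a percentage."""
    return (new - old) / old * 100


# HSTS: 132,466 sites in Mar 2020 to 191,025 sites now.
hsts_growth = percent_increase(132_466, 191_025)
# HTTPS redirects: 528,498 sites in the last report to 622,111 sites now.
https_growth = percent_increase(528_498, 622_111)

print(f"HSTS growth: {hsts_growth:.1f}%")
print(f"HTTPS redirect growth: {https_growth:.1f}%")
```

The HSTS figure comes out at roughly 44%, matching the number above, while HTTPS redirects grew by a little under 18% over the same period.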

Certificates

The world of certificates has changed so much over the last few years that I'm planning to do a separate analysis on this metric alone at some point in the future. There are some really interesting details and changes to pick up on, but for the purpose of this blog post I'm just going to be focusing on the numbers.

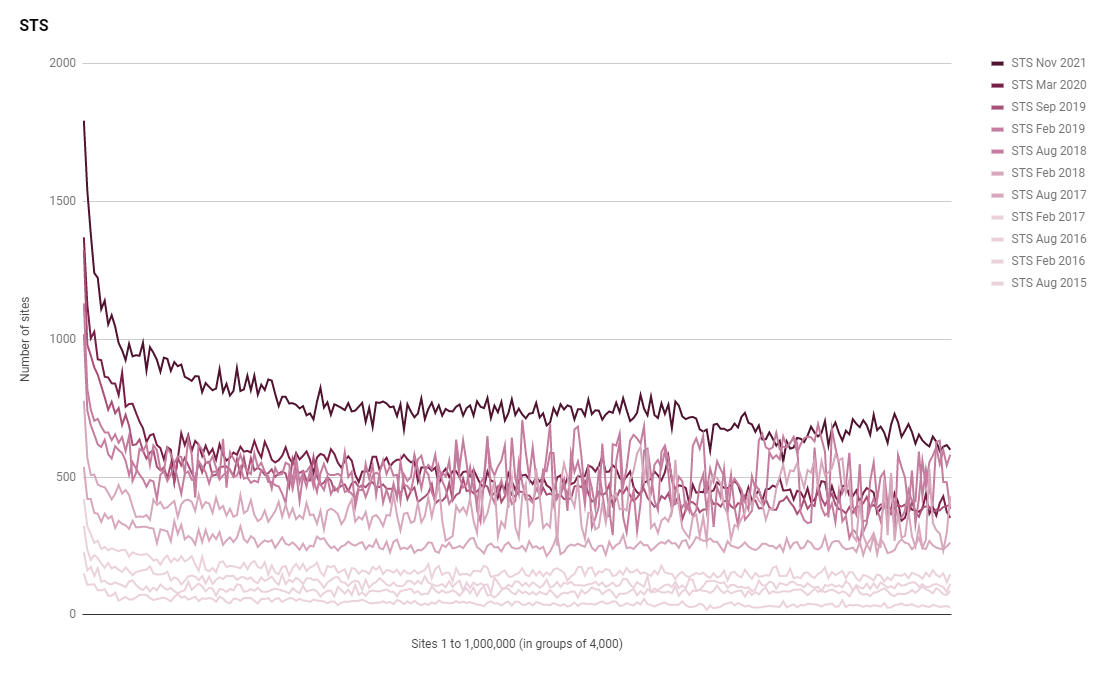

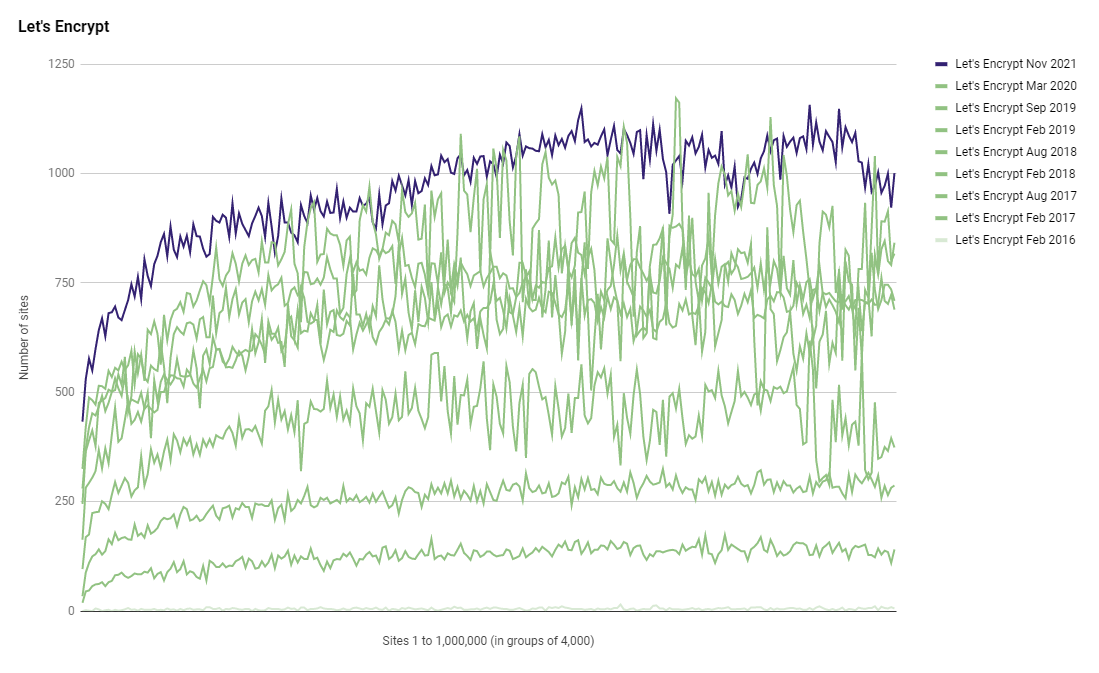

I started tracking how many sites used Let's Encrypt back in 2016, just after they started, and I've watched them go from not existing in the Top 1 Million to where they are now the largest issuer of certificates for the Top 1 Million!

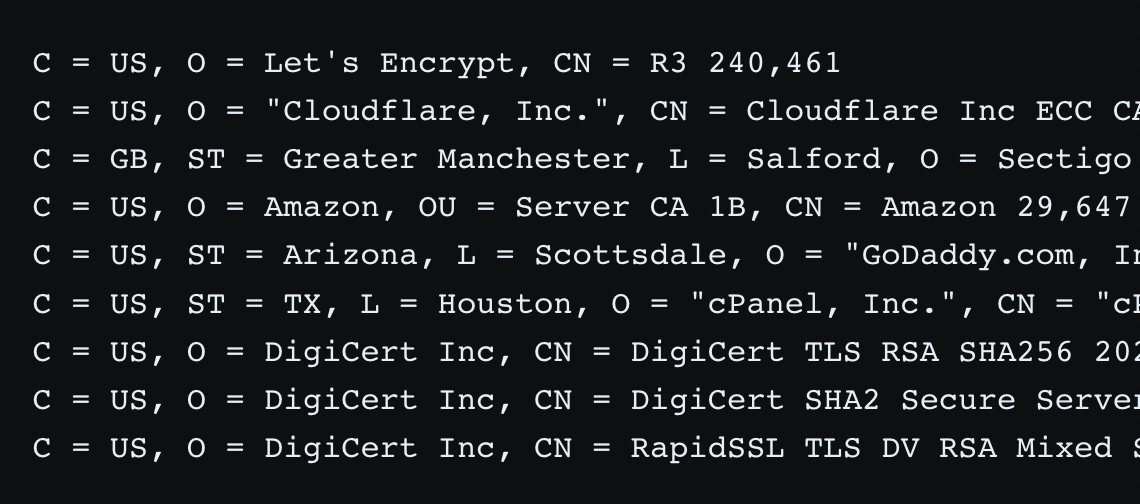

A total of 240,461 sites are now using Let's Encrypt which is almost 25% of the Top 1 Million sites. If you consider that not all 1,000,000 sites are scanned because there's always a small error rate, that represents 27.71% of sites scanned using Let's Encrypt! Looking at the Top 10 issuers of certificates, Let's Encrypt are quite a way ahead.
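The two percentages differ because the denominator changes. The sketch below works backwards from the figures above to estimate how many sites were successfully scanned; because 27.71% is itself rounded, the result is an approximation rather than the exact crawl total.

```python
lets_encrypt_sites = 240_461
share_of_scanned = 0.2771  # 27.71% of sites scanned, as quoted above

# Share of the full list of 1,000,000 sites.
share_of_top_million = lets_encrypt_sites / 1_000_000

# Estimated number of sites that were actually scanned (approximate, see note above).
estimated_scanned = lets_encrypt_sites / share_of_scanned

# The same denominator applied to the 622,111 sites redirecting to HTTPS.
https_redirect_share = 622_111 / estimated_scanned * 100

print(f"Share of Top 1 Million: {share_of_top_million:.2%}")
print(f"Estimated sites scanned: {estimated_scanned:,.0f}")
print(f"HTTPS redirect share of scanned sites: {https_redirect_share:.1f}%")
```

That estimate of around 868,000 scanned sites is also consistent with the "almost 72%" figure for HTTPS redirects earlier in the post.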

The next biggest issuer after Let's Encrypt is Cloudflare, who only account for a little over half the number of sites that Let's Encrypt does, and then there's another significant drop to the next issuer, Sectigo. Another way of looking at this is that Let's Encrypt and Cloudflare account for well over 50% of all certificates in the Top 1 Million sites between them!

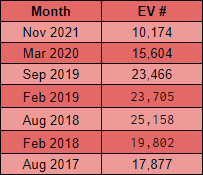

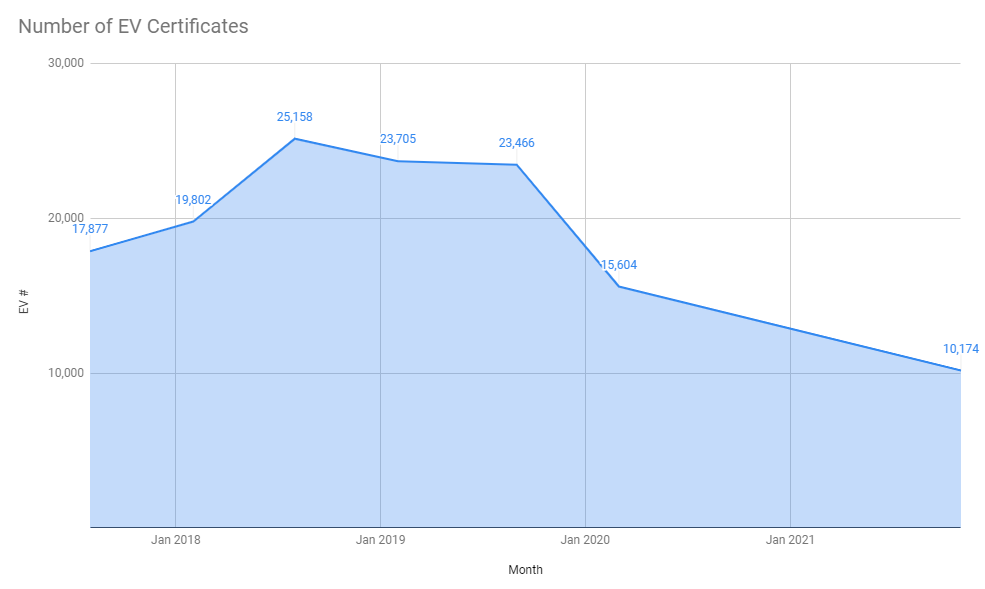

Another continuing trend into this latest report is the decline in the use of EV certificates. Despite the increase in sites using HTTPS, and thus the number of sites requiring certificates increasing, the numbers for EV certificates continue to decline.

We've now got a lower number of sites using EV certificates than at any point since I started doing these reports. After seeing a peak a couple of years ago, the downward trend in the use of EV is now quite stark!

There are only 10,174 sites in the Top 1 Million now using EV certificates and I'd bet, based on the trend in the above graph, that we will see a slightly smaller number in 6 months' time too. If you want more information on the trend around EV certificates, check out my posts Gone forEVer!, Sites that used to have EV and Are EV certificates worth the paper they're written on?.

Certificate Authority Authorization

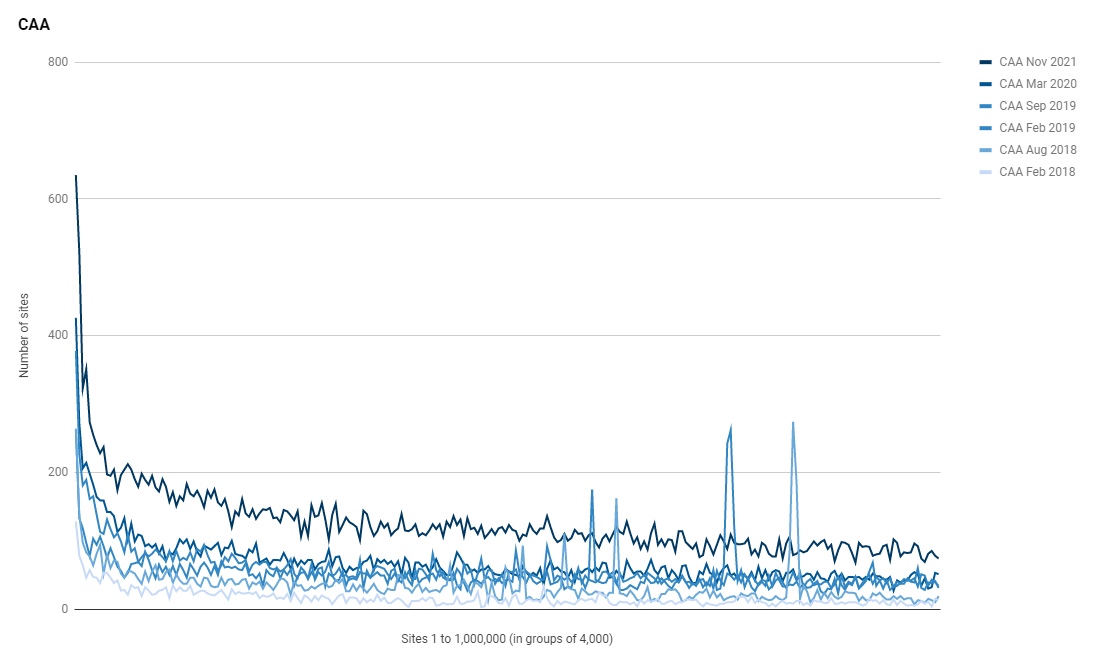

With the growth in the use of certificates as more sites switch to HTTPS, we'd hope for a growth in related technologies like CAA that allow site operators to control which CAs are allowed to issue certificates for their domain.

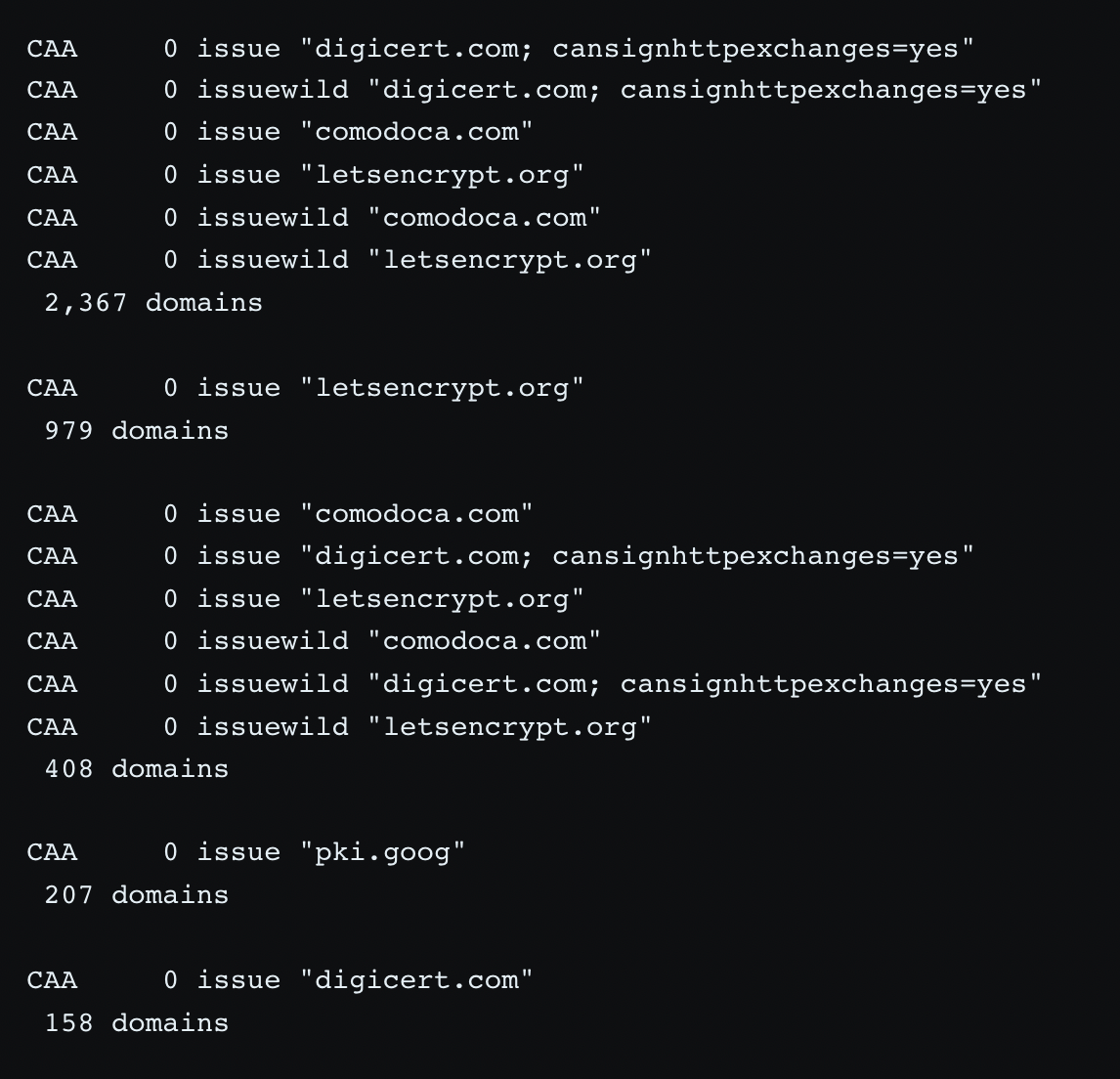

We've gone from 17,246 sites using CAA to a whopping 31,533, quite the nice increase. If you want to see which sites are using CAA you can check the list here which is updated daily and it's not really surprising when you look at the kind of sites using CAA. You can also look at the list of the most common CAA values which is also updated daily and you can see the top 5 most common sets of CAA records and how many sites use them here:

It's unsurprising that letsencrypt.org features so frequently here, being the largest CA in the Top 1 Million, and the next most common values are digicert.com and comodoca.com which are the CAs used by Cloudflare. Looking at the top policy, it looks like the default policy set by Cloudflare, and possibly the third one is too.
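For anyone who hasn't looked at CAA records before, each one is a flags value, a tag and a quoted value, and the `issue` tag names a CA that may issue for the domain. The sketch below parses records in that presentation format and collects the permitted issuers. The example records are made up for illustration and the helper names are hypothetical.

```python
from typing import Iterable, Set, Tuple


def parse_caa(record: str) -> Tuple[int, str, str]:
    """Split a CAA record such as '0 issue "letsencrypt.org"' into (flags, tag, value)."""
    flags, tag, value = record.split(maxsplit=2)
    return int(flags), tag.lower(), value.strip('"')


def allowed_issuers(records: Iterable[str]) -> Set[str]:
    """Collect the CA domains named in 'issue' records."""
    issuers = set()
    for record in records:
        _flags, tag, value = parse_caa(record)
        if tag == "issue":
            # Anything after ';' is an optional parameter list, not part of the CA domain.
            domain = value.split(";", 1)[0].strip()
            if domain:  # an empty domain (value ";") means no CA may issue
                issuers.add(domain)
    return issuers


# Illustrative records only, not taken from the crawl data.
example_records = [
    '0 issue "letsencrypt.org"',
    '0 issue "digicert.com"',
    '0 issuewild "comodoca.com"',
    '0 iodef "mailto:security@example.com"',
]
print(sorted(allowed_issuers(example_records)))
```

Note that `issuewild` is tracked separately from `issue` here, because it only governs wildcard certificates.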

TLS, old and new

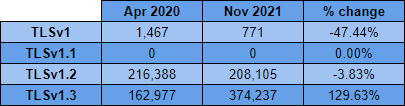

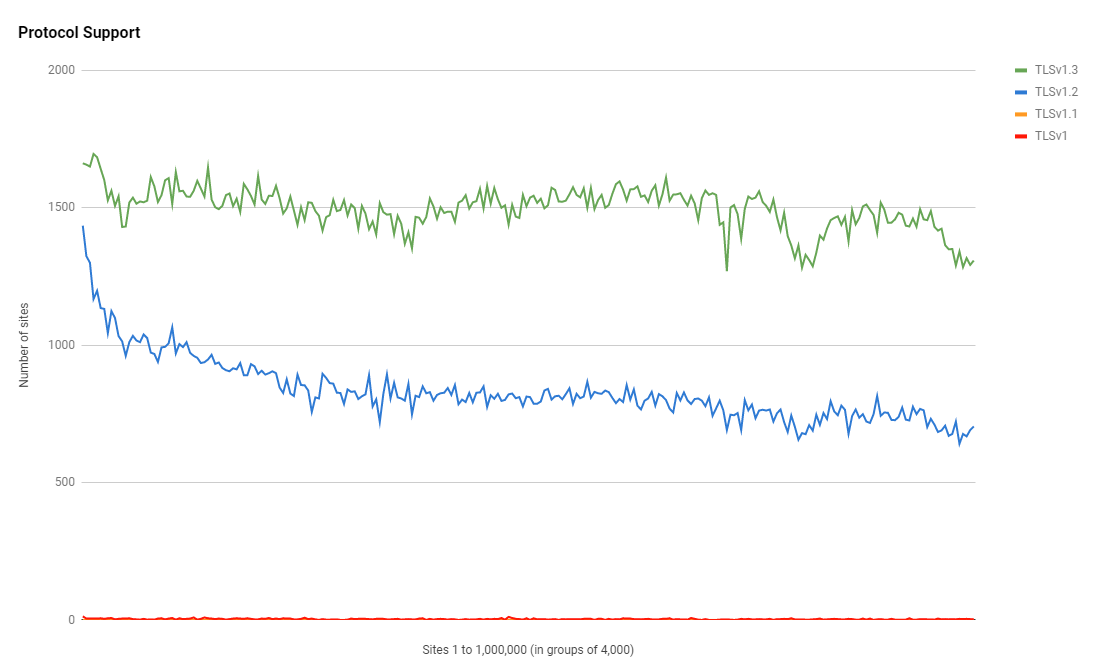

Back in April 2020, as part of my work to keep my crawler up to date with the latest standards, I added support for TLSv1.3 along with a few other improvements so we could see how the support for the latest version of TLS was coming along. I published a 'mini-report' just to detail what the metrics were for those new standards on the first scan so here is the change in the usage of different TLS versions from then until now.

This is awesome to see. When I introduced TLSv1.3 support in the crawler, it started out in second place behind TLSv1.2, but it has since become the most popular protocol version, with more than 50% of the Top 1 Million sites that use HTTPS now using the latest version of the protocol!

We can see that TLSv1.2 support is still quite popular in the largest sites but even with that preference from the largest sites out there, there is no segment of the Top 1 Million sites where any protocol outperforms TLSv1.3 as the most popular protocol.

Another great point here is how well we're doing with keeping TLSv1.1 at zero usage and also reducing the usage of TLSv1 by almost half. Both of these protocol versions are deprecated so if you are using them, it's time to update and move to a minimum of TLSv1.2 instead.
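How you set a minimum protocol version depends entirely on your server software, so treat the following as a sketch of the idea rather than a recipe. It uses Python's standard `ssl` module to build a server-side context that refuses anything older than TLSv1.2; the function name is hypothetical and a real server would also need to load its certificate and key.

```python
import ssl


def make_server_context() -> ssl.SSLContext:
    """Build a server-side TLS context that rejects TLSv1 and TLSv1.1."""
    ctx = ssl.SSLContext(ssl.PROTOCOL_TLS_SERVER)
    # Refuse handshakes below TLSv1.2; TLSv1.3 is still negotiated when both sides support it.
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    # A real deployment would call ctx.load_cert_chain(...) with its own files here.
    return ctx


context = make_server_context()
print(context.minimum_version)
```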

You can see the daily protocol support numbers for each crawl here.

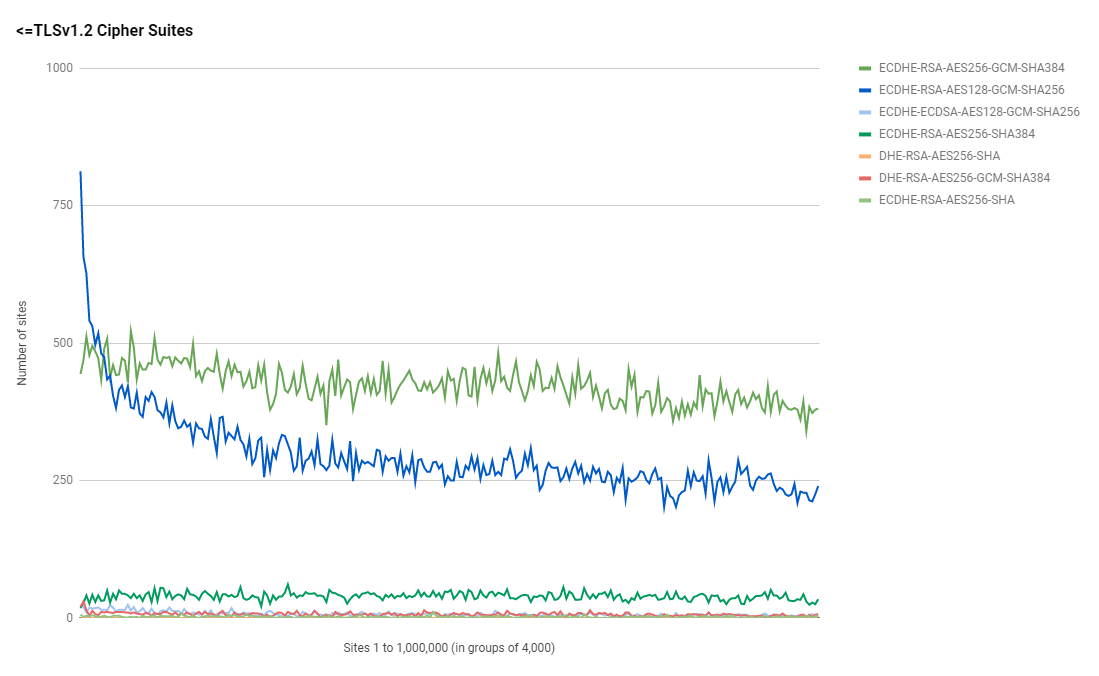

Cipher Suites

Now that we have the new support for TLSv1.3 above, I'm tempted to break out support for cipher suites into <=TLSv1.2 and then analyze TLSv1.3 cipher suites on their own. The daily crawl data for cipher suites shows all of the cipher suites bundled together and here's the entire list because it's small enough to share here.

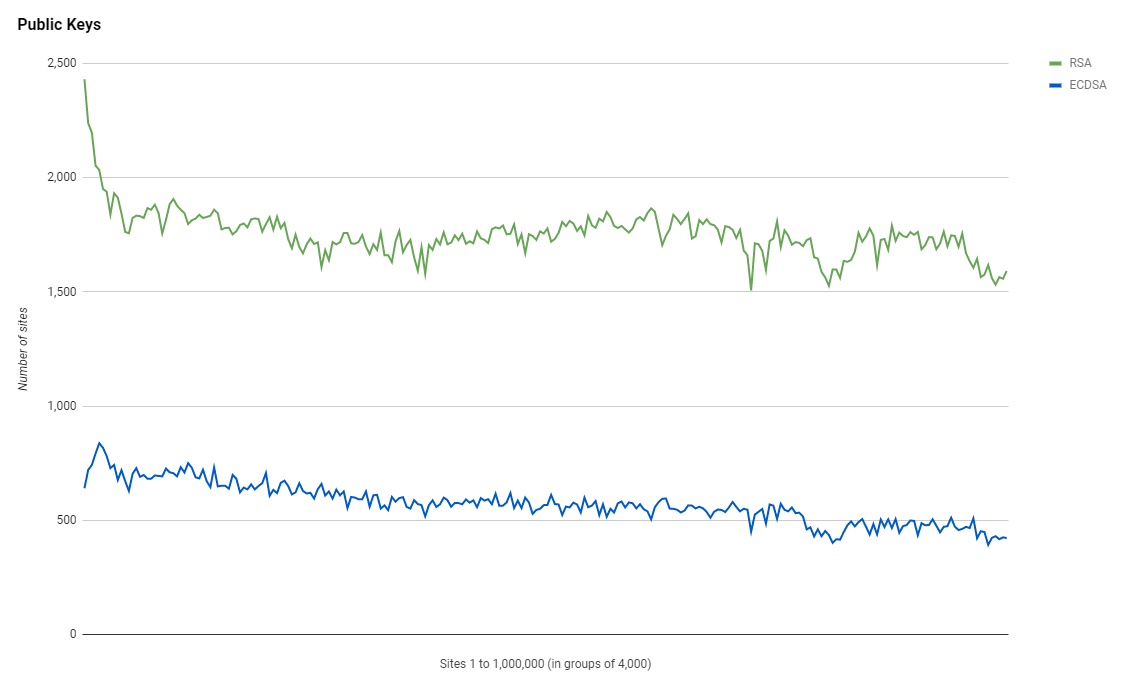

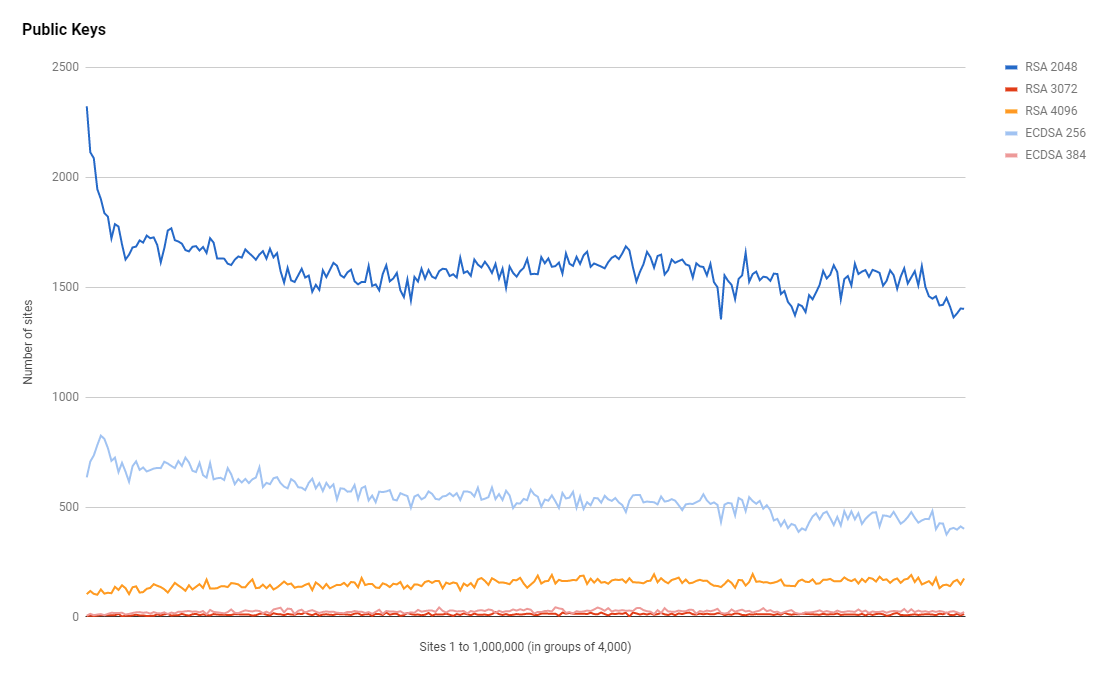

Authentication Keys

One thing that I was hoping to see in this latest report is a swing from using RSA keys for authentication to using ECDSA keys for authentication instead, but sadly that hasn't happened. It seems that RSA is still the preference and by quite some considerable margin.

This is a shame and somewhat surprising as I would have expected that the rise in adoption of TLSv1.3 would have driven the ECDSA numbers up much more. One of the main reasons to keep RSA around for authentication is legacy clients that don't support ECDSA yet, but that seems at odds with the huge rise in TLSv1.3 which isn't supported by legacy clients! We also continue to see use of RSA 3072 and RSA 4096 in numbers that are concerning.

If you're using larger RSA keys for security reasons then you should absolutely be on ECDSA already, which is a stronger key algorithm and offers better performance. My gut feeling here is that there's a lot of legacy stuff out there or site operators just haven't realized the advantages of switching over to ECDSA.

Security Headers

The reason this all started! Looking at the adoption of Security Headers over the years was the main reason this crawler project started. It has since evolved to analyze so much more than just HTTP response headers, but we can't forget the reason this all started.

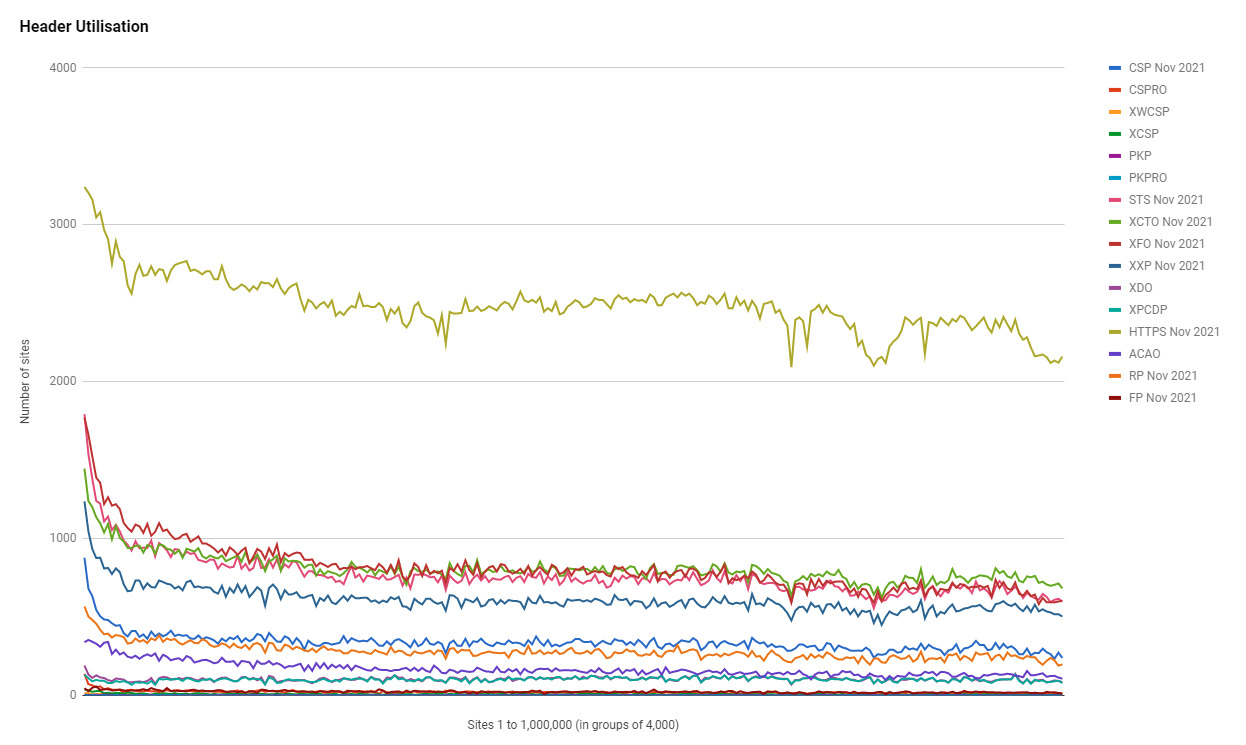

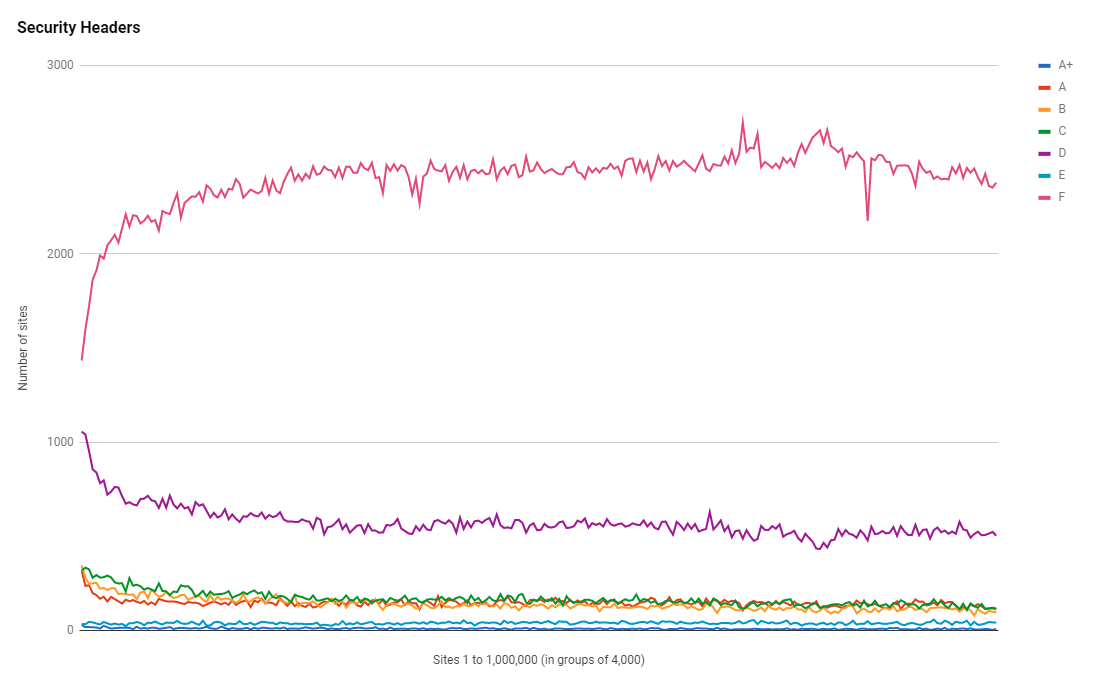

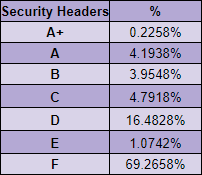

It's awesome to see that month after month, year after year, there's always a continuation in the trend of more security headers being adopted.

The larger sites have always been better at using headers, but still, the increase in usage has been present all the way across the ranking from the most popular site in the Top 1 Million to the least popular. With the increase in the use of security headers comes an increase in the grades that sites are scoring on the Security Headers tool too.

Again we see the more popular sites be more likely to score a higher grade, but across the board, overall, we're doing much better than we were on the last report.

HTTP Versions

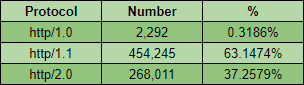

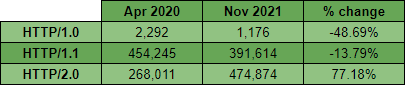

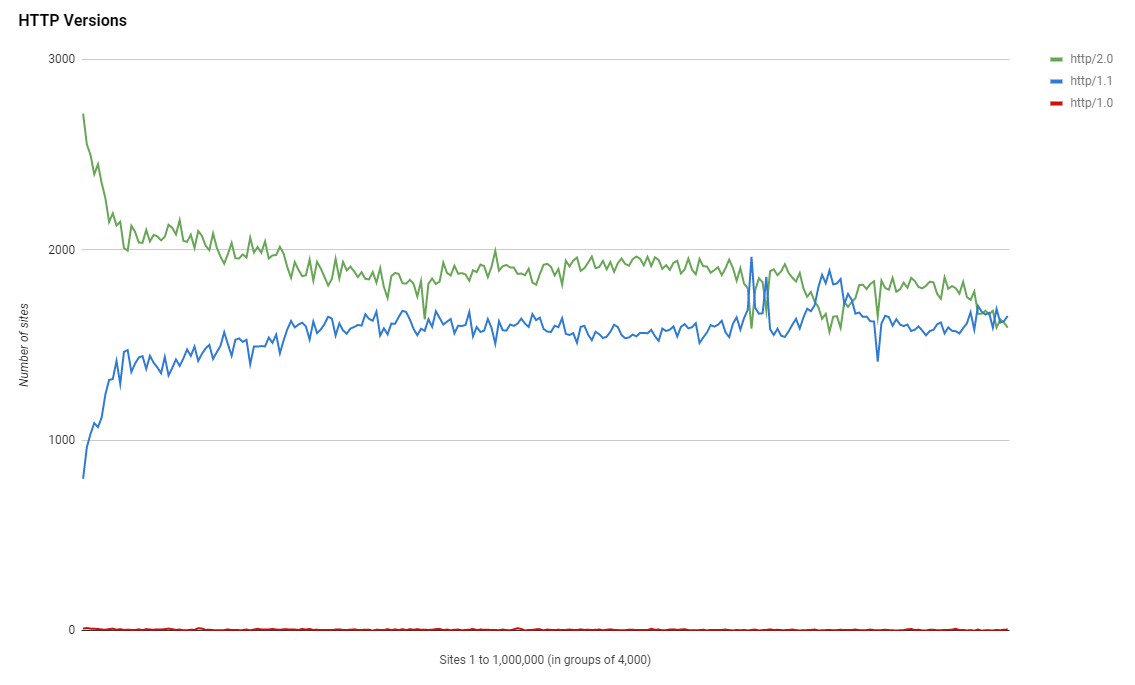

In the April 2020 update I added support for HTTP/2.0 to the crawler so it could detect sites that supported the latest version of HTTP. In that update blog post, I published the numbers for version support as they stood back then.

HTTP/1.1 was still the most dominant protocol with almost double the number of sites supporting it compared to HTTP/2.0 at the time. Fast forward to the present day though and we can see HTTP/2.0 has enjoyed a huge surge in support!

As with other metrics, we see far more adoption of HTTP/2.0 in the more popular sites higher up in the Top 1 Million ranking with the trend lines just crossing before we drop out of the Top 1 Million sites.

Security.txt files

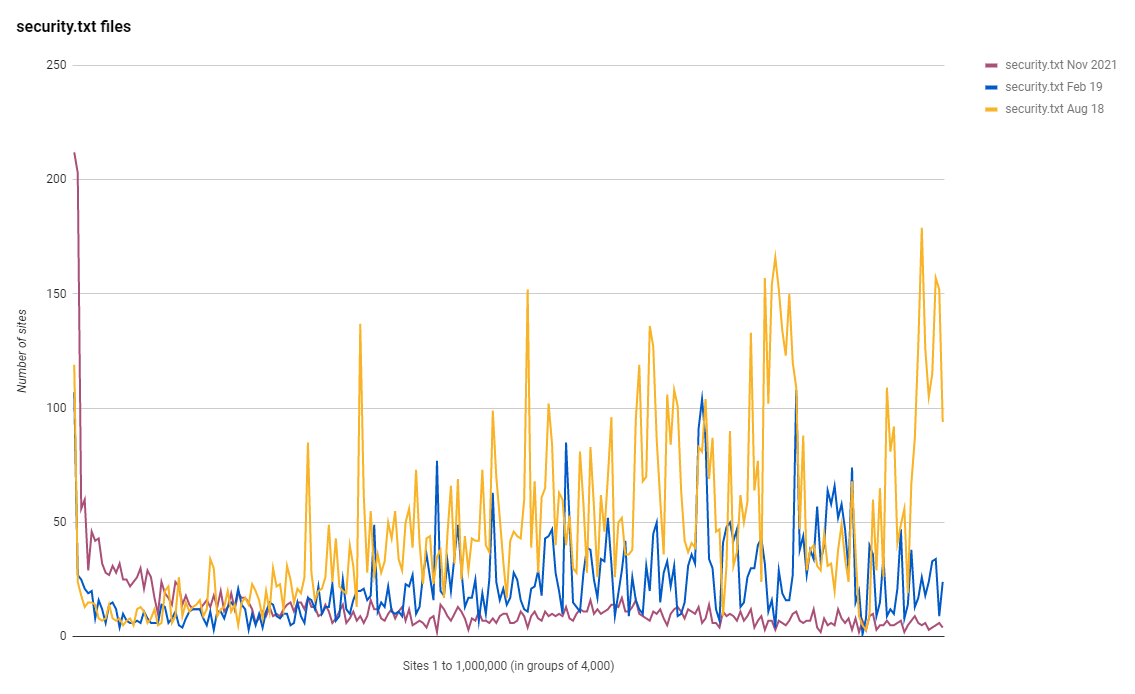

If you aren't familiar with the security.txt file then you should read my linked blog post, but know that they're a good thing to have and we want more sites publishing one. Unfortunately, we've actually seen a reduction in the number of sites publishing a security.txt file!

As you can see, the more popular and higher ranked sites in the Top 1 Million are now publishing a security.txt file in larger numbers, but, overall that hasn't helped as sites lower down the ranking are publishing them in smaller numbers. It's difficult to explain trends like this without further analysis, but that trend line does not look good for Nov 2021.
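If you've never seen one, a security.txt file is just a list of `Name: value` lines served from `/.well-known/security.txt`. The sketch below parses such a file into its fields. The sample content is invented for illustration and `parse_security_txt` is a hypothetical helper, not something the crawler exposes.

```python
def parse_security_txt(text):
    """Parse security.txt content into a dict mapping field name to a list of values."""
    fields = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and comments
        name, sep, value = line.partition(":")
        if sep:
            # Field names are case-insensitive and a field such as Contact may repeat.
            fields.setdefault(name.strip().lower(), []).append(value.strip())
    return fields


# Invented example content.
sample = """\
# Our security contact details
Contact: mailto:security@example.com
Contact: https://example.com/report
Expires: 2030-01-01T00:00:00Z
"""
parsed = parse_security_txt(sample)
print(parsed["contact"])
```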

Other observations

In an already long blog post I'm conscious of going too deep on other areas of the data, but there are some things that I'd like to quickly share and you can read into further if you'd like.

Expired Certificates

As more and more sites continue to use certificates, I've seen an increase in the number of sites having issues with expired certificates. My crawler produces a daily list of sites that were serving an expired certificate at the time of the crawl and so wouldn't have been available to visitors. The crawler also produces a list of sites with certificates that will expire within 1 day, within 3 days and within 7 days, so those are ones to watch!
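The 1, 3 and 7 day windows are easy to reproduce if you want to monitor your own certificates. This is a minimal sketch under the assumption that you already have each certificate's expiry time as a timezone-aware `datetime`; the function name and bucket labels are made up for illustration.

```python
from datetime import datetime, timedelta, timezone


def expiry_bucket(not_after, now):
    """Classify a certificate by how soon it expires relative to now."""
    remaining = not_after - now
    if remaining <= timedelta(0):
        return "expired"
    # Check the tightest window first so each certificate lands in one bucket.
    for days in (1, 3, 7):
        if remaining <= timedelta(days=days):
            return f"expires within {days} day(s)"
    return "ok"


crawl_time = datetime(2021, 11, 1, tzinfo=timezone.utc)
print(expiry_bucket(crawl_time + timedelta(hours=12), crawl_time))
print(expiry_bucket(crawl_time + timedelta(days=30), crawl_time))
```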

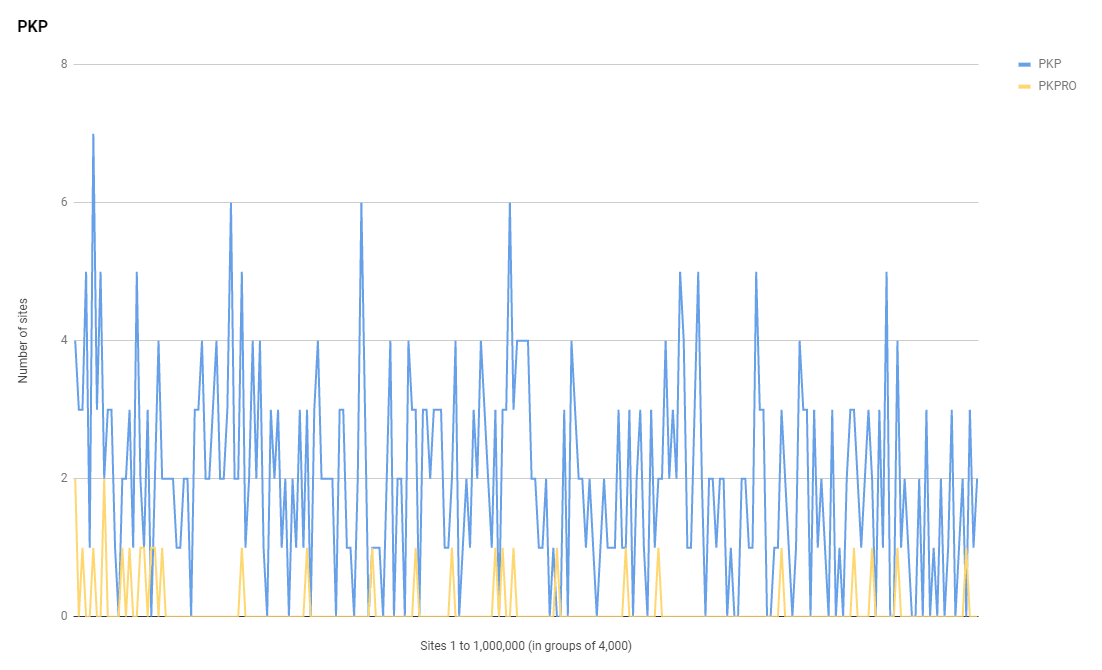

HTTP Public Key Pinning

There are still quite a few sites sending HPKP headers, despite it having been deprecated for quite some time now! The numbers are low, but they're definitely still there.

Here are the lists of sites sending a PKP and PKPRO header.
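PKP and PKPRO are shorthand for the `Public-Key-Pins` and `Public-Key-Pins-Report-Only` response headers. If you want to check whether one of your own sites is still sending them, something like the sketch below would do it, assuming you already have the response headers as a dictionary; `hpkp_headers_sent` is a hypothetical helper name.

```python
HPKP_HEADERS = ("public-key-pins", "public-key-pins-report-only")


def hpkp_headers_sent(headers):
    """Return the deprecated HPKP header names present in a response's headers."""
    # Header names are case-insensitive, so compare in lower case.
    names = {name.lower() for name in headers}
    return [header for header in HPKP_HEADERS if header in names]


# Illustrative response headers with placeholder values.
response_headers = {
    "Server": "cloudflare",
    "Public-Key-Pins-Report-Only": "max-age=0",
}
print(hpkp_headers_sent(response_headers))
```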

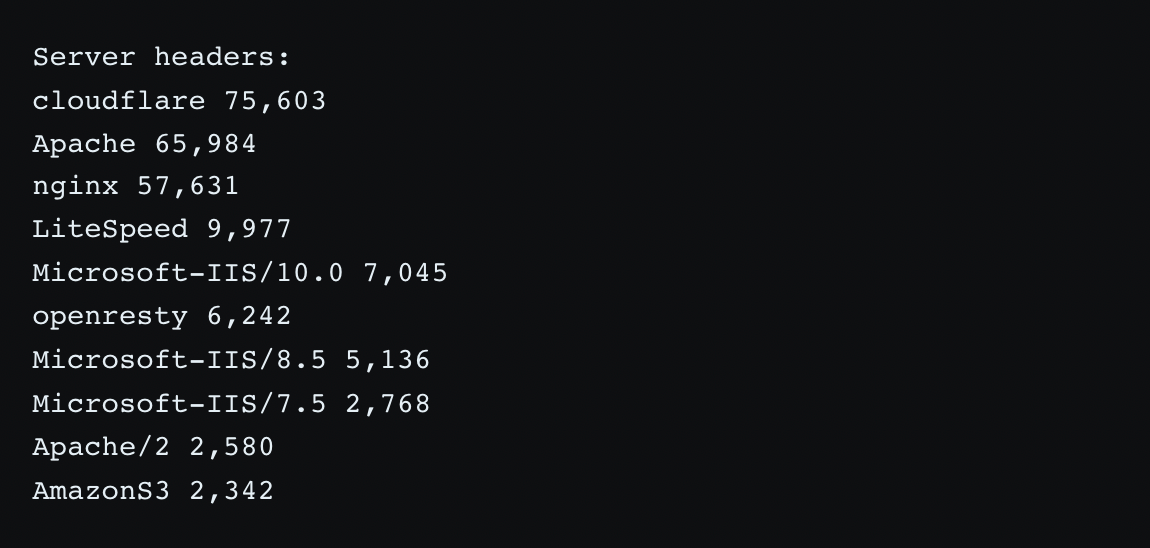

Server headers

Cloudflare have now solidified their position as the most popular value in the Server header. Here are the Top 10 values:

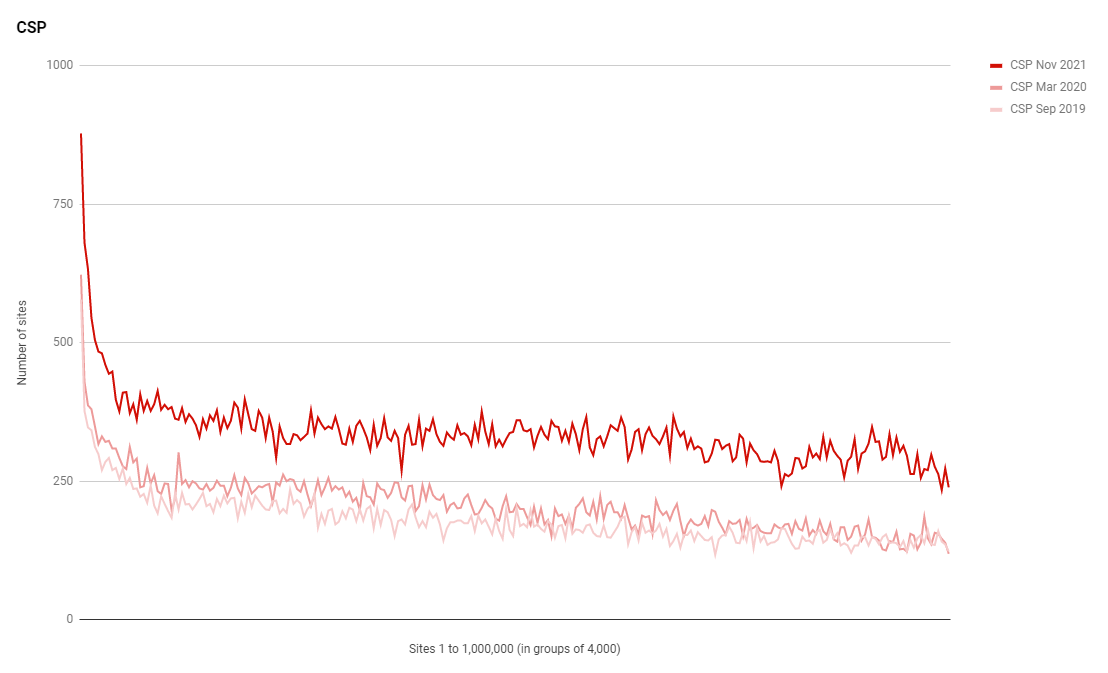

Content Security Policy

A powerful application security feature, CSP allows sites to control what resources are allowed to be loaded on their website and where their pages can send data to. You can read a whole collection of blog posts on my site about CSP and its main purpose of defending against XSS (Cross-Site Scripting) attacks, which is possibly what all of the new sites using CSP want!

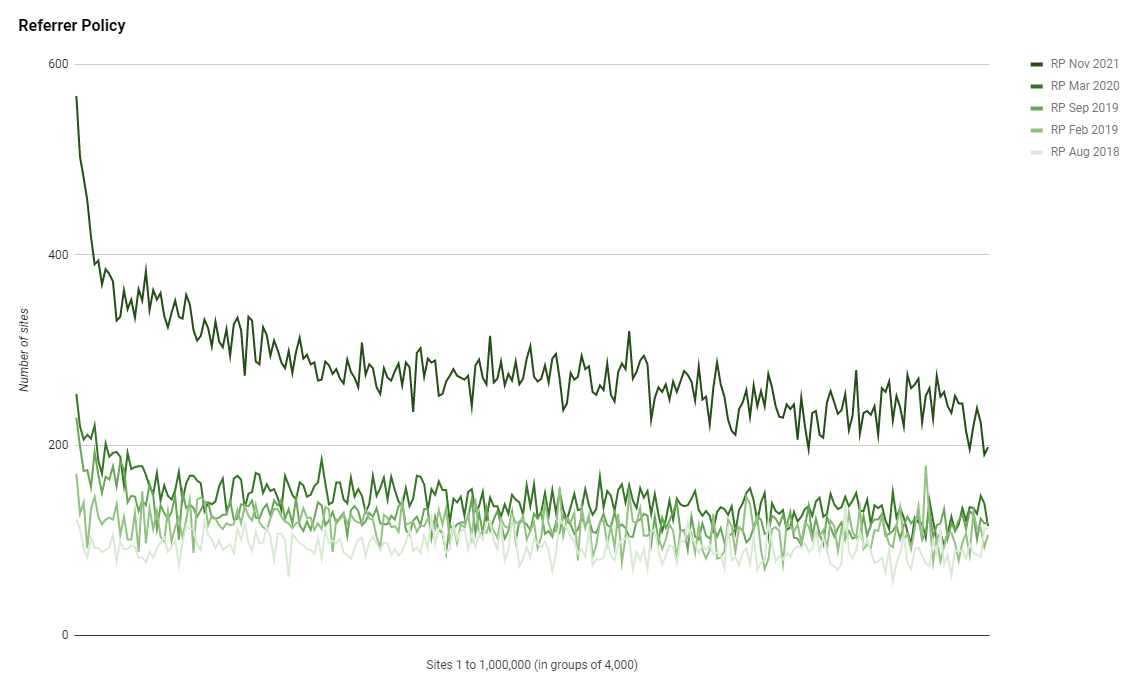

Referrer Policy

A security header that's seen quite a large amount of growth, Referrer Policy now has a substantial amount of usage across the Top 1 Million sites. It's nice to see security features being deployed faster than previous trends would have allowed us to predict.

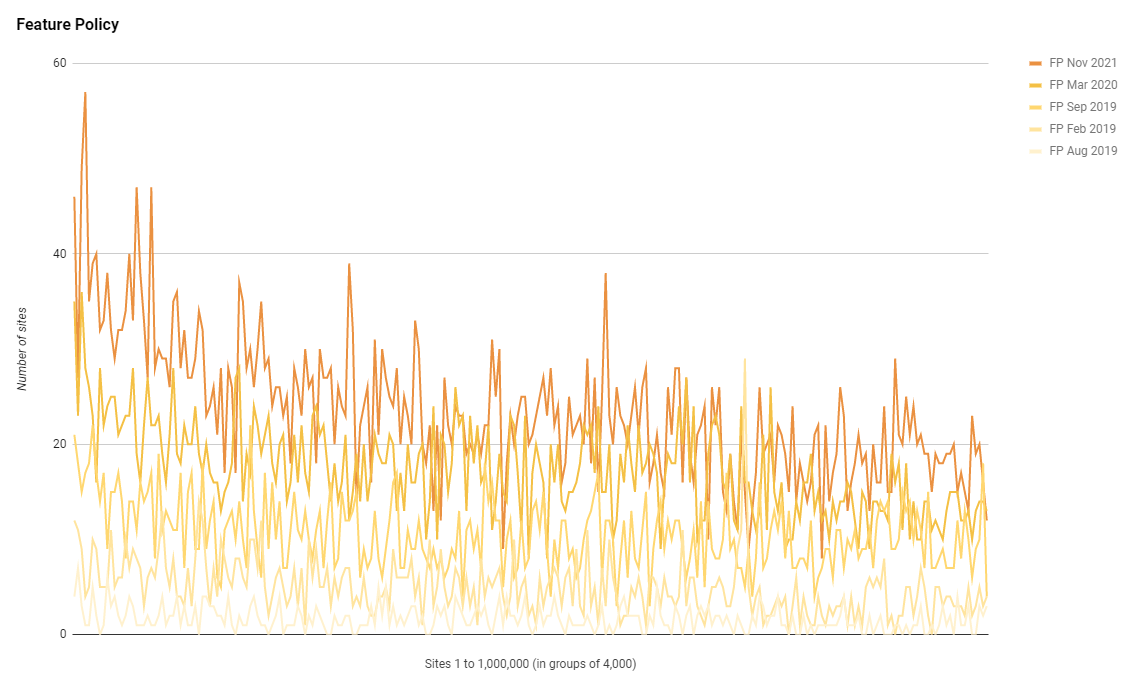

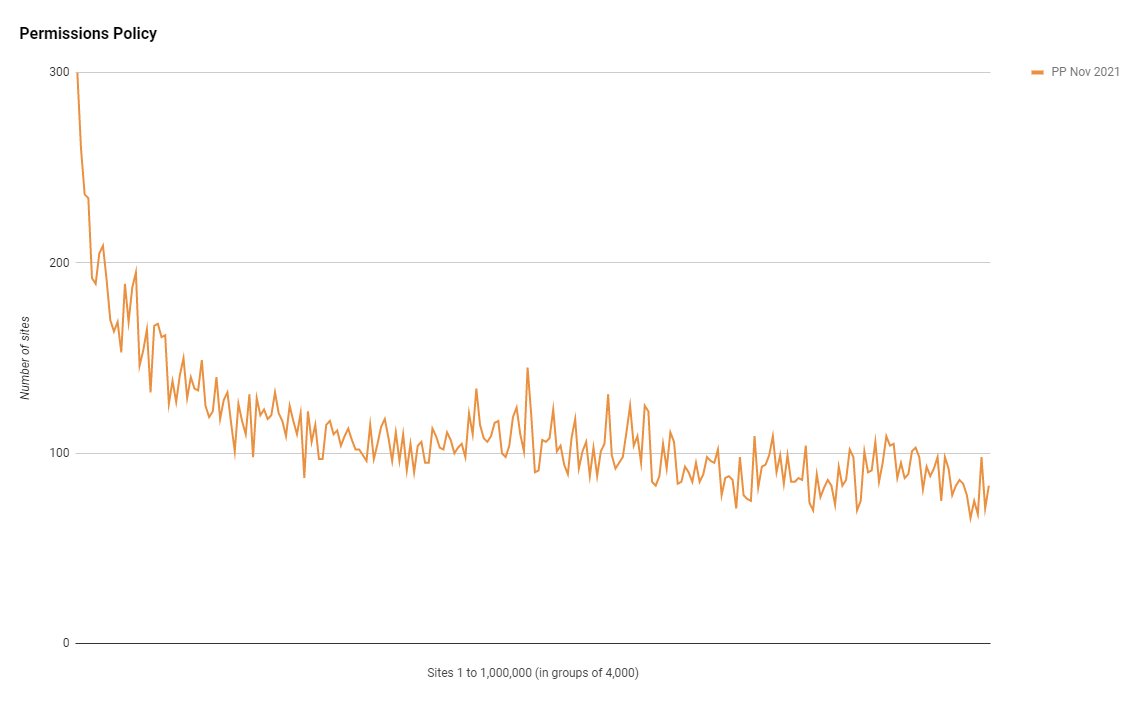

Feature Policy vs. Permissions Policy

Feature Policy was deprecated back in Sep 2020 and replaced with Permissions Policy, but despite that, we've still seen a large amount of growth in the use of Feature Policy!

The new header, Permissions Policy, is seeing some growth in usage but as this is the first scan that looks at the use of the header, there is no historic data to compare it to.

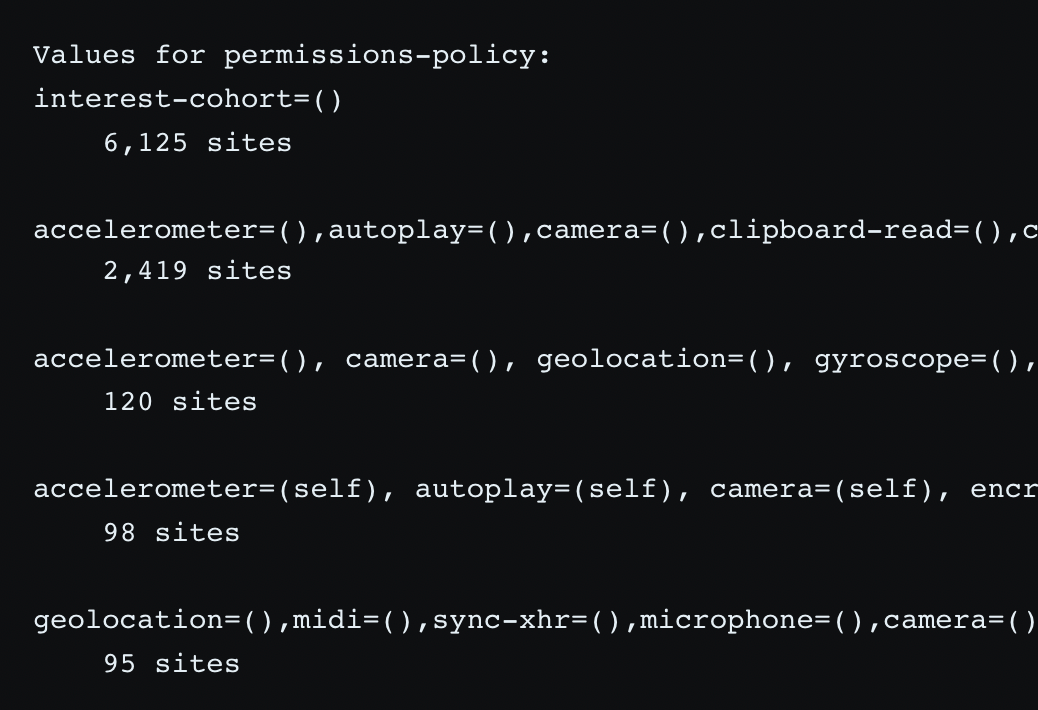

One thing that is helpful though is that a new feature called FLoC (Federated Learning of Cohorts) can be controlled via Permissions Policy and if we look at the values of the Permissions Policy headers, we can see that's where the surge in usage is coming from. Here are the five most common policy values in the Permissions Policy header.

The most common value is by far the simplest of just interest-cohort=(), which opts the site in question out of being used in FLoC.
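Checking a header for that opt-out is straightforward. The sketch below uses a deliberately simplified parser that splits the header on commas, which is fine for values like the one above but is not a full structured-field parser; both function names are hypothetical.

```python
def parse_permissions_policy(value):
    """Very simplified parse of a Permissions-Policy value into {feature: allowlist}."""
    policy = {}
    for member in value.split(","):
        name, sep, allowlist = member.strip().partition("=")
        if name and sep:
            policy[name.strip().lower()] = allowlist.strip()
    return policy


def opts_out_of_floc(value):
    """True if the policy sets an empty allowlist for interest-cohort."""
    return parse_permissions_policy(value).get("interest-cohort") == "()"


print(opts_out_of_floc("interest-cohort=()"))
print(opts_out_of_floc("geolocation=(self)"))
```

An empty allowlist, written as `()`, means the feature is allowed for no origins at all, which is why that single directive is enough to opt out.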

Get the data

If you want to see the data that these scans are based on then there are several things to check out. All of the tables/graphs/data that this report was based on are available on the Google Sheet here. The crawler fleet itself and the daily data are available over on Crawler.Ninja so head over there for those. There's also a full mysqldump of the crawler database with the raw crawl data for every single scan I've ever done, almost 4TB of data, available via the links on the site, which means if you want to do some additional analysis the data is there for you to use!

If you just want to have a quick glance through some interesting data, I'd highly recommend the daily crawl summary which allows you to quickly and easily check up on all of the metrics that I discussed above and more.

Onwards to May 2022

As I mentioned right at the start of this post, this report was only possible with the awesome support provided by Venafi. Along with sponsoring this report, they've also sponsored the next report in 2022 so we can look forward to tracking our progress over the next 6 months!