Security is always a concern for applications running on a Kubernetes cluster, especially when sensitive data is involved. At Jetstack Consult, security is our priority, and one of our recommendations to clients is to avoid storing sensitive data in Kubernetes Secrets: their values are only base64-encoded, so anyone with read access to them in the cluster can view them, which is a security risk.

Google Cloud Secret Manager is a secure service that helps you protect your applications and services’ secrets, by storing sensitive data like API keys, passwords, and TLS certificates needed at runtime, or even application configuration you want to inject without storing it in the cluster. This service also enables you to easily rotate, manage, and retrieve the secrets.

However, making these secrets available to applications running in a Kubernetes cluster involves deploying extra tooling, such as the Secrets Store CSI Driver.

This Kubernetes SIG project allows your applications to access secrets stored in different secrets management backends, including Google Cloud Secret Manager. Secrets Store CSI Driver for GCP is an open-source provider for retrieving secrets from GCP Secret Manager and mounting them as volumes in Kubernetes Pods. The Secrets Store CSI Driver for GCP is now available as an add-on for GKE. This is a Google-managed and officially supported version of the open-source project, and it can be enabled on both Standard and Autopilot clusters.

Some of its benefits:

- Fully managed and supported solution to access Secret Manager secrets from within GKE without any operational overhead.

- No custom code to access secrets stored in Secret Manager.

- Central storage and management of all your secrets in Secret Manager, with selective access from GKE Pods through the Secret Manager add-on.

However, as a beta service it has some limitations: it doesn't yet support two features available in the open-source Secrets Store CSI Driver, secret auto-rotation and sync as Kubernetes Secret.

Note that if you're using the open-source Secrets Store CSI Driver to access secrets, you can migrate to the Secret Manager add-on, but you should be aware of the limitations mentioned above.

In this blog post, we are going to look at how to set up and use the Secret Manager add-on on a Standard GKE cluster with a Prometheus and Alertmanager monitoring stack, where Alertmanager handles alerts sent by the Prometheus server and routes them to PagerDuty, the receiver integration for notifications. The example also covers setting up the IAM permissions needed to authenticate to the Secret Manager API, installing the Secret Manager add-on, and consuming a secret from Secret Manager that Alertmanager needs to authenticate to PagerDuty.

Enable Secret Manager add-on

First, let’s create a GKE Standard cluster with the Secret Manager add-on enabled, using the gcloud command line.

Note: The Secret Manager add-on for GKE can’t currently be enabled with Terraform.

    gcloud beta container clusters create fleetops \
        --enable-secret-manager \
        --location=europe-west2-a \
        --cluster-version=1.29 \
        --workload-pool=jetstack-maria.svc.id.goog

    # Retrieve the GKE cluster credentials
    gcloud container clusters get-credentials fleetops --location=europe-west2-a

Once the cluster is deployed with the Secret Manager add-on enabled, we can use the Secrets Store CSI Driver in Kubernetes volumes with the driver and provisioner name secrets-store-gke.csi.k8s.io.
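Before going further, it can be worth confirming that the add-on is really active. The following is a minimal sketch rather than an official procedure: it greps the cluster description for the secretManagerConfig field and looks up a CSIDriver object named after the driver. That the managed driver registers a CSIDriver object under this exact name is an assumption based on the driver name given above.

```shell
#!/usr/bin/env bash
# Names taken from the post; adjust for your own cluster.
CLUSTER_NAME="fleetops"
LOCATION="europe-west2-a"
CSI_DRIVER="secrets-store-gke.csi.k8s.io"

# Check the add-on flag on the cluster resource (skipped if gcloud is absent).
if command -v gcloud >/dev/null 2>&1; then
  gcloud container clusters describe "$CLUSTER_NAME" --location="$LOCATION" \
    | grep -A 1 secretManagerConfig \
    || echo "secretManagerConfig not found: the add-on may not be enabled"
else
  echo "gcloud not found; skipping cluster check"
fi

# Assumption: the managed driver registers a CSIDriver object under its driver name.
if command -v kubectl >/dev/null 2>&1; then
  kubectl get csidriver "$CSI_DRIVER" \
    || echo "CSIDriver $CSI_DRIVER not found"
else
  echo "kubectl not found; skipping driver check"
fi
```

If the first check prints `enabled: true` under secretManagerConfig, the cluster was created with the add-on switched on.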

As mentioned before, we’re deploying Prometheus and Alertmanager with PagerDuty integration as an example. This integration improves the efficiency and effectiveness of incident response on a Kubernetes platform. Prometheus alerts reach PagerDuty through the Alertmanager configuration file, in which the PagerDuty Integration Key is set. To get the Integration Key for the Prometheus integration, follow the documentation.

Set up Alertmanager configuration

Create the Alertmanager config file (alertmanager.yml) using the PagerDuty Integration Key as the routing_key value.

    global:
      resolve_timeout: 5m
      http_config:
        tls_config:
          insecure_skip_verify: true
    route:
      group_by: ['job']
      group_wait: 30s
      group_interval: 1m
      repeat_interval: 2m
      receiver: 'pagerduty'
    receivers:
      - name: 'pagerduty'
        pagerduty_configs:
          - routing_key: 'R03E1UDKLXRGYxxxxxxxxxxxx'

Typically the alertmanager.yml file is mounted in the Alertmanager pod as a ConfigMap, but to avoid exposing our PagerDuty key, let’s create a secret in GCP Secret Manager containing the config file.

To create a secret in Secret Manager, grant your Google Cloud user account the role roles/secretmanager.admin so that it can create and manage secrets. Create the secret alertmanager-config as follows:

    SECRET_NAME="alertmanager-config"
    gcloud secrets create $SECRET_NAME \
        --replication-policy="automatic"

Add a version to the secret with the alertmanager.yml file:

    FILE_NAME="alertmanager/alertmanager.yml"
    gcloud secrets versions add $SECRET_NAME --data-file=$FILE_NAME
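Since the whole config file becomes the secret payload, a quick local check before uploading can catch an empty path or a file without the key. The sketch below is only illustrative: check_secret_payload is a hypothetical helper, and the 64 KiB ceiling is Secret Manager's documented per-version payload limit as I understand it, so verify it against the current quotas.

```shell
#!/usr/bin/env bash
# Assumption: Secret Manager's payload limit of 64 KiB per secret version.
MAX_PAYLOAD_BYTES=65536

# Hypothetical helper: returns 0 if the file looks safe to upload, non-zero otherwise.
check_secret_payload() {
  local file="$1"
  if [ ! -f "$file" ]; then
    echo "missing file: $file" >&2
    return 1
  fi
  local size
  size=$(( $(wc -c < "$file") ))
  if [ "$size" -gt "$MAX_PAYLOAD_BYTES" ]; then
    echo "payload too large: $size bytes" >&2
    return 2
  fi
  if ! grep -q 'routing_key:' "$file"; then
    echo "no routing_key found in $file" >&2
    return 3
  fi
  echo "ok: $file ($size bytes)"
}

FILE_NAME="alertmanager/alertmanager.yml"
check_secret_payload "$FILE_NAME" \
  || echo "fix the file before running 'gcloud secrets versions add'"
```

The helper only inspects the local file; it never contacts Secret Manager, so it is safe to run repeatedly.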

Grant Permissions

The Google Secret Manager provider uses the workload identity of the Pod that a secret is mounted onto when authenticating to the Secret Manager API. See more about how to use Kubernetes Service Accounts and Workload Identity Federation in this blog.

Create a Kubernetes ServiceAccount in the monitoring namespace:

    apiVersion: v1
    kind: ServiceAccount
    metadata:
      name: alertmanager-secret-sa
      namespace: monitoring

Grant the alertmanager-secret-sa Kubernetes ServiceAccount permission to access the secret:

    gcloud projects add-iam-policy-binding jetstack-maria --role=roles/secretmanager.secretAccessor \
        --member=principal://iam.googleapis.com/projects/1234567890/locations/global/workloadIdentityPools/<PROJECT_ID>.svc.id.goog/subject/ns/monitoring/sa/alertmanager-secret-sa \
        --condition=None

In the member string, 1234567890 stands for the project number and <PROJECT_ID> for the project ID (jetstack-maria in this example).
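The principal identifier is long and easy to mistype, so it can help to assemble it from its parts. This sketch builds the member string from shell variables, using the placeholder project number and the project ID from this example. It then prints, without running it, a narrower alternative that binds the role on the alertmanager-config secret only. That secret-level binding is a suggestion, not what this post does.

```shell
#!/usr/bin/env bash
# Placeholder values: replace with your own project number and project ID.
PROJECT_NUMBER="1234567890"
PROJECT_ID="jetstack-maria"
NAMESPACE="monitoring"
KSA_NAME="alertmanager-secret-sa"

# Workload Identity Federation principal for a single Kubernetes ServiceAccount.
MEMBER="principal://iam.googleapis.com/projects/${PROJECT_NUMBER}/locations/global/workloadIdentityPools/${PROJECT_ID}.svc.id.goog/subject/ns/${NAMESPACE}/sa/${KSA_NAME}"
echo "$MEMBER"

# Narrower alternative (printed for review, not executed):
# grant access to the one secret instead of every secret in the project.
echo gcloud secrets add-iam-policy-binding alertmanager-config \
  --role=roles/secretmanager.secretAccessor \
  --member="$MEMBER"
```

Scoping the binding to a single secret keeps the ServiceAccount from reading other secrets in the same project.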

Describe which files to create

To specify which secrets to mount as files in the Kubernetes Pod, we have to create a SecretProviderClass that lists the alertmanager-config secret and the filename under which it is mounted in the volume.

    apiVersion: secrets-store.csi.x-k8s.io/v1
    kind: SecretProviderClass
    metadata:
      name: alertmanager-config-secret
      namespace: monitoring
    spec:
      provider: gke
      parameters:
        secrets: |
          - resourceName: "projects/jetstack-maria/secrets/alertmanager-config/versions/latest"
            path: "alertmanager.yml"
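Because the add-on doesn't yet support auto-rotation, it may be clearer to pin an explicit secret version than to rely on latest. The sketch below generates the same SecretProviderClass from shell variables. The version number 1 and the output filename secretproviderclass.yaml are assumptions chosen for illustration.

```shell
#!/usr/bin/env bash
PROJECT_ID="jetstack-maria"
SECRET_NAME="alertmanager-config"
SECRET_VERSION="1"   # assumption: pin version 1 instead of "latest"
OUT_FILE="secretproviderclass.yaml"

# Render the manifest; the unquoted heredoc delimiter lets the variables expand.
cat > "$OUT_FILE" <<EOF
apiVersion: secrets-store.csi.x-k8s.io/v1
kind: SecretProviderClass
metadata:
  name: alertmanager-config-secret
  namespace: monitoring
spec:
  provider: gke
  parameters:
    secrets: |
      - resourceName: "projects/${PROJECT_ID}/secrets/${SECRET_NAME}/versions/${SECRET_VERSION}"
        path: "alertmanager.yml"
EOF

cat "$OUT_FILE"
# Apply with: kubectl apply -f secretproviderclass.yaml
```

With a pinned version, rolling out a new Alertmanager config means adding a secret version, bumping SECRET_VERSION, and re-applying the manifest.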

Deploy Prometheus and Alertmanager

To quickly deploy and set up Prometheus and to see the manifests used in this blog post, you can visit and clone the repo: https://github.com/maria-reynoso/fleetops-gke-secret-manager-addon/tree/main

Deploy Prometheus:

    make deploy_prometheus

You can use kubectl port-forward to access the Prometheus console at http://localhost:8080 and check that everything is working.

Let’s now deploy Alertmanager and mount the volume referencing the SecretProviderClass object we created earlier which acts as the provider. The volume config at /etc/config is mounted using the CSI driver (secrets-store-gke.csi.k8s.io).

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: alertmanager
      namespace: monitoring
    ...
        spec:
          serviceAccountName: alertmanager-secret-sa
          containers:
            - name: prometheus-alertmanager
              image: prom/alertmanager
              imagePullPolicy: Always
              ...
              volumeMounts:
                - name: config
                  mountPath: /etc/config
                - name: alertmanager-local-data
                  mountPath: "/data"
                  subPath: ""
          volumes:
            - name: config
              csi:
                driver: secrets-store-gke.csi.k8s.io
                readOnly: true
                volumeAttributes:
                  secretProviderClass: alertmanager-config-secret
            - name: alertmanager-local-data
              emptyDir: {}

Create the services for Alertmanager, one of them exposed through a GCP external load balancer:

    apiVersion: v1
    kind: Service
    metadata:
      name: alertmanager
      namespace: monitoring
      labels:
        k8s-app: alertmanager
    spec:
      ports:
        - name: http
          port: 9093
          protocol: TCP
          targetPort: 9093
      selector:
        k8s-app: alertmanager
      type: "LoadBalancer"
    ---
    apiVersion: v1
    kind: Service
    metadata:
      name: alertmanager-operated
      namespace: monitoring
      labels:
        k8s-app: alertmanager
    spec:
      type: "ClusterIP"
      clusterIP: None
      selector:
        k8s-app: alertmanager
      ports:
        - name: mesh
          port: 6783
          protocol: TCP
          targetPort: 6783
        - name: http
          port: 9093
          protocol: TCP
          targetPort: 9093

Visit the Alertmanager console using the external IP:

kubectl get svc -n monitoring

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

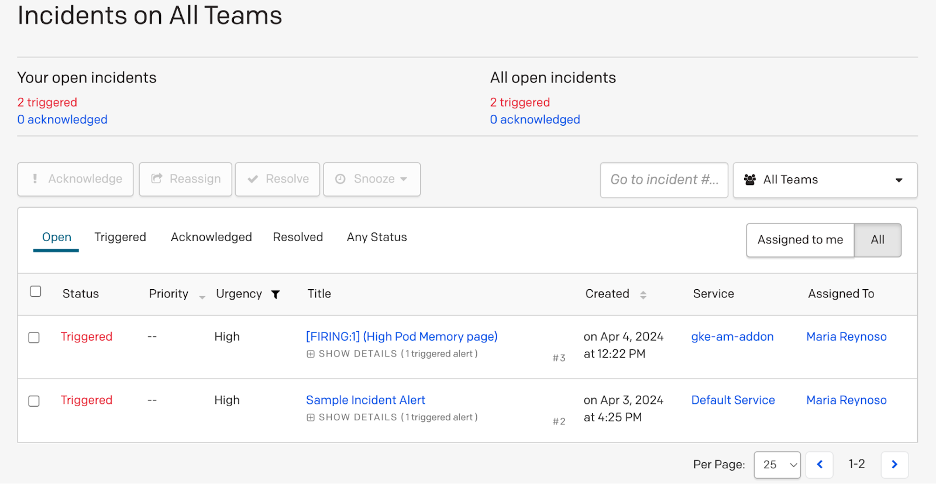

alertmanager LoadBalancer 10.100.211.35 34.xxxxxxx.234 9093:31083/TCP 2hWhen Prometheus starts firing alerts, these will be received by Alertmanager and sent to PagerDuty:

This integration of Google Cloud Secret Manager and Secret Store CSI Driver provides you with a centralised solution to improve your Secrets management.

At Jetstack Consult we’re often helping customers adopt and mature their cloud-native and Kubernetes offerings. If you’re interested in discussing how GKE and platform engineering can help your organisation, get in touch and see how we can work together.