Confidentiality and integrity, alongside availability, form the foundational pillars of all cybersecurity initiatives. In the context of these critical principles, two technologies often mentioned are hashing and encryption. Although they may appear similar, they serve distinct purposes. Hashing is primarily concerned with maintaining the integrity of information, whereas encryption is designed to ensure the confidentiality of data.

Differences Between Hashing and Encryption

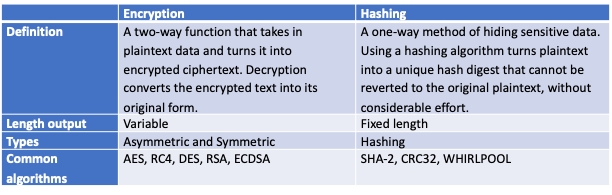

Hashing and encryption are fundamental techniques in data security with distinct functions. Encryption is a two-way process: data is encoded to protect its privacy and can be decrypted back into its original form by anyone holding the correct key. Hashing, in contrast, is a one-way process that converts data into a fixed-size hash value from which the input cannot practically be recovered. While encryption secures data for authorized access, hashing supports data integrity: any change to the input produces a different hash value, so a recipient can check that data is unaltered without the hash revealing the original data.

Although reversing a hash function is technically possible, for example by guessing inputs until one matches, the resources needed are so significant that it is considered impractical. Both mechanisms play essential roles in data security, ensuring privacy and integrity in today's digital age.

The following table provides a comparison of the two fundamental security techniques.

When to use hashing

Hashing is the act of using a hash function to transform data of arbitrary size into a fixed-length result. This value is referred to as a hash value, hash code, digest, checksum, or simply hash.
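As a minimal sketch of the fixed-length property, the snippet below hashes a one-byte input and a one-megabyte input with SHA-256 from Python's standard `hashlib` module. The helper name `sha256_hex` and the sample inputs are illustrative choices, not part of any standard.

```python
import hashlib


def sha256_hex(data: bytes) -> str:
    """Return the SHA-256 digest of data as a hexadecimal string."""
    return hashlib.sha256(data).hexdigest()


short_digest = sha256_hex(b"a")
long_digest = sha256_hex(b"a" * 1_000_000)

# Both digests have the same length (64 hex characters = 256 bits),
# no matter how large the input was.
print(len(short_digest), len(long_digest))

# Changing a single character of the input gives a completely different digest.
print(sha256_hex(b"hello"))
print(sha256_hex(b"hellp"))
```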

Computers use hashing in two primary areas:

- To determine the integrity of a file or message during transmission over an open network. For example, Alice can send Bob a file together with its hash value. Bob can then calculate the hash value of the file he received. A match between the two hash values tells Bob that the file's integrity is intact.

- To build hash tables. A hash table is a data structure that stores data using the hash value as the table index and the original data as the value.
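Both uses can be sketched in a few lines of Python. The first part mirrors the Alice and Bob exchange using SHA-256. The second part is a deliberately tiny hash table built on Python's built-in, non-cryptographic `hash()`. All names here (`is_intact`, `TinyHashTable`, the sample file contents) are hypothetical and chosen only for illustration.

```python
import hashlib
import hmac


def digest(data: bytes) -> str:
    return hashlib.sha256(data).hexdigest()


def is_intact(received: bytes, expected_digest: str) -> bool:
    # compare_digest compares the two strings in constant time
    return hmac.compare_digest(digest(received), expected_digest)


# Alice publishes the file together with its digest.
original = b"quarterly-report-v1"
published_digest = digest(original)

# Bob recomputes the digest over the bytes he actually received.
print(is_intact(b"quarterly-report-v1", published_digest))  # True
print(is_intact(b"quarterly-report-v2", published_digest))  # False


class TinyHashTable:
    """Toy hash table: the key's hash selects the bucket that stores the value."""

    def __init__(self, buckets: int = 8):
        self._buckets = [[] for _ in range(buckets)]

    def _index(self, key) -> int:
        return hash(key) % len(self._buckets)

    def put(self, key, value) -> None:
        bucket = self._buckets[self._index(key)]
        for i, (existing_key, _) in enumerate(bucket):
            if existing_key == key:
                bucket[i] = (key, value)  # overwrite an existing entry
                return
        bucket.append((key, value))

    def get(self, key):
        for existing_key, value in self._buckets[self._index(key)]:
            if existing_key == key:
                return value
        raise KeyError(key)


table = TinyHashTable()
table.put("alice", "alice@example.com")
print(table.get("alice"))
```

A matching digest only proves integrity if Bob obtained the expected digest through a channel the attacker could not also modify.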

Common hashing algorithms

Hashing algorithms are used to authenticate data and ensure that the data maintains integrity. By converting a data string into a numeric string output of fixed length, the hashing algorithm can verify that the data has not been tampered with, modified, or corrupted.

The following are the most used hashing algorithms:

Message Digest Algorithm (MD5)

MD5 was developed as an improved version of MD4 after major security flaws were discovered in MD4. It produces a 128-bit output for inputs of any length. Although it addressed several of MD4's weaknesses, MD5 itself was unable to offer lasting security: practical collision attacks exist, meaning that two different inputs can be constructed to produce the same digest. MD5 is still widely deployed, but this susceptibility to collisions is the main concern about its continued use.

Secure Hash Algorithm (SHA-1, SHA-2, SHA-3)

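The Secure Hash Algorithm (SHA) family is a set of hash functions published by NIST and used to hash data and certificates. SHA-1 and SHA-2 were designed by the NSA and follow a construction similar to that of MD5, while SHA-3 is based on a different design chosen through a public competition. Several names appear when SHA is discussed: SHA-1, SHA-2, and SHA-3 name the generations, while SHA-224, SHA-256, SHA-384, and SHA-512 name SHA-2 variants by digest length.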

SHA-1 was the first widely deployed member of the family and generates 160-bit hash digests. The larger names, such as SHA-256, are simply SHA-2 variants with different digest lengths. SHA-2 can produce digests from 224 to 512 bits. Longer digests make collisions harder to find, but no fixed-length hash can guarantee entirely unique values.
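The digest lengths of the SHA family can be checked directly with Python's standard `hashlib` module. This is a small sketch. The dictionary name `digest_bits` is an illustrative choice, and the list of algorithms is limited to ones `hashlib` documents as always available.

```python
import hashlib

SHA_NAMES = ("sha1", "sha224", "sha256", "sha384", "sha512", "sha3_256", "sha3_512")

# digest_size is reported in bytes, so multiply by 8 to get bits
digest_bits = {name: hashlib.new(name).digest_size * 8 for name in SHA_NAMES}

for name, bits in digest_bits.items():
    print(f"{name}: {bits}-bit digest")
```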

Since 2005, experts have recognized that SHA-1 is susceptible to collision attacks. In 2011, NIST (the National Institute of Standards and Technology) officially deprecated SHA-1 in response to growing concerns. In February 2017, Google and the Dutch research institute CWI announced that they had broken SHA-1 in practice by producing a real collision: two different files with the same SHA-1 digest. This discovery highlights the risks of SHA-1 and the need for websites to transition to SHA-2 or SHA-3.

WHIRLPOOL

Vincent Rijmen and Paulo Barreto devised the WHIRLPOOL algorithm, which accepts any message shorter than 2^256 bits and returns a 512-bit message digest. Whirlpool-0 is the name of the initial version, Whirlpool-T is the name of the second version, and Whirlpool is the name of the most recent version.

Even if there are no documented security flaws in earlier versions of Whirlpool, the most recent revision has improved hardware implementation efficiency and is likely more secure. Whirlpool is the version adopted by the international standard ISO/IEC 10118-3.

TIGER

Tiger is a hash function developed by Ross Anderson and Eli Biham in 1995 for 64-bit platforms. Compared with the MD5 and SHA algorithms of its time, it was designed to be faster and more efficient on such hardware. It produces a 192-bit hash value and can also be used on 32-bit systems, though at reduced speed. Tiger2 is a variant of this algorithm that differs in how the message is padded. Tiger has no patents or usage restrictions.

Encryption

Encryption is the technique of converting plaintext and other data into a form that can only be read by an authorized party holding the decryption key. It prevents fraudsters from accessing your sensitive information and is a primary method for securing data in modern communication systems. To read an encrypted message, the recipient must possess the decryption password or security key. Unencrypted data is referred to as plaintext, while encrypted data is referred to as ciphertext.

When to encrypt data

The primary purpose of encryption is to prevent unauthorized individuals from reading or obtaining information from a message that was not meant for them. Encryption increases the security of message transmission over the Internet or any other network.

Encrypting data serves primarily to safeguard confidentiality while data is either at rest – stored on a computer system or in the cloud – or in transit – transmitted over a network. Authentication, data integrity, and non-repudiation are three of the most important security aspects provided by modern data encryption techniques.

The authentication feature enables the origin of a message to be verified. The integrity function assures that the content of a message has not been altered since it was sent. In addition, non-repudiation ensures that the sender of a message cannot deny having sent it. Because of these features, encryption is a key requirement across many security and privacy regulations and standards, including HIPAA, PCI DSS, GDPR, and others.
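One common building block for the authentication and integrity properties is a keyed hash (HMAC), sketched below with Python's standard `hmac` module. Note the assumptions: HMAC is a message authentication code rather than encryption, the function names `sign` and `verify` and the sample message are hypothetical, and because both parties hold the same key this sketch does not provide non-repudiation, which requires digital signatures.

```python
import hashlib
import hmac
import secrets


def sign(key: bytes, message: bytes) -> bytes:
    """Compute an HMAC-SHA256 tag over the message with the shared key."""
    return hmac.new(key, message, hashlib.sha256).digest()


def verify(key: bytes, message: bytes, tag: bytes) -> bool:
    """Recompute the tag and compare it in constant time."""
    return hmac.compare_digest(sign(key, message), tag)


shared_key = secrets.token_bytes(32)  # known only to sender and recipient
message = b"transfer 100 to account 42"
tag = sign(shared_key, message)

print(verify(shared_key, message, tag))                         # True
print(verify(shared_key, b"transfer 900 to account 42", tag))   # False: altered
print(verify(secrets.token_bytes(32), message, tag))            # False: wrong key
```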

Encoding vs encryption

Encryption and encoding are frequently confused and used interchangeably. Encoding is the process of transforming data into a format that can be used by a variety of system types. Anyone who knows the algorithm used to encode the data can easily decode it into a readable format. Unlike encryption, encoding does not require a key to reverse. Common encoding schemes include ASCII, Unicode, URL encoding, and Base64.

Encoding prioritizes data accessibility over confidentiality, which is the primary objective of encryption. The primary purpose of encoding is to convert data so that it can be used by a different sort of system. It is not used to protect data since, unlike encryption, it is simple to reverse.
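The short sketch below shows why encoding offers no confidentiality: Base64 and URL encoding are reversed with standard library calls and no key at all. The sample string is made up for illustration.

```python
import base64
from urllib.parse import quote, unquote

text = "user=alice&role=admin"

# Base64: bytes in, ASCII-safe text out
b64 = base64.b64encode(text.encode("utf-8")).decode("ascii")
# URL encoding: reserved characters become %XX escapes
url = quote(text, safe="")

print(b64)
print(url)  # user%3Dalice%26role%3Dadmin

# Anyone can reverse both steps without any secret.
print(base64.b64decode(b64).decode("utf-8"))
print(unquote(url))
```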

Challenges of encryption

Although encryption algorithms offer protection for data, encrypted data is frequently the target of attacks. The mishandling of the related cryptographic keys is a primary cause of successful attacks. Attackers infiltrate systems and networks using stolen or compromised keys to intercept authorized communications and steal sensitive data.

Because quantum computing may eventually threaten current encryption techniques, some attackers are also stealing and storing encrypted data now in the hope of decrypting it in the future.

Side-channel attacks are another prominent method. This form of attack targets characteristics of the implementation, such as power usage, rather than the encryption algorithm itself. If there is a flaw in system design or implementation, these attacks are likely to succeed.
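Power analysis is hard to show in a few lines, so the sketch below illustrates a different implementation characteristic that can leak information: running time. The function `leaky_equals` is a hypothetical example of a comparison that stops at the first mismatch, so its duration depends on how many leading bytes of a guess are correct. The standard library's `hmac.compare_digest` is designed to avoid that dependence.

```python
import hmac


def leaky_equals(a: bytes, b: bytes) -> bool:
    """Return at the first mismatching byte, so timing depends on the data."""
    if len(a) != len(b):
        return False
    for x, y in zip(a, b):
        if x != y:
            return False  # early exit reveals the position of the mismatch
    return True


def constant_time_equals(a: bytes, b: bytes) -> bool:
    """Compare without an early exit that depends on the contents."""
    return hmac.compare_digest(a, b)


secret_tag = b"3f9a1c7e"
print(leaky_equals(secret_tag, b"3f9a0000"))          # False, after 4 matching bytes
print(constant_time_equals(secret_tag, b"3f9a0000"))  # False, same answer
print(constant_time_equals(secret_tag, b"3f9a1c7e"))  # True
```

Both functions return the same answers. The difference lies only in what an attacker who can measure timing might learn.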

How does encryption work?

During the data encryption process, the data is transformed using an encryption algorithm and an encryption key. This procedure yields ciphertext, which can only be returned to its original form by decrypting it with the proper key. There are two primary types of encryption based on the key type: symmetric encryption and asymmetric encryption.

Symmetric encryption

Symmetric encryption utilizes a single secret key for both encrypting and decrypting data. Its main benefit is its speed, being significantly quicker than asymmetric encryption. However, a drawback is the need for the sender to share the encryption key with the recipient for message decryption. To mitigate the challenge of securely exchanging the secret key, organizations have adopted a hybrid approach: using asymmetric encryption to exchange the secret session key and then employing symmetric encryption for data encryption.
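The defining property, one key for both directions, can be illustrated with a toy one-time pad that XORs the message with a random key of the same length. This is a sketch for intuition only and not a substitute for a vetted cipher such as AES: the key must be as long as the message and must never be reused. The function name `xor_bytes` and the sample message are illustrative.

```python
import secrets


def xor_bytes(data: bytes, key: bytes) -> bytes:
    """XOR each data byte with the corresponding key byte."""
    if len(key) != len(data):
        raise ValueError("key must be exactly as long as the data")
    return bytes(d ^ k for d, k in zip(data, key))


plaintext = b"meet at noon"
key = secrets.token_bytes(len(plaintext))  # the single shared secret key

ciphertext = xor_bytes(plaintext, key)     # encrypt
recovered = xor_bytes(ciphertext, key)     # decrypt with the same key

print(ciphertext.hex())
print(recovered)
```

The sketch also makes the key distribution drawback concrete: the recipient cannot compute `recovered` without somehow receiving `key`.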

Encryption algorithms by type

Symmetric encryption algorithms

The most common symmetric encryption algorithms are the following:

Advanced Encryption Standard (AES)

AES is a symmetric block cipher that encrypts data in 128-bit blocks. The key used to encrypt and decrypt the data can be 128, 192, or 256 bits long. A 128-bit key encrypts data in 10 rounds, a 192-bit key in 12 rounds, and a 256-bit key in 14 rounds. AES has demonstrated its efficiency and dependability since it was standardized in 2001. Numerous companies employ this method of encryption for both stored data and information exchanged between communicating parties.
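The relationship between key size and round count follows a simple rule in the AES specification: the number of rounds is the key length in 32-bit words plus six. The helper below is a small sketch of that arithmetic only. It performs no encryption, and the function name `aes_rounds` is an illustrative choice.

```python
AES_BLOCK_BITS = 128  # the block size is fixed, whatever the key size


def aes_rounds(key_bits: int) -> int:
    """Return the number of AES rounds for a given key size in bits."""
    if key_bits not in (128, 192, 256):
        raise ValueError("AES keys are 128, 192, or 256 bits")
    key_words = key_bits // 32  # key length in 32-bit words
    return key_words + 6


for bits in (128, 192, 256):
    print(f"AES-{bits}: {aes_rounds(bits)} rounds")
```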

Blowfish

Blowfish, a symmetric cipher with a variable-length key and a 64-bit block size, was developed by Bruce Schneier in 1993. Intended as a "general-purpose algorithm," it aimed to offer a rapid and free substitute for the outdated Data Encryption Standard (DES). While Blowfish outpaces DES in terms of speed, its limited block size has been deemed insecure, preventing it from fully supplanting DES.

Twofish

Twofish, an evolution of Blowfish, enhances security by incorporating a larger 128-bit block size. Optimized for 32-bit CPUs, this encryption algorithm is well-suited for both hardware and software applications. As an open-source, unpatented, and license-free tool, Twofish is readily available for use. It also introduces sophisticated features aimed at supplanting the Data Encryption Standard (DES) algorithm.
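Why block size matters can be sketched with the birthday bound, a rough rule of thumb rather than a precise attack cost: with an n-bit block, repeated ciphertext blocks become likely after about 2^(n/2) blocks encrypted under one key. The function name `birthday_bound_bytes` is hypothetical.

```python
def birthday_bound_bytes(block_bits: int) -> int:
    """Approximate data volume at which block collisions become likely."""
    blocks = 2 ** (block_bits // 2)  # about 2^(n/2) blocks
    block_bytes = block_bits // 8
    return blocks * block_bytes


GIB = 2 ** 30

# 64-bit blocks (Blowfish, DES): the bound is reached after about 32 GiB.
print(birthday_bound_bytes(64) / GIB)

# 128-bit blocks (Twofish, AES): the bound is 2^68 bytes, far beyond practical volumes.
print(birthday_bound_bytes(128) == 2 ** 68)
```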

Rivest Cipher (RC4)

Ron Rivest developed Rivest Cipher 4 (RC4), a stream cipher that encrypts data byte by byte, for RSA Security in 1987. As one of the most widely used stream ciphers, RC4 was integral to the SSL/TLS protocols and to WEP (Wired Equivalent Privacy), the original security protocol of the IEEE 802.11 wireless LAN standard. Its popularity stemmed from its ease of use and fast performance. However, due to substantial vulnerabilities, RC4 has fallen out of use.

Data Encryption Standard (DES)

The Data Encryption Standard (DES) is a symmetric key algorithm used for encrypting digital information. While its 56-bit key is now too short for modern security needs, DES has been influential in cryptographic development. To enhance security, variations like double DES and triple DES (3DES) were introduced, using longer keys of 112 bits and 168 bits, respectively. In 3DES, the DES algorithm is run three times with three keys, and it is deemed secure only when each key is unique. Following public review, standards dictate that 3DES is to be phased out for new applications, with complete discontinuation planned after 2023.
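The key lengths quoted above follow from stacking independent 56-bit DES keys, as this small sketch shows. These are nominal key lengths. The effective strength against known attacks is lower, so the numbers are best read as upper bounds. The names `nominal_key_bits` and `key_space` are illustrative.

```python
DES_KEY_BITS = 56  # effective key bits in a single DES key


def nominal_key_bits(des_passes: int) -> int:
    """Nominal key length when each DES pass uses its own independent key."""
    return DES_KEY_BITS * des_passes


def key_space(bits: int) -> int:
    """Number of possible keys for a given key length."""
    return 2 ** bits


for label, passes in (("DES", 1), ("double DES", 2), ("3DES", 3)):
    bits = nominal_key_bits(passes)
    print(f"{label}: {bits}-bit key, {key_space(bits):.3e} possible keys")
```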

Asymmetric encryption

This type of encryption is also known as public-key cryptography. This is because the encryption process uses two distinct keys, one public and one private. The public key, as its name suggests, may be shared with anyone, whereas the private key must be kept confidential.

Asymmetric encryption algorithms

The most common asymmetric encryption algorithms are the following:

Elliptic Curve Digital Signature Algorithm (ECDSA)

ECDSA, or Elliptic Curve Digital Signature Algorithm, is a public-key algorithm used for creating digital signatures rather than for encrypting data. It relies on the algebraic structure of elliptic curves over finite fields. The primary applications of elliptic curve cryptography more broadly include the generation of pseudo-random numbers, digital signatures, and more. ECDSA performs the same function as other digital signature algorithms but more efficiently, because it achieves the same level of security with smaller keys.

Rivest-Shamir-Adleman (RSA)

The Rivest-Shamir-Adleman (RSA) algorithm, a prevalent form of asymmetric encryption, is employed in numerous products and services. RSA's core principle hinges on the ease of multiplying two large prime numbers to create a larger number, contrasted with the significant challenge of factoring this product back into its original primes. Both the public and private keys are derived from the same pair of large primes, and the product of those primes forms part of each key. RSA keys have typically been 1024 or 2048 bits long. Keys of 2048 bits remain very resistant to factorization, but 1024-bit keys are now widely considered too short for new deployments.
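The arithmetic behind RSA can be followed with a classic textbook example using tiny primes. This is a sketch of the mathematics only: real keys use primes hundreds of digits long, and real implementations add padding that this example omits. The specific numbers are the usual classroom choice, not anything drawn from a real system. The three-argument `pow` with a negative exponent requires Python 3.8 or later.

```python
# Key generation from two (tiny) primes
p, q = 61, 53
n = p * q                  # 3233, the public modulus shared by both keys
phi = (p - 1) * (q - 1)    # 3120
e = 17                     # public exponent, coprime with phi
d = pow(e, -1, phi)        # 2753, private exponent (modular inverse of e)

# Encrypt with the public key (e, n), decrypt with the private key (d, n)
message = 65
ciphertext = pow(message, e, n)    # 2790
recovered = pow(ciphertext, d, n)  # 65

print(n, d, ciphertext, recovered)
```

Multiplying 61 by 53 is trivial, while recovering them from 3233 already takes some trial division. At real key sizes that gap becomes the security of the scheme.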

Diffie-Hellman Key Exchange

The Diffie-Hellman key exchange, alternatively referred to as exponential key exchange, is a method in which two parties derive a shared key through modular exponentiation. Each party keeps a private value and transmits only a public value computed from it, so an eavesdropper who observes the exchange faces a hard mathematical problem in recovering the key. The result is a shared secret that the two parties can use to protect data sent across a public network. In this way, public-key cryptography enables the parties to agree on a secret encryption key and thereby safeguard the communication channel.
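A toy version of the exchange, with a deliberately tiny prime so the numbers can be checked by hand, might look like the sketch below. The parameters `P = 23` and `G = 5` and the function names are illustrative assumptions. Real deployments use standardized groups with primes of 2048 bits or more, or elliptic curves.

```python
import secrets

P = 23  # public prime modulus (far too small for real use)
G = 5   # public generator


def public_value(private: int) -> int:
    """Value sent over the network: G^private mod P."""
    return pow(G, private, P)


def shared_secret(their_public: int, my_private: int) -> int:
    """Value each side computes locally: their_public^my_private mod P."""
    return pow(their_public, my_private, P)


# Each party picks a private exponent and never transmits it.
alice_private = secrets.randbelow(P - 2) + 1
bob_private = secrets.randbelow(P - 2) + 1

# Only the public values cross the network.
alice_public = public_value(alice_private)
bob_public = public_value(bob_private)

alice_secret = shared_secret(bob_public, alice_private)
bob_secret = shared_secret(alice_public, bob_private)

print(alice_secret == bob_secret)  # True: both sides derive the same secret
```

With private values 4 and 3, for example, the public values are 4 and 10 and both sides arrive at the shared secret 18.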

Pretty Good Privacy (PGP)

PGP, short for Pretty Good Privacy, was initially developed as a robust program for encrypting and decrypting emails over the internet. It also provided the capability to authenticate messages through digital signatures and to encrypt files, ensuring enhanced security in digital communications. Over time, PGP has grown beyond its original application. It is now broadly used to describe any encryption software or application that follows the OpenPGP public key cryptography standard, which emerged from PGP's original specifications. This standard allows users to securely exchange data and verify the authenticity of that data through a system of public and private keys, offering a high level of security in digital communications and file protection.

Managing Machine Identities

In today's digital enterprise environments, every piece of equipment, ranging from computers and mobile devices to servers and network infrastructure, possesses a unique machine identity. The growing volume of machine interactions in digital processes, if not properly managed for authentication, presents a substantial risk to a business's continuity. This is where encryption and hashing come into play. By utilizing cryptographic keys and digital certificates, these systems are able to authenticate and verify the legitimacy of each interaction.

For machines to communicate securely with one another, they need to be properly authenticated. A machine identity goes beyond being just a digital ID number or basic identifiers like serial or part numbers. It encompasses a set of verified credentials that establish a system's or user's access rights to online services or networks. Unlike humans, computers cannot use usernames and passwords. Instead, they use credentials designed for highly automated and interconnected environments. These credentials typically involve the use of digital certificates and keys to assert the identity of the machines.

Implementing a Zero Trust security model, which operates on the principle of "Trust No One, Always Verify," necessitates the validation of machine identities. This can be achieved using Public Key Infrastructure (PKI) certificates and cryptographic key pairs, which enhance the verification process and secure connections, even among entities outside of firewall protection.

Machine identity management is a broad term that encompasses numerous technologies such as SSH key management, SSL/TLS certificate management, etc. Realizing the significance of a machine identity management program requires comprehension of the program's objectives:

- Safeguard machine identities

- Keep up with the rapid growth in the number of machines

- Secure the spread of cloud-based machines

- Protect the identity of Internet of Things (IoT) devices

To learn more about how your organization can protect and effectively manage machine identities, explore the Venafi Control Plane for Machine Identities.
