In the past few weeks alone, companies like Anthropic, Microsoft and Tesla have shown us that AI innovations and transformations aren’t slowing down anytime soon.

If anything, they’re putting the pedal to the metal (in Tesla’s case, quite literally).

At its “We, Robot” event in Burbank, California, Elon Musk unveiled the company’s latest advancements: new Optimus bots, Cybercabs and 20-passenger Robovans.

These futuristic release videos got me thinking about where AI is headed next. (Funnily enough, they also have me considering a rewatch of the 2018 film Upgrade, which features some awfully familiar-looking robo-cars.)

Upgrade (2018) vs. Tesla’s Cybercabs and Robovans, which can be expected on streets near you by 2027

All that innovation points toward autonomous AI, in other words, toward the next stage of artificial intelligence: AI agents.

What is “agentic AI”?

Agentic AI, otherwise known as AI agents, is software that can interact with environments, gather data and use that information to perform tasks to meet goals set by humans.

The big shift? These agents analyze the tools and data available to them and determine the best course of action without human intervention.

How does agentic AI work?

AI agents typically use a four-step process that mimics human reasoning and action.

- Researcher: In the first stage, the AI acts a bit like a detective, pulling data from various sources such as databases, websites or other repositories. During this stage, the AI identifies key features and recognizes patterns.

- Strategist: During this stage, the AI agent uses an LLM to oversee tasks, come up with solutions or delegate work to sub-agents, all while ensuring outputs are accurate and relevant.

- Actor: Not in the Oscar-winning sense, mind you, but in the sense of springing into action. Think of the agent as an assistant capable of executing tasks quickly and precisely on its own, while flagging any major tasks that need human intervention.

- Learner: This is where machine learning comes into play. Once tasks are completed, an AI agent will review and analyze its work and the results to refine performance over time, just the way a human does.
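To make the four stages concrete, here is a minimal Python sketch of one pass through the loop. Everything in it (the `ToyAgent` class, the ticket records, the `allowed_actions` set and the `execute` callback) is hypothetical, and the Strategist uses a fixed rule where a real agent would call an LLM.

```python
from dataclasses import dataclass, field


@dataclass
class ToyAgent:
    """Hypothetical agent walking through the four stages; not any vendor's API."""

    allowed_actions: set  # actions the agent may take without a human
    history: dict = field(default_factory=dict)  # action -> (successes, attempts)
    flagged: list = field(default_factory=list)  # actions held for human review

    def research(self, sources):
        # Researcher: pull records from every source and keep the open items.
        return [rec for source in sources for rec in source if rec["status"] == "open"]

    def strategize(self, records):
        # Strategist: map each finding to a planned action.
        # A real agent would ask an LLM here; this sketch uses a fixed rule.
        return [("close_ticket" if rec["priority"] == "low" else "escalate", rec["id"]) for rec in records]

    def act(self, plan, execute):
        # Actor: run approved actions, flag everything else for a human.
        results = []
        for action, target in plan:
            if action in self.allowed_actions:
                results.append((action, target, execute(action, target)))
            else:
                self.flagged.append((action, target))
        return results

    def learn(self, results):
        # Learner: keep per-action success counts; a fuller agent would
        # feed these back into its planning on later runs.
        for action, _target, ok in results:
            successes, attempts = self.history.get(action, (0, 0))
            self.history[action] = (successes + int(ok), attempts + 1)

    def run(self, sources, execute=lambda action, target: True):
        results = self.act(self.strategize(self.research(sources)), execute)
        self.learn(results)
        return results
```

With `allowed_actions={"close_ticket"}`, the agent closes low-priority open tickets by itself and leaves high-priority ones in `flagged` for a person, which mirrors the Actor's split between autonomous work and human intervention.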

Why is this such an important milestone in AI advancement?

You’re of course familiar with AI in its generative function: ChatGPT, Claude, Gemini, Venafi Athena and countless others. But AI agents do more than generate text, images, videos and code. They interpret questions and take action through machine learning and natural language processing.

What’s more, they can use that learning to improve their own performance: bolstering efficiency, improving task outcomes and customer satisfaction, automating and scaling workflows consistently, and providing valuable data and insights through the tasks they cross off their (self-developed) list.

This matters because these leaps in intelligence are arriving faster than expected. Just look at where we all were two years ago, with the then-nascent ChatGPT (built on GPT-3.5) and, frankly, nightmare-inducing videos of Will Smith eating spaghetti.

We’ve come a long, long way since then. But there are still miles to go.

The 5 stages of artificial intelligence

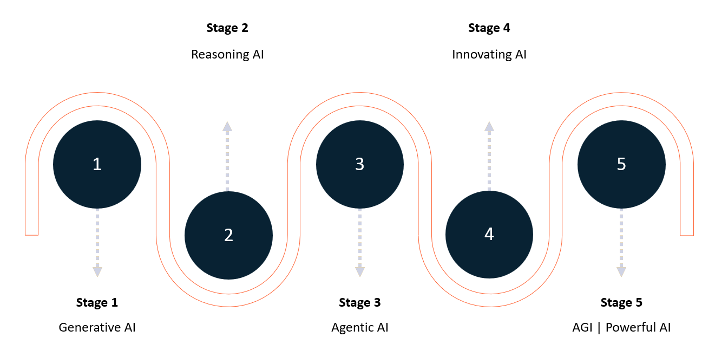

Earlier this year, OpenAI relayed its new vision for the future of AI, delineated by five stages.

Stage 1 and 2: The road so far

Stage 1 is defined by the generative AI models we all know and use today, capable of producing multimodal content through conversational prompting and iteration. They’re powerful, but far from perfect.

Stage 2 refers to reasoning AI models, which take a moment to “think” through a problem or scenario before responding. One such example of this is OpenAI’s o1 model (formerly known as Strawberry).

These systems are meant less for writing and design (like the Stage 1 models) and more for complex tasks in science, coding and math.

Stage 3: You are *here*

Stage 3 is where AI agents enter the picture. It’s also where the industry seems to be right now, with companies like Salesforce, HubSpot, Simbian and many others having launched their own agentic tools in the last few months. In fact, just yesterday, Anthropic announced “Computer Use,” a capability that lets its Claude 3.5 Sonnet model look at a computer’s screen, move the cursor, click buttons and even type text, just as humans do. Though still rudimentary, this development is a first for the industry.

For companies that haven’t released theirs yet, it’s where they’re headed next. Earlier this week, Microsoft announced that the ability to create autonomous agents will be available within Copilot Studio in the coming month. And Sam Altman, the CEO of OpenAI, predicts OpenAI’s agents are on target to launch in 2025. Both companies see agents as a way to scale teams and capabilities beyond reasoning through ideas to performing independent actions.

Altman’s—and others’—greatest concerns? Ensuring these agentic systems don’t go rogue, while allowing their teams to capitalize on the endless possibilities of incorporating agents.

Agentic AI: Real-world applications

As long as implementation is done thoughtfully and strategically, the possible applications of agentic AI will be endless.

- Customer support: Handling high volumes of inquiries, improving self-service, automating routine tasks and raising customer satisfaction.

- Sales and marketing: Driving personalized customer engagement through targeted campaigns and streamlined communication channels powered by AI insights.

- E-commerce: Optimizing shopping experiences with AI-driven recommendations and customer insights.

- Trading: Automating market analysis and decision-making processes.

- Coding and software development: Automating repetitive coding tasks, enabling developers to concentrate on complex challenges.

- Vehicles: Enhancing autonomous driving capabilities and improving vehicle safety.

- Manufacturing: Streamlining production processes and predictive maintenance, reducing downtime and ensuring efficient resource management.

Stages 4 and 5: The road ahead

Stage 4 refers to AI systems that can aid in invention. You could argue that GPTs are already helping in this way through ideation and concepts, but “Inventors” will specifically be able to help “prototype, build and manufacture physical products.”

Finally, Stage 5 is when AI becomes advanced enough to work on behalf of an entire organization. Dario Amodei, CEO of Anthropic, calls this “powerful AI.”

It’s what several others refer to as “artificial general intelligence” (AGI): AI that can perform tasks at a level that matches or surpasses human abilities. However, predictions for the arrival of AGI are scattered, with some saying it could come as early as 2028 and others not until 2045.

The seemingly endless attack surface of agentic AI

I understand if that all feels a bit too Black Mirror for you, but AGI is still years off. The more pressing concern is that agentic AI is here now, and you may be wondering how to secure your enterprise while taking advantage of such a powerful step up in AI.

After all, we can’t talk about agentic AI without talking about its dangers.

AI agents fundamentally transform how AI interacts with its environment. They aren’t just accessing data; they’re acting on it, reaching into applications, networks, services and APIs. Along the way, they make decisions and trigger actions, expanding the AI threat surface in unprecedented ways.

The task chains of AI systems can be small, or they can take on a massive scale. The larger the system (or fleet of systems), the more potential entry points for malicious attackers or more opportunities for the system to misbehave.

That’s why companies need machine identities, so human operators can track which agentic systems are accessing which repositories, triggering certain actions or potentially operating outside their parameters. Without oversight of the agent’s task chain, enterprises are at risk of data exfiltration, denial of service, unauthorized access and even supply chain attacks.
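The kind of tracking described here can be sketched as an audit trail keyed by machine identity. This is a minimal Python illustration under stated assumptions: the agent IDs, resource names and the per-agent policy format are hypothetical, and a real deployment would derive identities from issued credentials rather than plain strings.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class AuditEvent:
    agent_id: str  # machine identity of the acting agent (hypothetical naming)
    action: str    # what the agent did, e.g. "read" or "write"
    resource: str  # the repository, service or API it touched


class TaskChainAuditor:
    """Hypothetical audit trail for agent task chains."""

    def __init__(self, policy):
        # policy: agent_id -> set of resources that agent is allowed to touch
        self.policy = policy
        self.events = []
        self.violations = []

    def record(self, agent_id, action, resource):
        event = AuditEvent(agent_id, action, resource)
        self.events.append(event)
        # An agent missing from the policy gets an empty allow-list,
        # so every action it takes is treated as out of bounds.
        if resource not in self.policy.get(agent_id, set()):
            self.violations.append(event)
        return event

    def resources_by_agent(self):
        # Answers "which agent accessed which repositories?" from the trail.
        touched = defaultdict(set)
        for event in self.events:
            touched[event.agent_id].add(event.resource)
        return dict(touched)
```

Given a policy of `{"agent-billing": {"invoices-db"}}`, a recorded write by `agent-billing` to `payroll-db` lands in `violations`, which is the signal an operator or an automated response would act on. The sketch checks resources only; a fuller policy would also restrict which actions are allowed on each resource.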

How to mitigate the dangers of AI agents

“If you think about where we are with generative AI … it’s not only helping businesses automate their processes faster, it’s generating content, generating code, and all the signs point to a near future in which it will be generating actual business actions.” – Kevin Bocek, Chief Innovation Officer at Venafi, a CyberArk Company

Fortunately, like any AI system, AI agents are machines composed of code, and they can be protected through machine-to-machine authentication, which is enabled by automated machine identity security.

Given how widely AI agents can be applied, human teams can’t keep up with the scale, but an automated solution can. It can also act as a rapid-response kill switch for erratic, rogue or otherwise misbehaving AI models.

How does machine identity security work? It’s the discovery, orchestration and protection of machine identities, helping you authenticate, authorize and, when necessary, deactivate individual AI systems or entire fleets of them, which matters more as agentic AI becomes commonplace.
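As a rough sketch of authenticate, authorize and deactivate, the hypothetical registry below issues each agent its own key, checks signed requests against an approved action list and offers a kill switch for one agent or a whole fleet. It is an illustration only, not Venafi’s product or API: shared-secret HMAC from Python’s standard `hmac` module stands in for the certificates and workload identities that production machine identity security would typically rely on.

```python
import hashlib
import hmac
import secrets


class MachineIdentityRegistry:
    """Hypothetical identity registry for AI agents.

    Shared-secret HMAC stands in here for the X.509 certificates or other
    workload identities a production system would more likely use.
    """

    def __init__(self):
        self._keys = {}        # agent_id -> secret key issued at enrollment
        self._fleet = {}       # agent_id -> fleet the agent belongs to
        self._scopes = {}      # agent_id -> set of approved actions
        self._revoked = set()  # agent_ids that have been deactivated

    def register(self, agent_id, fleet, scopes):
        # Enrollment: issue a fresh random key and record what the agent may do.
        key = secrets.token_bytes(32)
        self._keys[agent_id] = key
        self._fleet[agent_id] = fleet
        self._scopes[agent_id] = set(scopes)
        return key

    @staticmethod
    def sign(key, action, payload):
        # The agent signs each request. The action name is part of the signed
        # bytes (assuming action names contain no ":"), so a signature for one
        # action can't be reused for another.
        message = f"{action}:{payload}".encode()
        return hmac.new(key, message, hashlib.sha256).hexdigest()

    def authorize(self, agent_id, action, payload, signature):
        key = self._keys.get(agent_id)
        if key is None or agent_id in self._revoked:
            return False  # unknown or deactivated identity
        # Authenticate: constant-time check that the agent holds the right key.
        if not hmac.compare_digest(self.sign(key, action, payload), signature):
            return False
        # Authorize: is this particular action approved for this agent?
        return action in self._scopes[agent_id]

    def deactivate(self, agent_id):
        # Kill switch for a single misbehaving agent.
        self._revoked.add(agent_id)

    def deactivate_fleet(self, fleet):
        # Kill switch for every agent enrolled in the given fleet.
        self._revoked.update(a for a, f in self._fleet.items() if f == fleet)
```

An agent registered with `{"read_ticket"}` can read tickets but is refused `delete_ticket` even with a valid signature, and one `deactivate_fleet` call shuts off every agent in that fleet at once. The sketch omits timestamps and nonces, so an identical request could be replayed; a production scheme would add them.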

Rely on Venafi to protect your AI systems

While agentic AI presents several benefits to today’s enterprises, and innovation is only going to accelerate from here, there are still several risks to consider. Machine-to-machine authentication is the best way to ensure only trusted agents access your data and systems, and execute only approved actions.

Venafi’s Control Plane for Machine Identities delivers the most comprehensive solutions for monitoring and securing the machine identities that govern those connections, and it’s perfectly suited for even the most intricate AI systems.

By offering centralized visibility and automation, Venafi empowers your team to manage every type of machine identity, and our “Stop Unauthorized Code” solution effectively protects against malicious code execution in any operating environment.
