In this article, I give an in-depth overview of service meshes, covering both the great benefits they offer and the extra complexity they bring.

What is a service mesh, and what are Kubernetes service meshes?

It’s a simple question, yet it is embarrassingly hard to give a simple answer. This is because service meshes evolved in response to about a dozen networking, security and operational challenges that are encountered when deploying microservices at the “web scale” of Netflix, Lyft, Twitter or Google.

The catchword “Service Mesh” has been on every CTO’s radar since the launch of Linkerd v0.1.0 and Istio v0.1 (~2016-2017). The people at Buoyant, the creators of Linkerd, coined the term. In their own words, the term has “taken a life of its own”.

A more accurate but very dull description would be that a service mesh is a set of “common operational utility patterns for microservices, implemented through traffic interception and shaping”. This is certainly not as catchy or succinct as “Service Mesh”!

A service mesh can be used to collect “golden signals” for your services, configure automatic retries, set timeouts, shape traffic, enforce authN/authZ and enable automatic mTLS to improve your security posture.

Every request flow is typically intercepted and handled by a reverse proxy which is usually attached as a sidecar to each Kubernetes microservice that chooses to join “the mesh”.

Note: The sidecar model has its drawbacks and has led to other deployment strategies for mesh products, such as the Ambient service mesh mode for Istio, which was announced late last year. Despite the introduction of ambient mode, sidecars are here to stay. They will interoperate with the ambient mesh's per-node default deployment, so it is still worthwhile to understand the reverse proxy sidecar model described below.

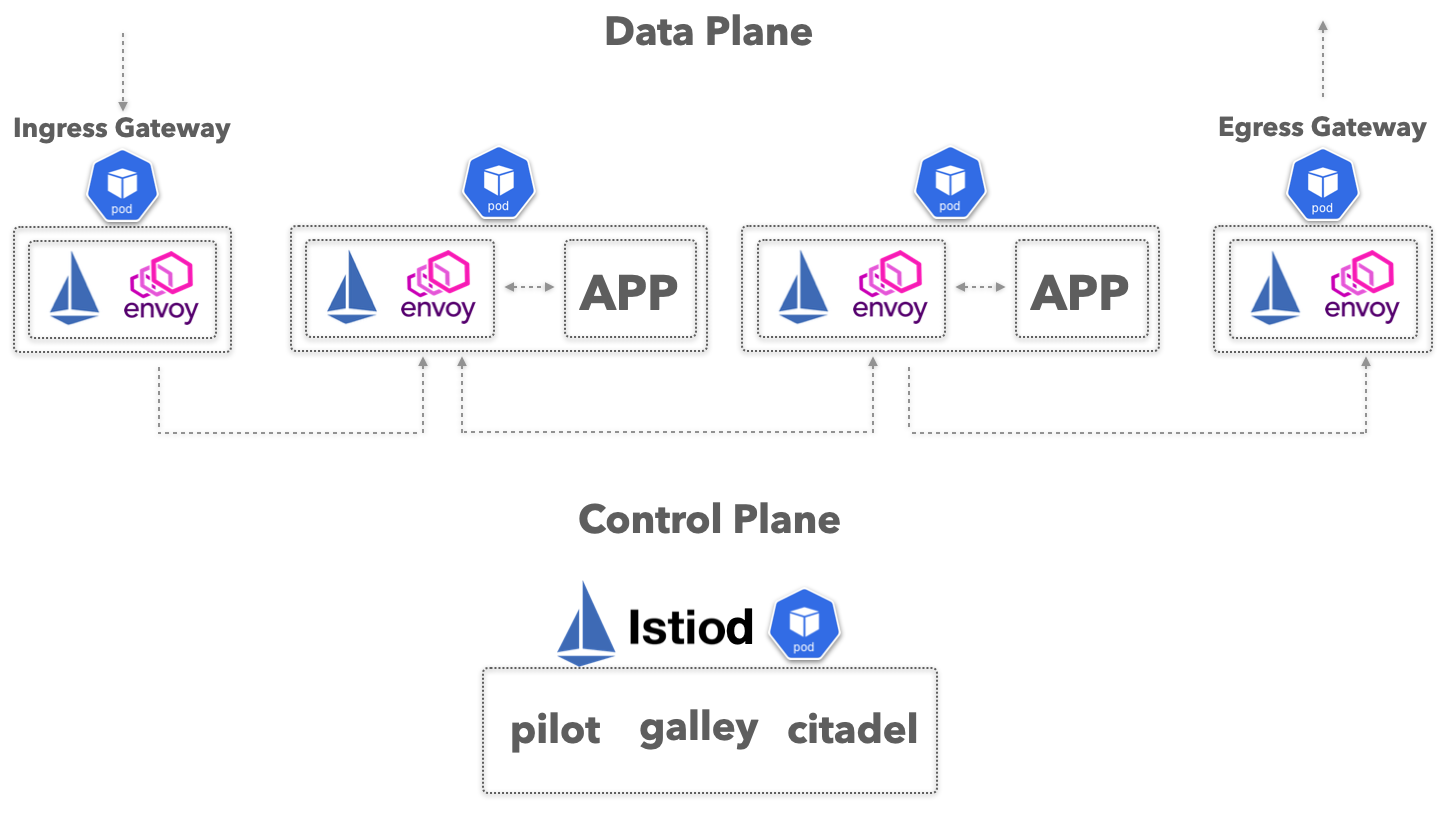

The reverse proxies are controlled by a central “control plane” which discovers all services in your cluster and pushes configuration down to the “data plane”. This configuration allows all services to “mesh”, or communicate with each other. We will cover this configuration in more detail shortly. In Istio, for example, the reverse proxy technology is Envoy, and you can see the traffic flow in the accompanying diagram.

For a container orchestration platform like Kubernetes, a service mesh adds a lot of sorely needed features that were previously implemented in a bespoke manner by platform or site reliability engineers (SREs) through custom tooling and code. Without a service mesh, these would be re-implemented over and over again by developers for each ecosystem, which is wasteful and slow. Service meshes can be a great help in turning Kubernetes into a developer platform, because Kubernetes on its own is often not enough. In the words of Kelsey Hightower:

“Kubernetes is a platform for building platforms. It’s a better place to start; not the endgame.”

“https://twitter.com/kelseyhightower/status/935252923721793536?lang=en-GB”

We will quickly go through the high-level function of the control plane and elaborate a little on the data plane setup with the Envoy proxy. With that understanding in place, we will summarize the benefits and downsides of a “Service Mesh” implementation.

Control plane quick overview

The central control plane acts as a mesh controller (or pilot) and service registry. It presents a Custom Resource Definition (CRD) interface, through which you can apply routing rules to the mesh. These rules are translated and distributed to the data plane proxies of the mesh.

For example, you can instruct the proxies to time out requests to a particular service after 5 seconds. Or you could live patch a particular host path to remove a particular request header before that request reaches your service(s).

In Istio the controller or “brain” is called Pilot. It communicates directly with the Envoy proxies to configure their routes and behaviour according to the configured Istio rules. The Envoy proxies living alongside each microservice are what we’ve been calling the data plane. The great power of Istio lies in leveraging the power and extensibility of the Envoy project itself, so what are the capabilities of this famous Envoy proxy?
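As a rough illustration of the two rules just described, the sketch below builds an Istio VirtualService manifest as a plain Python dictionary, with a 5 second timeout and a request header removal. The service name `reviews` and the header `x-debug-token` are hypothetical placeholders, and this is only one plausible way to express such a rule, not a recommended production configuration.

```python
import json


def virtual_service(name: str, host: str, timeout: str, drop_headers: list[str]) -> dict:
    """Build a VirtualService manifest as a plain dict (no cluster access needed)."""
    return {
        "apiVersion": "networking.istio.io/v1beta1",
        "kind": "VirtualService",
        "metadata": {"name": name},
        "spec": {
            "hosts": [host],
            "http": [
                {
                    # Strip these request headers before the request reaches the service.
                    "headers": {"request": {"remove": drop_headers}},
                    "route": [{"destination": {"host": host}}],
                    # Fail the request if no response arrives within this duration.
                    "timeout": timeout,
                }
            ],
        },
    }


# "reviews" and "x-debug-token" are hypothetical names used for illustration only.
manifest = virtual_service("reviews-timeout", "reviews", "5s", ["x-debug-token"])

if __name__ == "__main__":
    # kubectl accepts JSON as well as YAML, so this output could be piped to it.
    print(json.dumps(manifest, indent=2))
```

Generating the manifest from code like this is optional; the same structure can be written by hand as YAML. The point is that the rule lives in a custom resource rather than in application code.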

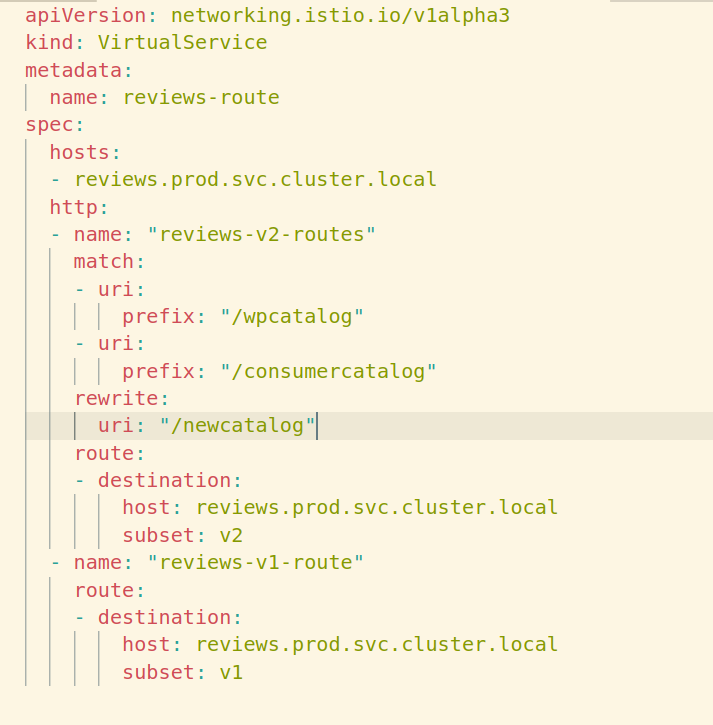

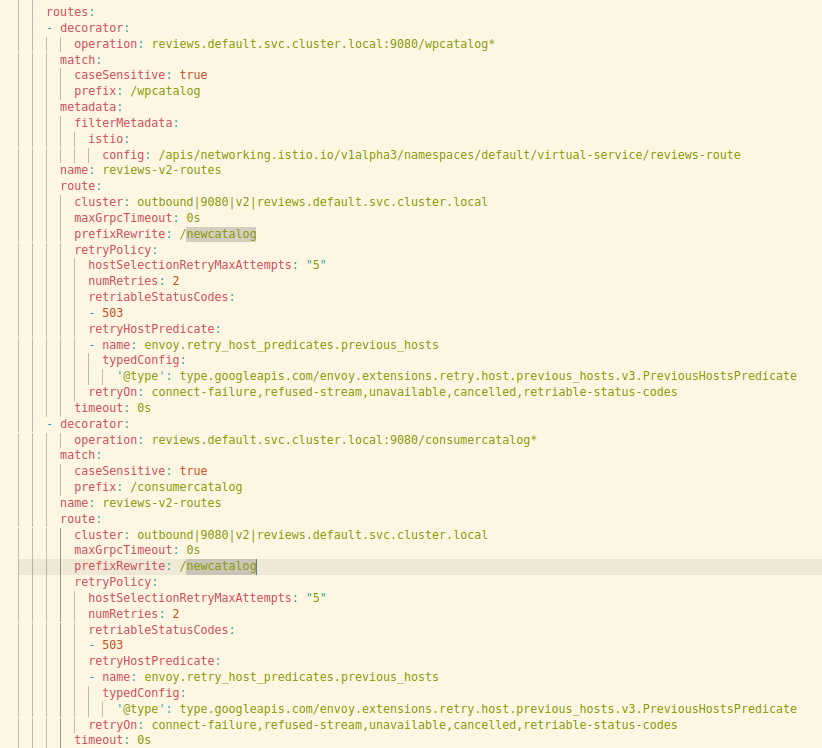

For example, a rewrite for a particular path on a VirtualService is translated into a corresponding Envoy config:

In the above, we can see how the VirtualService rewrite from /wpcatalog and /consumercatalog got transformed into multiple match rules with a rewrite in Envoy speak. The code on the right has been retrieved from a sidecar proxy’s Envoy “routes” configuration. The Envoy proxy got this configuration pushed to it by the Pilot.
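To make the shape of that translation concrete, here is a heavily simplified sketch of how one VirtualService rule with several prefix matches could fan out into one Envoy-style route per match. It is not Pilot's actual algorithm: the destination host `catalog`, the rewrite target `/newcatalog`, the namespace and the port are all assumptions made for the example, and real Envoy routes carry many more fields.

```python
def to_envoy_routes(http_rule: dict, namespace: str = "default", port: int = 80) -> list[dict]:
    """Expand one VirtualService HTTP rule into one Envoy-style route per match."""
    dest_host = http_rule["route"][0]["destination"]["host"]
    # Istio names outbound clusters "outbound|<port>||<fully qualified host>".
    cluster = f"outbound|{port}||{dest_host}.{namespace}.svc.cluster.local"
    routes = []
    for match in http_rule["match"]:
        routes.append(
            {
                "match": {"prefix": match["uri"]["prefix"]},
                "route": {
                    "cluster": cluster,
                    # Envoy's name for rewriting the matched prefix.
                    "prefix_rewrite": http_rule["rewrite"]["uri"],
                },
            }
        )
    return routes


# One VirtualService rule with two prefixes and a single rewrite.
# The destination "catalog" and the target "/newcatalog" are hypothetical.
rule = {
    "match": [{"uri": {"prefix": "/wpcatalog"}}, {"uri": {"prefix": "/consumercatalog"}}],
    "rewrite": {"uri": "/newcatalog"},
    "route": [{"destination": {"host": "catalog"}}],
}

envoy_routes = to_envoy_routes(rule)

if __name__ == "__main__":
    for route in envoy_routes:
        print(route)
```

The useful takeaway when debugging is the fan-out: a single mesh-level rule can show up as several entries in a proxy's route dump.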

With the Istio training that we offer, we find it crucial to teach our clients the precise correspondence between mesh concepts like Gateway, VirtualService, ServiceEntry and DestinationRule and Envoy concepts such as Listeners, Routes and Clusters. This helps the client debug complex routing scenarios within their meshes.

History of Envoy, the most ubiquitous data plane (reverse proxy) used in service meshes

The Envoy proxy is the fundamental building block of many meshes. It was designed by engineers who carried over their learnings from Finagle, a JVM library used at Twitter that gives any RPC service using it, as a server or a client, a uniform set of patterns for observability and failover. General resilience patterns such as circuit breakers were very popular in the Java community, with the Netflix Hystrix library as one example. Any JVM library solution is naturally constrained to the Java ecosystem, and it is difficult to keep track of all the library dependencies needed to make these features work. Matt Klein (who had moved from Twitter to Lyft) used the ideas from Finagle to reduce toil and repetition for every ecosystem at Lyft by building a reverse proxy called Envoy. Envoy achieves the same capabilities at the network level, in an out-of-process, technology-agnostic manner that is not tied to the JVM.

The reverse proxy was also used as an ingress point at Lyft and was deployed on EC2 instances, so it was not tied to the Kubernetes ecosystem at the time.

Linkerd had its 0.1.0 release in 2016, and the release clearly mentions the Finagle influence. The early versions were built on top of Finagle and ran on the JVM; Linkerd 2.x later provided similar capabilities with its own Rust-based proxy. The founder, William Morgan, was also an engineer at Twitter when it had these JVM RPC frameworks, such as the Finagle middleware.

Then came Istio, launched by Google, Lyft and IBM, and initially focused on mTLS (first ever blog post link!). Istio built upon Envoy by controlling a fleet of proxies through a control plane. That control plane was deployed inside Kubernetes and offered a gradual way to onboard any service that wished to be part of “the mesh” and unlock its benefits.

Aside from the main contenders, a lot of the other meshes either use their own API gateway product as the data plane engine or are simply Envoy “drivers”, so to speak.

How the “data plane” is hooked up

For most meshes, traffic management happens through a proxy that runs as a sidecar to the main application, plus some kind of iptables redirection that intercepts all traffic and routes it through the proxy. This exploits the fact that containers in the same pod share a network namespace. The istio-proxy sidecar containers, which contain the Envoy server and the istio-agent, are what is called the “data plane” of the mesh. In Envoy-based meshes, the proxies obtain their configuration, including the addresses of the other mesh microservices’ proxies, through what Envoy calls “xDS” dynamic configuration, a gRPC API served by the control plane (which is why Pilot is called the control plane component).

Application pods can be marked for sidecar injection one at a time, through an admission control mechanism such as a MutatingWebhookConfiguration. A whole Namespace can also be targeted by labeling it as part of the mesh, which amends any new pods with the required sidecar configuration. It is also possible to do the injection without the webhook if needed. The fact that iptables is currently leveraged for redirection is an implementation detail: other promising ways of accelerating the proxies’ interception through eBPF are being developed and tested. This is not to be confused with completely new models of networking that perform service mesh capabilities purely in eBPF, like the Cilium mesh.
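The injection decision can be pictured with a small sketch. This is a toy model of what a mutating webhook does, not Istio's injector: it assumes the commonly used `istio-injection=enabled` namespace label and the `sidecar.istio.io/inject` pod annotation as the only inputs, and the sidecar definition (including its image name) is a hypothetical placeholder that omits almost everything the real injector adds.

```python
import copy

# Hypothetical, heavily trimmed sidecar definition; the real injector adds far more.
SIDECAR = {"name": "istio-proxy", "image": "example.invalid/proxy:tag"}


def should_inject(namespace_labels: dict, pod_annotations: dict) -> bool:
    """The namespace label opts in; a pod annotation can still opt out."""
    if pod_annotations.get("sidecar.istio.io/inject") == "false":
        return False
    return namespace_labels.get("istio-injection") == "enabled"


def mutate_pod(pod: dict, namespace_labels: dict) -> dict:
    """Return a copy of the pod with the sidecar appended when injection applies."""
    annotations = pod.get("metadata", {}).get("annotations", {})
    if not should_inject(namespace_labels, annotations):
        return pod
    patched = copy.deepcopy(pod)
    names = [c["name"] for c in patched["spec"]["containers"]]
    if SIDECAR["name"] not in names:  # idempotent: never inject twice
        patched["spec"]["containers"].append(dict(SIDECAR))
    return patched


if __name__ == "__main__":
    pod = {"metadata": {"name": "productpage"}, "spec": {"containers": [{"name": "app"}]}}
    meshed = mutate_pod(pod, {"istio-injection": "enabled"})
    print([c["name"] for c in meshed["spec"]["containers"]])
```

Because the mutation happens at admission time, only pods created after the namespace is labeled are affected, which matches the "any new pods" behaviour described above.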

In the above diagram, the sidecar container named “istio-proxy” is listening on port 15006 for inbound and 15001 for outbound traffic. This means any connection coming into the productpage application passes through port 15006, on which Envoy is listening. Outgoing calls from the productpage application first hop through the istio-proxy Envoy port 15001. If you’re not afraid of iptables you can try to fully understand the redirection here.

Another key element of the data plane is the mTLS identity management done by the istio-agent, which is another process inside the istio-proxy container. This process handles management tasks such as serving certificates to Envoy through its secret discovery service (SDS) and requesting certificates for mTLS using your PKI.

When rooted in your enterprise PKI using istio-csr and cert-manager, the robust Kubernetes service account attestation by the agent gives a good basis for an automated secure identity of all mesh pods. Using istio-csr is currently one of the best enterprise-grade ways to implement multi-cluster in Istio. We will cover istio-csr integration in a future blog post.


What are the most common use cases for adopting the mesh seen in the wild?

So we’ve explained the plumbing and how it’s wired together, and perhaps why it’s called a service “mesh”. I will now share which mesh features the companies we work with most commonly use with their microservices.

1 - Mutual TLS (mTLS)

The most common benefit that you get immediately once you set this up is the ability to have automatically provisioned mutual TLS authentication, meaning every connection inside your cluster is automatically encrypted and the identities of your pods are verified. This sometimes means that you have to make your applications deliberately use an HTTP or plain TCP connection to allow Istio to upgrade the connection to TLS/HTTPS.

This alone has got the attention of many companies with stricter security guidelines, as it improves the security posture towards the zero trust goal. The other popular zero trust mechanism that Istio provides is authorization policies, which are L7 aware and hence offer a richer set of constructs, touching upon all aspects of a request, to enact company policy. These can live alongside network policies or even replace them if your CNI does not provide Kubernetes NetworkPolicy support. Many other security controls are possible, such as JWT authentication and egress controls.
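The two mechanisms can be sketched as manifests built in Python, together with a toy evaluator that shows what “L7 aware” means in practice. The namespace, app label, service account and paths below are hypothetical, and the evaluator handles only exact matches on a single ALLOW policy, which is far simpler than Istio's real evaluation rules.

```python
def peer_authentication(namespace: str) -> dict:
    """Require mTLS for every workload in the namespace."""
    return {
        "apiVersion": "security.istio.io/v1beta1",
        "kind": "PeerAuthentication",
        "metadata": {"name": "default", "namespace": namespace},
        "spec": {"mtls": {"mode": "STRICT"}},
    }


def allow_policy(namespace: str, app: str, principal: str,
                 methods: list[str], paths: list[str]) -> dict:
    """Allow one caller identity to use specific methods and paths on one app."""
    return {
        "apiVersion": "security.istio.io/v1beta1",
        "kind": "AuthorizationPolicy",
        "metadata": {"name": f"{app}-allow", "namespace": namespace},
        "spec": {
            "selector": {"matchLabels": {"app": app}},
            "action": "ALLOW",
            "rules": [
                {
                    "from": [{"source": {"principals": [principal]}}],
                    "to": [{"operation": {"methods": methods, "paths": paths}}],
                }
            ],
        },
    }


def is_allowed(policy: dict, principal: str, method: str, path: str) -> bool:
    """Toy evaluator: exact matches only, no wildcards, a single ALLOW policy."""
    for rule in policy["spec"]["rules"]:
        sources = [p for f in rule["from"] for p in f["source"]["principals"]]
        ops = [t["operation"] for t in rule["to"]]
        if principal in sources and any(
            method in op["methods"] and path in op["paths"] for op in ops
        ):
            return True
    return False


# Hypothetical identities: the caller is the "productpage" service account.
CALLER = "cluster.local/ns/default/sa/productpage"
strict_mtls = peer_authentication("default")
policy = allow_policy("default", "reviews", CALLER, ["GET"], ["/reviews"])

if __name__ == "__main__":
    print(is_allowed(policy, CALLER, "GET", "/reviews"))
    print(is_allowed(policy, CALLER, "DELETE", "/reviews"))
```

The caller identity here is derived from the workload's mTLS certificate, which is why authorization policies and automatic mTLS are usually adopted together.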

2 - Metrics & Tracing

The next big advantage is automatic monitoring of high-level service indicators. Previously, this would be written as a Prometheus middleware library inside each server; now it is possible without any code, because the mesh sees every request that flows through it. The mesh effectively becomes that middleware by surfacing the common metrics associated with service communication. Development teams now have a great baseline to monitor their service without building custom metrics and libraries and carrying that maintenance overhead. On the observability front, most meshes integrate with a wide array of tracing engines such as Jaeger and OpenTelemetry. Tracing requires only a little adaptation by application teams to forward certain headers. This then exposes coarse but useful trace data to a tracing platform.
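As a sketch of what such a baseline contains, the snippet below derives three of the golden signals (traffic, errors and latency) from a list of request records of the kind a proxy observes. The record shape and the sample values are invented for illustration; a real mesh exports these as metrics rather than computing them in application code, and the fourth signal, saturation, needs resource data that a proxy does not see.

```python
import math
from dataclasses import dataclass


@dataclass
class Request:
    latency_ms: float
    status: int


def percentile(values: list[float], pct: float) -> float:
    """Nearest-rank percentile; pct is in (0, 100]."""
    ordered = sorted(values)
    rank = max(1, math.ceil(pct / 100 * len(ordered)))
    return ordered[rank - 1]


def golden_signals(requests: list[Request], window_s: float) -> dict:
    """Traffic, error rate and latency percentiles for one time window."""
    if not requests:
        raise ValueError("need at least one request")
    latencies = [r.latency_ms for r in requests]
    errors = sum(1 for r in requests if r.status >= 500)
    return {
        "traffic_rps": len(requests) / window_s,
        "error_rate": errors / len(requests),
        "latency_p50_ms": percentile(latencies, 50),
        "latency_p99_ms": percentile(latencies, 99),
    }


# Ten made-up requests observed over a five second window.
sample = [
    Request(latency, status)
    for latency, status in zip(
        [10, 12, 11, 250, 13, 9, 14, 10, 12, 11],
        [200, 200, 200, 503, 200, 200, 200, 200, 200, 200],
    )
]
signals = golden_signals(sample, window_s=5.0)

if __name__ == "__main__":
    print(signals)
```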

3 - Advanced Deployment Strategies

By having the ability to change traffic flow at the connection or request level, service meshes allow for advanced traffic shaping like automatic retries, timeouts or canary traffic splitting. These features can be leveraged directly by teams, or they can be used by Continuous Delivery (CD) tooling to create more nimble continuous release processes. Two popular open-source tools for this use case are Flagger and Argo Rollouts.

The possibilities are almost endless. If the Envoy configuration doesn’t allow you to do what you want natively, there are many extensibility points in a mesh like Istio. For example, you can add your own WebAssembly modules to process the request.
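Canary traffic splitting is the easiest of these to picture. The sketch below builds a weighted route list in the shape used by a VirtualService and simulates how requests would be distributed. The host `reviews` and the subset names `stable` and `canary` are hypothetical (in Istio, subsets would be defined in a DestinationRule), and the random picker only approximates what a proxy does.

```python
import random
from collections import Counter


def canary_routes(host: str, canary_weight: int) -> list[dict]:
    """Weighted route list in the shape of a VirtualService `http[].route`."""
    if not 0 <= canary_weight <= 100:
        raise ValueError("canary_weight must be between 0 and 100")
    return [
        {"destination": {"host": host, "subset": "stable"}, "weight": 100 - canary_weight},
        {"destination": {"host": host, "subset": "canary"}, "weight": canary_weight},
    ]


def pick_subset(routes: list[dict], rng: random.Random) -> str:
    """Choose a destination for one request in proportion to the weights."""
    subsets = [r["destination"]["subset"] for r in routes]
    weights = [r["weight"] for r in routes]
    return rng.choices(subsets, weights=weights, k=1)[0]


# Send 10% of traffic to the canary and simulate 10,000 requests.
rng = random.Random(42)
routes = canary_routes("reviews", canary_weight=10)
counts = Counter(pick_subset(routes, rng) for _ in range(10_000))

if __name__ == "__main__":
    print(counts)
```

A CD tool automates the loop around this: it raises `canary_weight` in steps while watching the canary's metrics, and sets it back to zero if they degrade.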

4 - Multiple Cluster Meshes

A lot of our clients are trialling the multi-cluster support from Istio, especially the multi-primary, multi-network model, as it can be used to merge non-peered clusters across clouds. This is usually pretty complex, and only the most mature teams using Istio have managed to get it to work properly. We have a lot of experience with this and can help with getting your company up and running on this front.

Warning: here be dragons

Beware that we’ve only scratched the surface in terms of complexity. It was already tricky to explain the mesh operationally at a high level, but be prepared to:

- Spend some time learning the mountain of CRDs Istio introduces

- Navigate some tricky documentation and concepts like VirtualService and DestinationRule

- Deal with quirks or functionality shifts, as Istio in particular has changed a lot since its first releases

The sad situation is that any complex enough abstraction will be leaky for any non-trivial requirements. Application teams are often forced down the path of understanding Istio “inside out”, including parts of the Envoy configuration, which adds to the learning curve. This is why we have to go quite in-depth even to introduce the correct initial notions of what’s going on in the network when Istio is deployed.

It’s only worth it if you’re going to be heavily leveraging the existing functions or if you are ready to have some dedicated service mesh experts on the team. It gets even more cumbersome to manage if you have loads of pods, as you have to be prepared to optimize the memory usage. I am afraid the earlier statement about Istio was also a lie:

“Istio is a platform for building your developer platform. It’s a much better place to start; if you can handle the complexity, but still not the endgame.” Houssem El Fekih 😛

Linkerd could help tame the complexity here depending on desired features, as it has fewer CRDs and concepts to learn at least.

Linkerd 2.0 boasts that it is a more user-friendly mesh that is not an all-or-nothing proposition and allows incremental adoption. It also clearly states that it tries to cater to service owners instead of platform engineers, which in my opinion is a fair criticism of the complexity of Istio. Nevertheless, keep in mind that you get less out of the box: for example, Linkerd does not do ingress or egress management and has a single, but excellent, adaptive load balancing algorithm.

Any of these meshes that rely on a reverse proxy per application incurs a small latency penalty, in the range of tens of milliseconds at most, which might make them less desirable for ultra-low latency applications.

Watch this space

Over the next few months, I’ll be publishing a few more posts on how service mesh technologies can benefit various organizations. These technologies are maturing, but there is a big knowledge gap and lots of details to tune when doing anything at a higher scale or with stricter security guarantees. At Jetstack we’ve developed a bespoke identity solution called istio-csr, a drop-in replacement for Citadel that suits more hardened configurations rooted in your enterprise PKI. I’ll be posting about problems we saw in Istio at scale, and how istio-csr can be used to implement a multi-cluster setup for your organization, among other things.

We are also watching the wider ecosystem of service meshes and building experience in Linkerd, and will be sharing back any knowledge on that front as well. We’re very interested in Linkerd’s simplicity and, since the v2.2 release, no longer have any reservations about its mTLS support, which used to be permissive only but now has a strict mode.

So check back here now and then for the latest updates. Alternatively, if you and your organization need help exploring the benefits of Istio, Linkerd or any other service mesh, we’re always happy to help. We provide training, consulting and a Kubernetes subscription service. We also offer Istio training with the possibility of custom-tailored workshops to suit our various clients' needs.