TL;DR

- DNS-based GKE endpoints change how public and private control planes can be accessed externally and internally in Google Cloud

- Private GKE endpoints with internal IPs can be accessed externally using the DNS-based endpoint, no longer requiring bastion hosts or VPNs for external connectivity

- Public IP-based GKE endpoints can be made more secure by routing external API Server requests through the DNS-based endpoint, which adds Cloud IAM authorization

- Other cloud providers’ managed Kubernetes services don’t provide external connectivity to private endpoints

- Use it now with gcloud or Terraform on new and existing GKE clusters

Strengthen Your Resolve

GKE clusters are traditionally reached through an IP-based endpoint, which can be exposed externally via a public IP address, internally via a private IP address, or both. This provides direct access to the API Server in the GKE cluster's control plane.

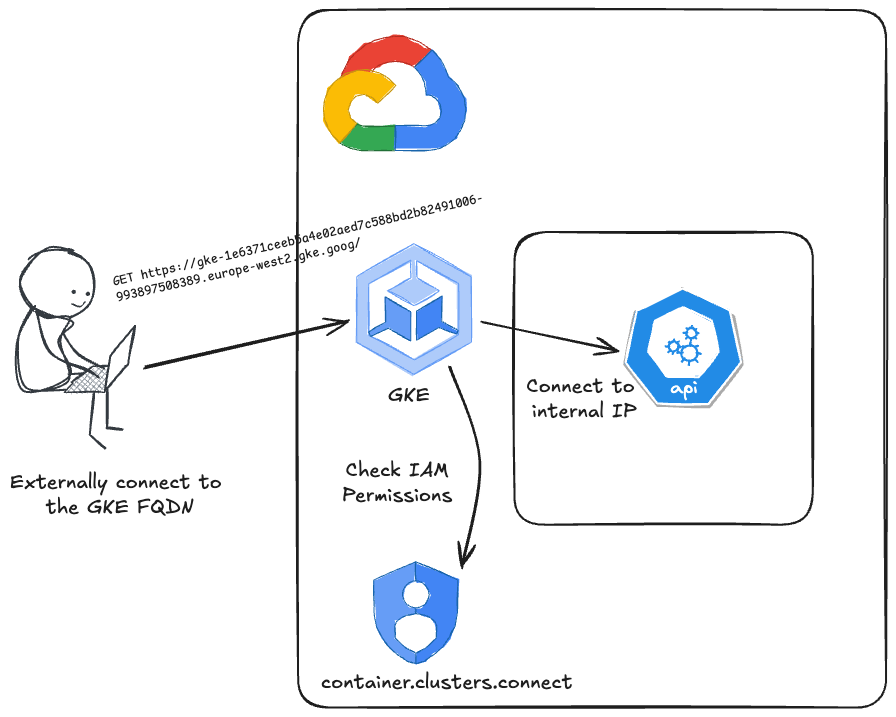

As an alternative approach to IP-based GKE endpoints, the GKE DNS-based endpoint provides access to the GKE cluster’s control plane using a cluster-unique fully qualified domain name (FQDN) instead of the IP address. This FQDN resolves to a Google Cloud IP address, not the GKE cluster’s IP endpoint. This is because the DNS endpoint is a Google Cloud service that receives requests before they are routed to the GKE endpoint. As such, this adds another layer of authorization through Google Cloud IAM and stops requests from being sent directly to the GKE control plane endpoint.

The DNS-based endpoint can be used with both public and private GKE endpoints. In either case, the FQDN resolves to a Google Cloud IP, and requests are then routed on to the GKE endpoint.
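As a quick illustration of the difference, the following Python sketch classifies the `server` URL from a kubeconfig entry as either a DNS-based endpoint or an internal or external IP-based endpoint. It assumes, based only on the examples in this post, that DNS-based endpoint hostnames end in `.gke.goog`. The function name is hypothetical, and the sample URLs are the ones that appear later in this post.

```python
import ipaddress
from urllib.parse import urlparse


def classify_endpoint(server_url: str) -> str:
    """Classify a kubeconfig server URL for a GKE control plane.

    Assumption: DNS-based GKE endpoints use hostnames ending in ".gke.goog",
    as in the examples in this post.
    """
    host = urlparse(server_url).hostname or ""
    try:
        ip = ipaddress.ip_address(host)
    except ValueError:
        # Not a literal IP address, so treat it as a hostname.
        return "dns" if host.endswith(".gke.goog") else "unknown-hostname"
    # Literal IP: private (e.g. RFC 1918) addresses are internal-only endpoints.
    return "ip-internal" if ip.is_private else "ip-external"


if __name__ == "__main__":
    for url in (
        "https://10.154.0.13",
        "https://34.39.0.129",
        "https://gke-bcac61d0464046afabb6ba0333de2d8b049a-993897508389.europe-west2.gke.goog",
    ):
        print(url, "->", classify_endpoint(url))
```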

When adhering to security best practices for GKE deployments, access to the control plane should be restricted by disabling the public endpoint. This significantly reduces the attack surface by limiting access to the GKE endpoint to private networks and specific IP ranges.

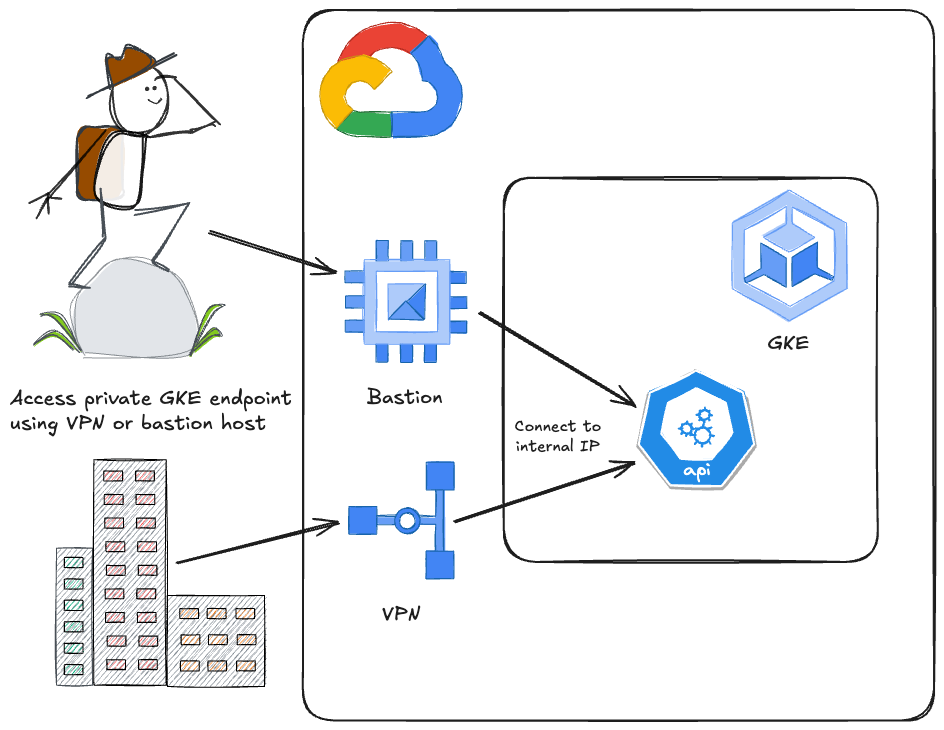

This leads to a long-standing architectural requirement: the private GKE endpoint must be reachable, either through a VPN, Direct/Partner Interconnect, or a bastion host. Providing this connectivity introduces additional infrastructure to maintain, and networking complexity to ensure that clusters are not exposed externally.

The crucial benefit of the DNS-based endpoint is that it allows private GKE endpoints to be reached externally, meaning GKE cluster endpoints that only have internal IP addresses can be accessed from outside the VPC network.

Previously, to access private GKE endpoints that only have internal IPs, bastion hosts or private networking were required in order to reach the control plane. Now, by using the FQDN, private GKE endpoints can be accessed externally through a Google Cloud API, removing the need for bastion hosts or other means of private connectivity to Google Cloud.

Pivotally, other cloud providers’ managed services for Kubernetes don’t offer the capability to securely access private cluster endpoints externally and require bastions or similar mechanisms to access the internal network. The point of difference for GKE DNS-based endpoints is that the private cluster endpoint can be accessed without having to connect to the internal network.

Access to clusters is often needed by administrators for maintenance, and by push-based CD pipelines, for example from GitHub Actions. In the CD case, publicly accessible self-hosted GitHub Runners must be deployed into a Google Cloud VPC (e.g. using terraform-google-github-actions-runners), which then have network connectivity to the private GKE endpoint. This incurs yet more operational overhead, infrastructure costs and potential attack vectors.

Removing this infrastructure and instead authenticating against Google Cloud APIs to access private GKE clusters ensures that these overheads are mitigated, similar to how using Google Cloud Identity-Aware Proxy for TCP tunneling removes the need for a bastion to SSH or RDP to private GCE instances.
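Here is a sketch of what this can look like in a CI job, such as a GitHub Actions workflow that has already authenticated to Google Cloud. The following Python helper builds the `gcloud container clusters get-credentials` command with the `--dns-endpoint` option shown later in this post, so no runner inside the VPC is involved. The helper names are hypothetical, and `gcloud` is assumed to be installed and authenticated.

```python
import shlex
import subprocess
from typing import List, Optional


def dns_credentials_command(cluster: str, location: str, project: Optional[str] = None) -> List[str]:
    """Build the gcloud argv that writes a kubeconfig entry using the DNS-based endpoint."""
    argv = [
        "gcloud", "container", "clusters", "get-credentials", cluster,
        "--dns-endpoint",
        "--location", location,
    ]
    if project:
        # --project is gcloud's standard global flag for selecting a project.
        argv += ["--project", project]
    return argv


def fetch_dns_credentials(cluster: str, location: str, project: Optional[str] = None) -> None:
    """Run gcloud; assumes it is installed and already authenticated (e.g. in a CI job)."""
    subprocess.run(dns_credentials_command(cluster, location, project), check=True)


if __name__ == "__main__":
    # Print the command instead of running it, so this is safe to try without gcloud.
    print(shlex.join(dns_credentials_command("example-auto-priv", "europe-west2")))
```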

To take a closer look at the benefits of the GKE DNS-based endpoint, we’ll see how using the FQDN adds an additional layer of authorization to requests sent to the GKE endpoint, as well as how this can change public and private IP-based endpoints.

Authorization

Requests sent to the public or private IP-based GKE endpoints are routed directly to the Kubernetes API Server in the cluster’s control plane. Access can be restricted to specific IP ranges by setting master authorized networks.
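Master authorized networks act as a CIDR allowlist in front of the IP-based endpoint. The Python sketch below models that check locally with the standard `ipaddress` module. It only illustrates the allowlist logic, not how GKE enforces it, and the ranges used are hypothetical documentation addresses.

```python
import ipaddress
from typing import List


def is_authorized(client_ip: str, authorized_cidrs: List[str]) -> bool:
    """Return True if client_ip falls inside any of the authorized CIDR blocks."""
    addr = ipaddress.ip_address(client_ip)
    # Membership across IPv4/IPv6 versions simply evaluates to False.
    return any(addr in ipaddress.ip_network(cidr) for cidr in authorized_cidrs)


if __name__ == "__main__":
    # Hypothetical allowlist: an office range and a single CI runner address.
    allowlist = ["203.0.113.0/24", "198.51.100.7/32"]
    print(is_authorized("203.0.113.42", allowlist))  # inside the office range
    print(is_authorized("192.0.2.10", allowlist))    # not in any range
```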

When using a DNS-based GKE endpoint, requests sent to the cluster’s FQDN are first routed through a Google Cloud API before reaching the Kubernetes API Server in the GKE cluster. By using the FQDN, Google Cloud can apply authorization checks to requests before they reach the GKE endpoint.

This allows access to be further restricted through IAM roles, or more specifically by granting the container.clusters.connect IAM permission. This permission can be granted to any type of principal: service accounts, users or groups.
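To see which principals hold a role that grants this permission, you can inspect the JSON from `gcloud projects get-iam-policy PROJECT_ID --format=json`. This Python sketch assumes the standard IAM policy shape (a `bindings` list of `role` and `members` entries). For simplicity, it only treats `roles/container.developer`, the role used in this post, as granting `container.clusters.connect`. Other predefined roles, custom roles and IAM conditions are not considered.

```python
import json
from typing import List, Set

# Assumption for this sketch: only the role used in this post is checked.
CONNECT_ROLES: Set[str] = {"roles/container.developer"}


def members_with_connect(policy: dict, roles: Set[str] = CONNECT_ROLES) -> List[str]:
    """List members bound to any of the given roles in an IAM policy document."""
    members: Set[str] = set()
    for binding in policy.get("bindings", []):
        if binding.get("role") in roles:
            members.update(binding.get("members", []))
    return sorted(members)


if __name__ == "__main__":
    # A trimmed, illustrative policy in the shape returned by
    # `gcloud projects get-iam-policy PROJECT_ID --format=json`.
    policy = json.loads("""
    {"bindings": [
      {"role": "roles/container.developer",
       "members": ["serviceAccount:gke-priv-access@jetstack-paul.iam.gserviceaccount.com"]},
      {"role": "roles/logging.viewer",
       "members": ["serviceAccount:gke-no-access@jetstack-paul.iam.gserviceaccount.com"]}
    ]}
    """)
    print(members_with_connect(policy))
```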

Let’s see how IAM can restrict access to the DNS-based GKE endpoint using service accounts and different IAM Roles.

First, grant the container.developer IAM role, which includes the container.clusters.connect IAM permission, to the gke-priv-access service account.

$ gcloud projects add-iam-policy-binding jetstack-paul --member=serviceAccount:gke-priv-access@jetstack-paul.iam.gserviceaccount.com --role=roles/container.developer --condition=None

To use the FQDN as the GKE endpoint, credentials are retrieved using the --dns-endpoint option.

$ gcloud container clusters get-credentials example-auto-priv --dns-endpoint --location europe-west2

As the gke-priv-access service account, we can now reach the cluster through the FQDN.

$ kubectl auth whoami -v=7

I1108 11:03:54.864830 54231 round_trippers.go:463] POST https://gke-1e6371ceeb5a4e02aed7c588bd2b82491006-993897508389.europe-west2.gke.goog/apis/authentication.k8s.io/v1/selfsubjectreviews

ATTRIBUTE VALUE

Username gke-priv-access@jetstack-paul.iam.gserviceaccount.com

Groups [system:authenticated]

$ kubectl cluster-info

Kubernetes control plane is running at https://gke-2411e22b5d664bcb91b94c08782e27265bb6-993897508389.europe-west2.gke.goog

However, the gke-no-access IAM service account, which doesn't have the container.clusters.connect permission, cannot access the private GKE endpoint using the FQDN.

$ kubectl cluster-info

E1108 11:09:58.905245 38574 memcache.go:265] "Unhandled Error" err="couldn't get current server API group list: request is rejected, status: \"Permission 'container.clusters.connect' denied on resource \"gke-1e6371ceeb5a4e02aed7c588bd2b82491006-993897508389.europe-west2.gke.goog\" (or it may not exist)\""

Using the DNS-based GKE endpoint adds a layer of security: requests must be authorized by IAM permissions before they reach the Kubernetes API Server in GKE, where RBAC is then applied. We'll see in a later section why this is a particularly important security benefit when using public IP-based GKE endpoints.
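When scripting against the DNS-based endpoint, it can help to tell an IAM rejection apart from an RBAC or network error. This Python sketch matches the rejection text shown above with a regular expression. The message format is taken from the output in this post and may change, so treat it as a heuristic rather than a stable API.

```python
import re
from typing import Optional, Tuple

# Heuristic pattern based on the rejection messages shown in this post. Quote
# escaping differs between kubectl's log output and curl, so the backslash is optional.
_IAM_DENIED = re.compile(
    r"Permission '(?P<permission>[\w.]+)' denied on resource "
    r'\\?"(?P<resource>[^"\\]+)'
)


def parse_iam_denial(message: str) -> Optional[Tuple[str, str]]:
    """Return (permission, resource) if the message looks like an IAM rejection."""
    match = _IAM_DENIED.search(message)
    if match is None:
        return None
    return match.group("permission"), match.group("resource")


if __name__ == "__main__":
    curl_output = (
        'request is rejected, status: "Permission \'container.clusters.connect\' '
        'denied on resource "gke-example.europe-west2.gke.goog" (or it may not exist)"'
    )
    print(parse_iam_denial(curl_output))
    print(parse_iam_denial("context deadline exceeded"))  # not an IAM rejection
```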

The DNS-based GKE endpoint provides an alternative to IP-based endpoints when connecting to GKE control planes. This offers significant security and architectural benefits for both public and private GKE cluster endpoints. By providing external access to private GKE endpoints, and also improving the security of public GKE endpoints, DNS-based endpoints could fundamentally change how we architect and deploy GKE and Google Cloud networking.

Let’s take a look at how DNS-based GKE endpoints can improve both private and public GKE cluster endpoints for greater security and reduced networking complexity.

Private GKE Endpoints

Following best practices for GKE security, IP-based GKE endpoints should be private, with an internal IP assigned to the Private endpoint that is routable within the private network. As this endpoint is not publicly accessible, a connection to the VPC network is required, which usually means bastion hosts or VPN connections.

Instead, when using the DNS-based GKE endpoint for private clusters, direct connectivity to the VPC network is not required, removing the need for bastion hosts or VPN connections.

When creating a GKE cluster with a private endpoint (or updating an existing cluster), the --enable-dns-access flag tells gcloud to create an FQDN for the cluster endpoint. The IP-based endpoint can still be either public or private; the benefit of a DNS-based endpoint is that a private endpoint can be reached externally. We will see how a private GKE cluster using the DNS-based endpoint allows external access, even though its IP-based endpoint only has an internal IP that is not routable from the internet.
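Because the same flag works on update, you can script enabling the DNS-based endpoint on an existing cluster. This Python sketch builds the `gcloud container clusters update` command with `--enable-dns-access` and only runs it when `dry_run` is false. The helper names are hypothetical, and `gcloud` is assumed to be installed and authenticated.

```python
import shlex
import subprocess
from typing import List


def enable_dns_access_command(cluster: str, location: str) -> List[str]:
    """Build the gcloud argv that enables the DNS-based endpoint on an existing cluster."""
    return [
        "gcloud", "container", "clusters", "update", cluster,
        "--enable-dns-access",
        "--location", location,
    ]


def enable_dns_access(cluster: str, location: str, dry_run: bool = True) -> List[str]:
    """Print the command, and execute it only when dry_run is False."""
    argv = enable_dns_access_command(cluster, location)
    print(shlex.join(argv))
    if not dry_run:
        subprocess.run(argv, check=True)
    return argv


if __name__ == "__main__":
    enable_dns_access("example-auto-priv", "europe-west2")  # dry run by default
```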

Here, we are creating a GKE Autopilot cluster with a private endpoint, but GKE Standard clusters can also use DNS-based endpoints.

gcloud container clusters create-auto example-auto-priv \

--enable-dns-access \

--enable-private-nodes \

--enable-private-endpoint \

--location europe-west2 \

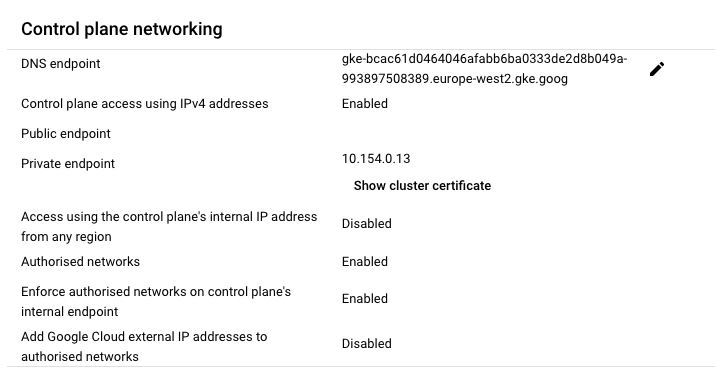

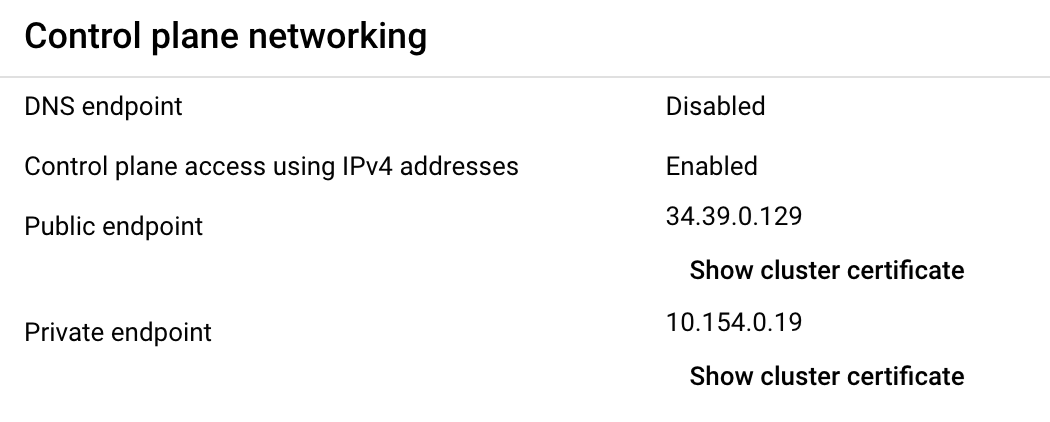

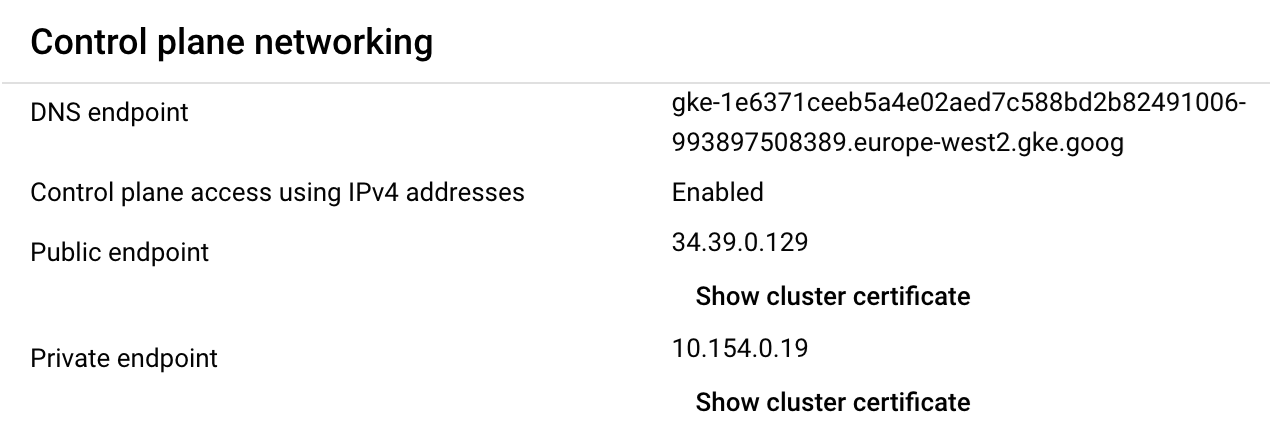

--enable-master-authorized-networksIn the console, the cluster shows that an FQDN has been created for the GKE endpoint, and there is no external IP for the Public endpoint, but there is an internal IP for the Private endpoint.

gcloud also shows that the endpoint has an internal IP and the FQDN has been created.

$ gcloud container clusters describe example-auto-priv --location europe-west2 --format="value(endpoint)"

10.154.0.13

$ gcloud container clusters describe example-auto-priv --location europe-west2 --format="value(controlPlaneEndpointsConfig.dnsEndpointConfig.endpoint)"

gke-bcac61d0464046afabb6ba0333de2d8b049a-993897508389.europe-west2.gke.goog

Looking at what the FQDN resolves to shows that it is a public Google Cloud IP address.

$ dig +short gke-bcac61d0464046afabb6ba0333de2d8b049a-993897508389.europe-west2.gke.goog A

216.239.32.27

When trying to access the endpoint externally with no private connectivity set up to Google Cloud, using the internal IP unsurprisingly does not work.

$ gcloud container clusters get-credentials example-auto-priv --location europe-west2

Fetching cluster endpoint and auth data.

kubeconfig entry generated for example-auto-priv.

$ kubectl config view -o jsonpath='{.clusters[?(@.name == "gke_jetstack-paul_europe-west2_example-auto-priv")].cluster.server}'

https://10.154.0.13

$ kubectl get ns --request-timeout 30s

E1105 13:00:42.252633 19320 memcache.go:265] "Unhandled Error" err="couldn't get current server API group list: Get \"https://10.154.0.13/api?timeout=30s\": context deadline exceeded (Client.Timeout exceeded while awaiting headers)"

Instead, retrieving credentials for the FQDN routes requests through Google Cloud, which allows external connectivity to a cluster whose endpoint only has an internal IP.

$ gcloud container clusters get-credentials example-auto-priv --dns-endpoint --location europe-west2

$ kubectl config view -o jsonpath='{.clusters[?(@.name == "gke_jetstack-paul_europe-west2_example-auto-priv")].cluster.server}'

https://gke-bcac61d0464046afabb6ba0333de2d8b049a-993897508389.europe-west2.gke.goog

$ kubectl cluster-info

Kubernetes control plane is running at https://gke-bcac61d0464046afabb6ba0333de2d8b049a-993897508389.europe-west2.gke.goog

This is a significant benefit to running private GKE clusters for several reasons. It removes the need for a network path into the private network, whether that's GitHub Runners hosted within Google Cloud that have private access to the internal GKE endpoint, or bastion hosts and VPNs that provide external access to the VPC.

Removing these networking components saves on operational overhead and infrastructure running costs, and mitigates an attack vector by removing a public route into the private network.
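The `kubectl config view` checks earlier in this section use a JSONPath filter to read a cluster's server URL. Below is a Python equivalent, useful in scripts that need to confirm a kubeconfig entry points at the FQDN rather than the internal IP. It relies on the standard kubeconfig layout (`clusters[].name` and `clusters[].cluster.server`). The helper names are hypothetical, and the `.gke.goog` suffix check is an assumption based on the FQDNs shown here.

```python
import json
import subprocess
from typing import Optional


def server_for_cluster(kubeconfig: dict, cluster_name: str) -> Optional[str]:
    """Return the API server URL of a named cluster entry in a kubeconfig dict."""
    for entry in kubeconfig.get("clusters", []):
        if entry.get("name") == cluster_name:
            return entry.get("cluster", {}).get("server")
    return None


def load_kubeconfig() -> dict:
    """Read the merged kubeconfig as JSON; assumes kubectl is on PATH."""
    result = subprocess.run(
        ["kubectl", "config", "view", "-o", "json"],
        check=True, capture_output=True, text=True,
    )
    return json.loads(result.stdout)


if __name__ == "__main__":
    # Inline sample so the sketch runs without kubectl; use load_kubeconfig() for real.
    name = "gke_jetstack-paul_europe-west2_example-auto-priv"
    sample = {"clusters": [{
        "name": name,
        "cluster": {"server": "https://gke-bcac61d0464046afabb6ba0333de2d8b049a-993897508389.europe-west2.gke.goog"},
    }]}
    server = server_for_cluster(sample, name)
    print(server, "uses DNS endpoint:", bool(server and server.endswith(".gke.goog")))
```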

Next, we'll see how DNS-based endpoints offer an alternative to externally accessible public GKE endpoints, enhancing security through greater access controls and authorization.

Public GKE Endpoints

As previously mentioned, the recommendation is to have the external GKE endpoint disabled and only use the internal endpoint. This might not be feasible for some users who don't have private connectivity to Google Cloud, and so make their clusters externally accessible. Doing so poses potential security risks, which we'll see can be mitigated by using a DNS-based endpoint.

When a GKE cluster with a public endpoint is created, a globally accessible external IP is assigned to the API Server. Access can be restricted by setting master authorized networks, which allowlist the IP ranges that can reach the cluster endpoint.

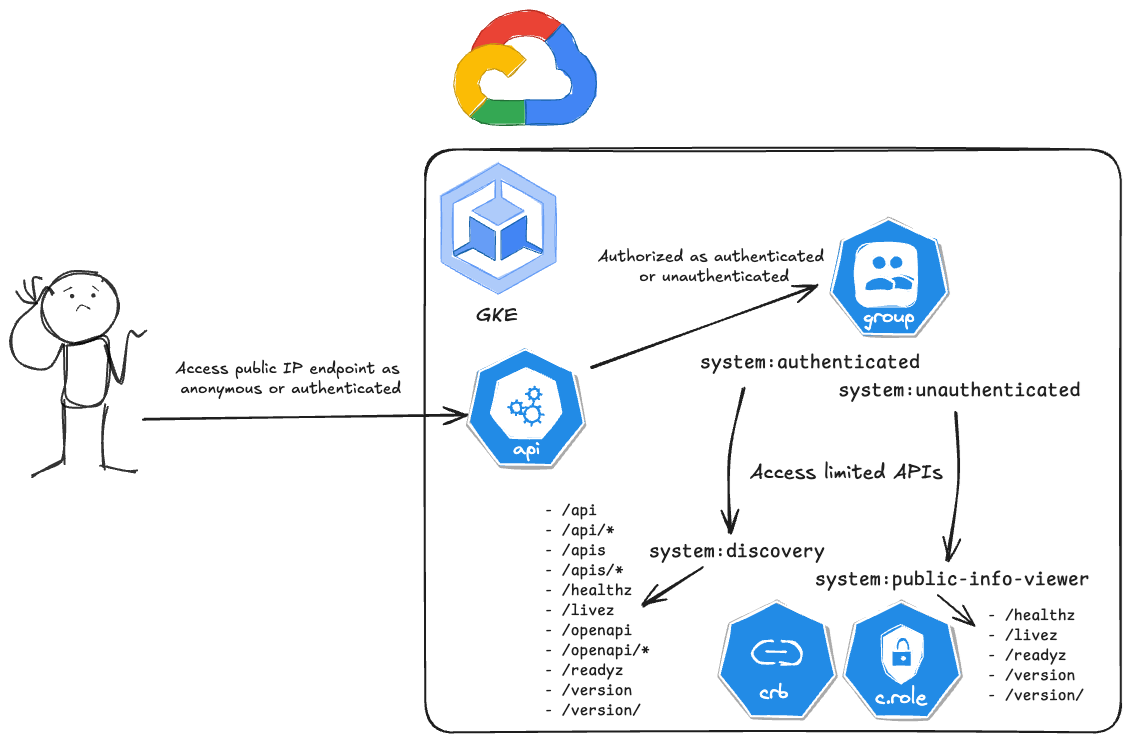

Requests to the external IP from permitted sources (or from anywhere, if access has not been restricted) are routed directly to the Kubernetes API Server in GKE. Once requests reach the API Server, authorization is then enforced using RBAC.

Despite RBAC being applied to API Server requests, Kubernetes allows requests from anonymous (unauthenticated) and authenticated users, which are mapped to different ClusterRoles and ClusterRoleBindings. Importantly in this context, an authenticated user is anyone authenticated by Google OAuth, and not necessarily part of your Google Cloud Organisation.

What this means is that anyone with access to the public cluster endpoint can send anonymous or authenticated requests and reach specific Kubernetes APIs. More on these ClusterRoles and ClusterRoleBindings can be found in the Kubernetes RBAC documentation on default roles and role bindings.
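As a rough model of those defaults, this Python sketch encodes the non-resource URLs that upstream Kubernetes grants through two ClusterRoles:

- `system:public-info-viewer`, bound to both `system:authenticated` and `system:unauthenticated`
- `system:discovery`, bound to `system:authenticated`

Given a path, it reports which groups can reach it. The URL lists reflect upstream defaults as we understand them and may differ between versions, or on clusters whose bindings have been changed. Check `kubectl get clusterrole system:discovery -o yaml` on a real cluster. Resource requests, such as listing namespaces, are out of scope.

```python
from typing import Dict, List, Set

# Assumed upstream defaults; verify against a real cluster before relying on them.
DEFAULT_ROLES: Dict[str, Dict[str, List[str]]] = {
    "system:public-info-viewer": {
        "groups": ["system:authenticated", "system:unauthenticated"],
        "urls": ["/healthz", "/livez", "/readyz", "/version", "/version/"],
    },
    "system:discovery": {
        "groups": ["system:authenticated"],
        "urls": ["/api", "/api/*", "/apis", "/apis/*", "/healthz", "/livez",
                 "/openapi", "/openapi/*", "/readyz", "/version", "/version/"],
    },
}


def url_matches(pattern: str, path: str) -> bool:
    """RBAC nonResourceURLs allow a trailing '*' as a prefix wildcard."""
    if pattern.endswith("*"):
        return path.startswith(pattern[:-1])
    return path == pattern


def groups_allowed(path: str) -> Set[str]:
    """Return the groups granted access to a non-resource path by the defaults above."""
    allowed: Set[str] = set()
    for role in DEFAULT_ROLES.values():
        if any(url_matches(p, path) for p in role["urls"]):
            allowed.update(role["groups"])
    return allowed


if __name__ == "__main__":
    for path in ("/version", "/apis", "/metrics"):
        print(path, sorted(groups_allowed(path)))
```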

Let’s take a look at how anonymous and authenticated users can access Kubernetes APIs using the IP-based external GKE endpoint, and then see how using a DNS-based endpoint can restrict access to improve the security of externally accessible clusters.

Firstly, our GKE cluster has both Public and Private endpoints enabled, and the DNS endpoint disabled. There are no restrictions on what IPs can access the Public or Private endpoints.

Using the Public endpoint, we can send anonymous (i.e. unauthenticated) requests to the cluster and access the /healthz and /version APIs.

$ curl -k "https://34.39.0.129/healthz?verbose"

[+]ping ok

[+]log ok

[+]etcd ok

...

healthz check passed

$ curl -k https://34.39.0.129/version

{

"major": "1",

"minor": "30",

"gitVersion": "v1.30.5-gke.1355000",

"gitCommit": "d16b6cc50798c61dc2740487abdaa656af180538",

"gitTreeState": "clean",

"buildDate": "2024-09-30T04:16:50Z",

"goVersion": "go1.22.6 X:boringcrypto",

"compiler": "gc",

"platform": "linux/amd64"

}

Sending an authenticated request (from outside the Google Cloud Organisation) grants access to the APIs included in the system:discovery ClusterRole.

$ kubectl auth whoami

ATTRIBUTE VALUE

Username paul@paulwilljones.dev

Groups [system:authenticated]

$ kubectl get --raw='/apis' -v=6 | jq -r .

I1107 13:17:18.183665 92132 round_trippers.go:553] GET https://34.39.0.129/apis 200 OK in 56 milliseconds

{

"kind": "APIGroupList",

"apiVersion": "v1",

"groups": [

{

"name": "apiregistration.k8s.io",

"versions": [

...

]

}

]

}

What this shows is that when Public endpoints are used for GKE, both authenticated and unauthenticated requests can reach the API Server and retrieve some information about the cluster. For some organisations, this could conflict with security and compliance requirements around public availability.
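To make the exposure concrete, this Python sketch parses a trimmed copy of the anonymous `/version` response above and summarises what it reveals. The field names come from that response; the helper name is hypothetical.

```python
import json

# Trimmed copy of the anonymous /version response shown above.
SAMPLE_VERSION = """{
  "major": "1",
  "minor": "30",
  "gitVersion": "v1.30.5-gke.1355000",
  "goVersion": "go1.22.6 X:boringcrypto",
  "platform": "linux/amd64"
}"""


def summarize_version(body: str) -> str:
    """Summarise what an unauthenticated /version request reveals about a cluster."""
    info = json.loads(body)
    return (
        f"Kubernetes {info['major']}.{info['minor']} "
        f"(build {info['gitVersion']}, {info.get('goVersion', 'unknown Go')}, "
        f"{info.get('platform', 'unknown platform')})"
    )


if __name__ == "__main__":
    print(summarize_version(SAMPLE_VERSION))
```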

As requests to DNS-based GKE endpoints are sent to Google Cloud APIs before reaching the Kubernetes API Server, an additional layer of authorization is added using Cloud IAM before RBAC is enforced in the cluster. This means that requests must carry the required Cloud IAM permissions, so anonymous requests, and authenticated requests from principals without those permissions, never reach the Kubernetes API Server.

Enabling the DNS endpoint for the cluster creates the FQDN that can be used to access the API Server.

Sending the same requests to the FQDN shows that the container.clusters.connect IAM permission is required: both the anonymous and the authenticated requests are rejected by the Google Cloud API and never reach the Kubernetes API Server.

$ curl -k https://gke-1e6371ceeb5a4e02aed7c588bd2b82491006-993897508389.europe-west2.gke.goog/version

request is rejected, status: "Permission 'container.clusters.connect' denied on resource "gke-1e6371ceeb5a4e02aed7c588bd2b82491006-993897508389.europe-west2.gke.goog" (or it may not exist)"

$ kubectl get --raw='/apis'

Error from server (Forbidden): request is rejected, status: "Permission 'container.clusters.connect' denied on resource "gke-1e6371ceeb5a4e02aed7c588bd2b82491006-993897508389.europe-west2.gke.goog" (or it may not exist)"

As we've seen, using the FQDN provides an additional layer of authorization in front of the Kubernetes API Server. However, if the Public endpoint still has an external IP, requests can be sent to that IP instead of the FQDN, circumventing the requirement for IAM permissions.
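One way to check whether an IP-based endpoint still offers a route around IAM is to send the same anonymous request to both the IP and the FQDN and compare the results. The Python sketch below does this with `urllib`. Like `curl -k` in the examples above, it skips TLS verification, which is only reasonable for a quick probe. The classification rules, which match on the permission name in the response body, are assumptions based on the responses shown in this post.

```python
import ssl
import sys
import urllib.error
import urllib.request
from typing import Tuple


def anonymous_get(url: str, timeout: float = 10.0) -> Tuple[int, str]:
    """Send an unauthenticated GET, skipping TLS verification like `curl -k`."""
    ctx = ssl.create_default_context()
    ctx.check_hostname = False  # must be disabled before setting CERT_NONE
    ctx.verify_mode = ssl.CERT_NONE
    try:
        with urllib.request.urlopen(url, timeout=timeout, context=ctx) as resp:
            return resp.status, resp.read().decode("utf-8", "replace")
    except urllib.error.HTTPError as err:
        # Non-2xx responses still carry a body worth inspecting.
        return err.code, err.read().decode("utf-8", "replace")


def classify(status: int, body: str) -> str:
    """Interpret a probe result; heuristic based on the responses shown in this post."""
    if "container.clusters.connect" in body:
        return "blocked by IAM before reaching the API server"
    if status == 200:
        return "reachable without credentials"
    return f"rejected (HTTP {status})"


if __name__ == "__main__":
    # Pass endpoint URLs as arguments, e.g. the public IP and the FQDN of one cluster.
    for url in sys.argv[1:]:
        try:
            status, body = anonymous_get(url)
            print(url, "->", classify(status, body))
        except OSError as err:  # DNS failures, timeouts, refused connections
            print(url, "-> unreachable:", err)
```

For example, run `python probe.py https://34.39.0.129/version https://<cluster-fqdn>/version`, where `probe.py` is whatever name the script is saved under.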

Fundamentally, this raises the question of why a Public IP-based endpoint is needed at all if external requests can be sent to the DNS endpoint.

Switching clients from the public IP to the FQDN will likely require configuration changes, but from the cluster's perspective, enabling the DNS endpoint can be done in place on existing clusters.

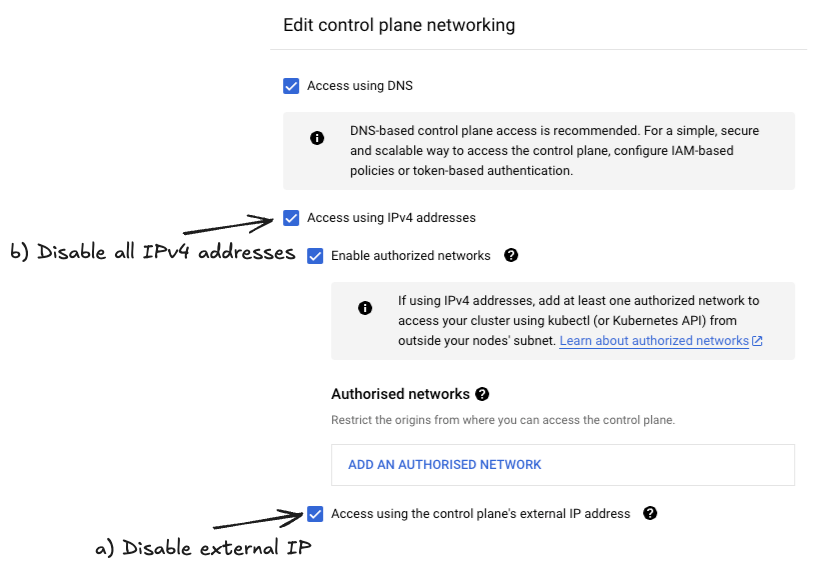

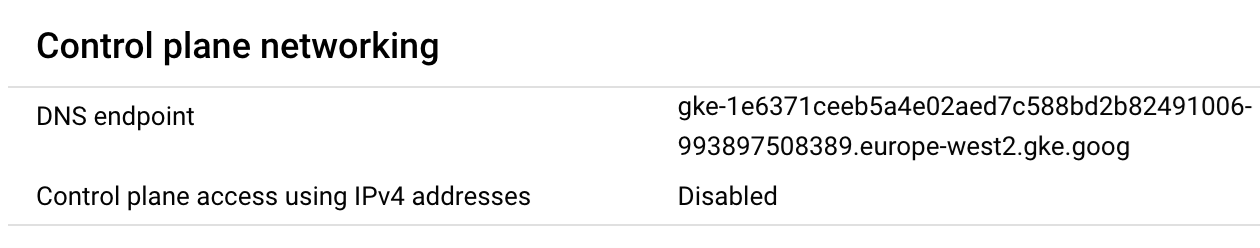

If a Public IP-based endpoint is deemed superfluous and a DNS endpoint is sufficient for external connectivity, there are two ways of changing existing IP-based GKE clusters. Either the external IP can be removed, making the IP-based endpoint internal only, or all IPv4 addresses (external and internal) can be removed, making the FQDN the only way to route requests to the GKE control plane.

When using public IP-based GKE endpoints, care must be taken to restrict direct external access to the GKE control plane. The DNS-based GKE endpoint similarly provides external access, but routes to a Google Cloud IP first and authorizes requests before they reach the control plane in GKE.

By using the DNS-based endpoint, public IP-based endpoints can either be removed or restricted even further to reduce the attack surface for external users.

Terraform

To create a GKE cluster with a DNS-based endpoint in Terraform, add the control_plane_endpoints_config block and set allow_external_traffic in its dns_endpoint_config block.

terraform {

required_providers {

google = {

source = "hashicorp/google"

version = "6.11.0"

}

}

}

resource "google_container_cluster" "dns_private" {

name = "gke-priv-dns"

enable_autopilot = true

control_plane_endpoints_config {

dns_endpoint_config {

allow_external_traffic = true

}

}

master_authorized_networks_config {}

private_cluster_config {

enable_private_nodes = true

enable_private_endpoint = true

}

}

Adding control_plane_endpoints_config.dns_endpoint_config.allow_external_traffic is a non-destructive change, so it can be applied to existing clusters.

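The FQDN is exposed as google_container_cluster.dns_private.control_plane_endpoints_config[0].dns_endpoint_config[0].endpoint, which can then be used to access the cluster. The top-level endpoint attribute holds the IP-based endpoint instead.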

DNS-based GKE endpoints offer an alternative to IP-based endpoints when accessing the GKE control plane externally or internally. Regardless of whether the use case requires public or private connectivity to the GKE endpoint, DNS-based endpoints offer a more secure and less complex method for connecting to the GKE control plane.

Wrap Up

Having to invest in and maintain the infrastructure needed for connectivity to private resources creates operational overhead, and public instances such as bastion hosts and self-hosted GitHub Runners increase the attack surface.

GKE DNS-based endpoints provide access to private clusters without the need for private network connectivity. IAM permissions, alongside IP-based restrictions, control external access to private clusters through Google Cloud APIs, whilst maintaining best practices for the security posture of GKE deployments.

The value proposition of GKE’s connectivity features alongside the wider portfolio of Identity-Aware Proxy services opens up new opportunities for architecting cloud-native solutions that decommission the legacy approach to perimeter-based security.

At Jetstack Consult, we often advise our customers on how to leverage Google Cloud’s offerings to modernise and rearchitect their cloud infrastructure to be secure by default and cost-optimised.

To hear more about GKE Workload Identity, check out blogs on No-code Workload Identity and Workload Identity Federation for Kubernetes Principals.